RT-DETR Improvement Strategies [RT-DETR and Mamba] | MLLA: Mamba-Like Linear Attention, an Attention Mechanism That Incorporates Mamba's Design Strengths

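1. Introduction

This article describes how to use the MLLA module to improve the RT-DETR object detection network.

The MLLA module models local features efficiently while also learning long-range interactions. Common modules tend to be strong at one of these and weak at the other; MLLA addresses this by combining the strengths of the Mamba model and the linear attention mechanism. Its structure keeps computation efficient while modeling local features and capturing long-range interaction information. Here it is applied to RT-DETR for model improvement and further customization, with the aim of focusing on the important feature regions of an image, suppressing interference from background and other irrelevant information, and highlighting the key features of target objects.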

2. Introduction to the MLLA Module

Demystify Mamba in Vision: A Linear Attention Perspective

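2.1 Motivation

An analysis of the relationship between Mamba and linear attention Transformers found that, among Mamba's special designs, the forget gate and the block design contribute most to its performance gains. The MLLA module aims to incorporate these two key designs into linear attention to improve its performance on vision tasks, while retaining the advantages of parallel computation and fast inference.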

2.2 Principles

2.2.1 Relationship between the selective state space model (selective SSM) and linear attention

- The selective SSM formulas

  $$h_{i}=\tilde{A}_{i}\odot h_{i-1}+B_{i}(\Delta_{i}\odot x_{i}), \qquad y_{i}=C_{i}h_{i}+D\odot x_{i}$$

  closely resemble the single-head linear attention formulas

  $$S_{i}=1\odot S_{i-1}+K_{i}^{\top}(1\odot V_{i}), \qquad y_{i}=Q_{i}S_{i}/Q_{i}Z_{i}+0\odot x_{i}$$

  The accompanying figure shows this structural similarity directly, which is the basis for viewing the selective SSM as a special variant of linear attention.

- Here $\Delta_{i}$ is the input gate, $\tilde{A}_{i}$ is the forget gate, and $D\odot x_{i}$ is a shortcut. The selective SSM has no normalization and resembles a single-head design.
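To make the recurrence concrete, the following minimal sketch evaluates single-head linear attention in its recurrent form, $S_{i}=S_{i-1}+K_{i}^{\top}V_{i}$ with $y_{i}=Q_{i}S_{i}/Q_{i}Z_{i}$, and checks it against an explicit sum over past tokens. It assumes $Z_{i}=\sum_{j\le i}K_{j}^{\top}$ (the usual normalizer, which the text above does not spell out) and uses plain Python lists with hypothetical helper names. It is an illustration only, not the MLLA implementation.

```python
def linear_attention_recurrent(Q, K, V):
    """Causal single-head linear attention via S_i = S_{i-1} + K_i^T V_i."""
    d_k, d_v = len(K[0]), len(V[0])
    S = [[0.0] * d_v for _ in range(d_k)]   # running state, shape (d_k, d_v)
    Z = [0.0] * d_k                          # running normalizer, sum of K_j
    outputs = []
    for q, k, v in zip(Q, K, V):
        for a in range(d_k):
            Z[a] += k[a]
            for b in range(d_v):
                S[a][b] += k[a] * v[b]       # rank-1 update K_i^T V_i
        denom = sum(q[a] * Z[a] for a in range(d_k))
        outputs.append([sum(q[a] * S[a][b] for a in range(d_k)) / denom
                        for b in range(d_v)])
    return outputs


def linear_attention_direct(Q, K, V):
    """Same quantity as a weighted sum over tokens j <= i with weights Q_i . K_j."""
    outputs = []
    for i, q in enumerate(Q):
        scores = [sum(qa * ka for qa, ka in zip(q, K[j])) for j in range(i + 1)]
        total = sum(scores)
        outputs.append([sum(s * V[j][b] for j, s in enumerate(scores)) / total
                        for b in range(len(V[0]))])
    return outputs


# Positive features (for example after elu(x) + 1) keep the denominator positive.
Q = [[1.0, 2.0], [0.5, 1.5], [2.0, 0.1]]
K = [[1.0, 0.5], [0.2, 1.0], [1.5, 1.5]]
V = [[1.0, 0.0, 2.0], [0.0, 1.0, 1.0], [3.0, 1.0, 0.0]]

y_rec = linear_attention_recurrent(Q, K, V)
y_dir = linear_attention_direct(Q, K, V)
max_diff = max(abs(a - b) for r1, r2 in zip(y_rec, y_dir) for a, b in zip(r1, r2))
print(max_diff < 1e-9)
```

The state `S` has a fixed size regardless of sequence length, which is what the two sets of formulas have in common: each new token only updates a running summary.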

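2.2.2 Properties and role of the forget gate

- The elements of the forget gate $\tilde{A}_{i}$ lie between 0 and 1. The gate introduces a local bias and provides positional information. Panel (b) of the accompanying figure, which shows the average forget-gate value in each layer, helps illustrate how its role varies across layers.

- The forget gate requires recurrent computation, however, which lowers throughput and makes it unsuitable for non-autoregressive vision models. Positional encodings such as APE, LePE, CPE, and RoPE can be used in its place. The accompanying table compares model performance with the forget gate and with different positional encodings, showing that replacing the forget gate with positional encoding is feasible.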
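The local bias can be seen numerically. In the minimal sketch below, with a scalar state and a constant forget gate (a simplification: in Mamba the gate is input-dependent and elementwise), unrolling $h_{i}=\tilde{A}\,h_{i-1}+x_{i}$ gives token $j$ a weight of $\tilde{A}^{\,i-j}$ at position $i$, so nearer tokens count more. The function names and the gate value 0.5 are illustrative assumptions.

```python
def effective_weights(forget_gates):
    """Weight of each input x_j in the final state of h_i = a_i * h_{i-1} + x_i.

    Unrolling the recurrence gives weight_j = a_{j+1} * a_{j+2} * ... * a_n,
    the product of all forget gates applied after token j.
    """
    n = len(forget_gates)
    weights = [1.0] * n
    for j in range(n - 2, -1, -1):
        weights[j] = weights[j + 1] * forget_gates[j + 1]
    return weights


def run_recurrence(forget_gates, inputs):
    """Reference: step through the recurrence, which is inherently sequential."""
    h = 0.0
    for a, x in zip(forget_gates, inputs):
        h = a * h + x
    return h


gates = [0.5] * 5                    # constant forget gate in (0, 1)
inputs = [1.0, 2.0, 3.0, 4.0, 5.0]

w = effective_weights(gates)
print(w)                             # [0.0625, 0.125, 0.25, 0.5, 1.0]
h_unrolled = sum(wj * xj for wj, xj in zip(w, inputs))
print(abs(h_unrolled - run_recurrence(gates, inputs)) < 1e-12)
```

The step-by-step loop in `run_recurrence` is the part that limits throughput; a positional encoding supplies position information without that sequential dependency.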

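2.2.3 Improved block design

- Mamba's block design combines H3 and Gated Attention and integrates several operations, making it more effective than the conventional Transformer block design.

- The MLLA module replaces the attention sub-block of a Transformer block with Mamba's block design, substitutes linear attention for the selective SSM, and adjusts the parameters accordingly.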

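2.3 Structure

Following the principles above, the MLLA module consists of input/output projections, $Q/K$ projections, a gating projection, linear attention, depthwise convolution (DWConv), and a multilayer perceptron (MLP). The accompanying architecture diagram of the MLLA model shows where each component sits and how the components connect.

Data is first projected and passed through a depthwise convolution; linear attention then aggregates information and the gating branch modulates the result; finally an MLP applies a nonlinear transformation to produce the output. Blocks of this form are stacked repeatedly.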

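2.4 Advantages

- Performance gains: strong results on image classification (ImageNet-1K), object detection (COCO), and semantic segmentation (ADE20K), surpassing a range of vision Mamba models.

- High computational efficiency: the model retains parallel computation and fast inference. Compared with Mamba models, MLLA infers markedly faster. For example, the MLLA model is 4.5 times faster than Mamba2D and 1.5 times faster than VMamba with better accuracy, which is an advantage for tasks such as processing high-resolution images.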

Paper: https://arxiv.org/pdf/2405.16605

Source code: https://github.com/LeapLabTHU/MLLA

3. MLLA Implementation Code

The implementation of MLLA and its improved variants is as follows:

```python
# This code implements concepts and methods described in the paper:
# "Demystify Mamba in Vision: A Linear Attention Perspective"
#
# Authors: Dongchen Han, Ziyi Wang, Zhuofan Xia, Yizeng Han, Yifan Pu, Chunjiang Ge, Jun Song, Shiji Song, Bo Zheng, Gao Huang
#
# Original Paper: https://arxiv.org/abs/2405.16605

import torch
import torch.nn as nn
import torch.nn.functional as F


def drop_path(x, drop_prob: float = 0., training: bool = False, scale_by_keep: bool = True):
    """Randomly drop whole samples on a residual path (stochastic depth)."""
    if drop_prob == 0. or not training:
        return x
    keep_prob = 1 - drop_prob
    shape = (x.shape[0],) + (1,) * (x.ndim - 1)  # work with diff dim tensors, not just 2D ConvNets
    random_tensor = x.new_empty(shape).bernoulli_(keep_prob)
    if keep_prob > 0.0 and scale_by_keep:
        random_tensor.div_(keep_prob)
    return x * random_tensor


class DropPath(nn.Module):
    """Drop paths (Stochastic Depth) per sample (when applied in main path of residual blocks)."""

    def __init__(self, drop_prob: float = 0., scale_by_keep: bool = True):
        super(DropPath, self).__init__()
        self.drop_prob = drop_prob
        self.scale_by_keep = scale_by_keep

    def forward(self, x):
        return drop_path(x, self.drop_prob, self.training, self.scale_by_keep)

    def extra_repr(self):
        return f'drop_prob={round(self.drop_prob,3):0.3f}'


class Mlp(nn.Module):
    def __init__(self, in_features, hidden_features=None, out_features=None, act_layer=nn.GELU, drop=0.):
        super().__init__()
        out_features = out_features or in_features
        hidden_features = hidden_features or in_features
        self.fc1 = nn.Linear(in_features, hidden_features)
        self.act = act_layer()
        self.fc2 = nn.Linear(hidden_features, out_features)
        self.drop = nn.Dropout(drop)

    def forward(self, x):
        x = self.fc1(x)
        x = self.act(x)
        x = self.drop(x)
        x = self.fc2(x)
        x = self.drop(x)
        return x


class ConvLayer(nn.Module):
    def __init__(self, in_channels, out_channels, kernel_size=3, stride=1, padding=0, dilation=1, groups=1,
                 bias=True, dropout=0, norm=nn.BatchNorm2d, act_func=nn.ReLU):
        super(ConvLayer, self).__init__()
        self.dropout = nn.Dropout2d(dropout, inplace=False) if dropout > 0 else None
        self.conv = nn.Conv2d(
            in_channels,
            out_channels,
            kernel_size=(kernel_size, kernel_size),
            stride=(stride, stride),
            padding=(padding, padding),
            dilation=(dilation, dilation),
            groups=groups,
            bias=bias,
        )
        self.norm = norm(num_features=out_channels) if norm else None
        self.act = act_func() if act_func else None

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        if self.dropout is not None:
            x = self.dropout(x)
        x = self.conv(x)
        if self.norm:
            x = self.norm(x)
        if self.act:
            x = self.act(x)
        return x


class RoPE(torch.nn.Module):
    r"""Rotary Positional Embedding."""

    def __init__(self, base=10000):
        super(RoPE, self).__init__()
        self.base = base

    def generate_rotations(self, x):
        # Read the input shape. Only the first spatial size (H) is taken here,
        # so channel_dims == [H] and feature_dim == C.
        *channel_dims, feature_dim = x.shape[1:-1][0], x.shape[-1]
        k_max = feature_dim // (2 * len(channel_dims))
        assert feature_dim % k_max == 0, "Feature dimension must be divisible by k_max"

        # Generate the rotation angles
        theta_ks = 1 / (self.base ** (torch.arange(k_max, dtype=x.dtype, device=x.device) / k_max))
        angles = torch.cat([t.unsqueeze(-1) * theta_ks for t in
                            torch.meshgrid([torch.arange(d, dtype=x.dtype, device=x.device) for d in channel_dims],
                                           indexing='ij')], dim=-1)

        # Real and imaginary parts of the rotations
        rotations_re = torch.cos(angles).unsqueeze(dim=-1)
        rotations_im = torch.sin(angles).unsqueeze(dim=-1)
        rotations = torch.cat([rotations_re, rotations_im], dim=-1)
        return rotations

    def forward(self, x):
        # Build the rotations for this input
        rotations = self.generate_rotations(x)

        # View x as complex numbers (pairs of adjacent channels)
        x_complex = torch.view_as_complex(x.reshape(*x.shape[:-1], -1, 2))

        # Apply the rotations
        pe_x = torch.view_as_complex(rotations) * x_complex

        # Convert back to real and flatten the last two dimensions
        return torch.view_as_real(pe_x).flatten(-2)


class LinearAttention(nn.Module):
    r""" Linear Attention with LePE and RoPE.

    Args:
        dim (int): Number of input channels.
        num_heads (int): Number of attention heads.
        qkv_bias (bool, optional): If True, add a learnable bias to query, key, value. Default: True
    """

    def __init__(self, dim, num_heads=4, qkv_bias=True, **kwargs):
        super().__init__()
        self.dim = dim
        self.num_heads = num_heads
        self.qk = nn.Linear(dim, dim * 2, bias=qkv_bias)
        self.elu = nn.ELU()
        self.lepe = nn.Conv2d(dim, dim, 3, padding=1, groups=dim)
        self.rope = RoPE()

    def forward(self, x):
        """
        Args:
            x: input features with shape of (B, N, C)
        """
        b, n, c = x.shape
        h = int(n ** 0.5)
        w = int(n ** 0.5)
        num_heads = self.num_heads
        head_dim = c // num_heads

        qk = self.qk(x).reshape(b, n, 2, c).permute(2, 0, 1, 3)
        q, k, v = qk[0], qk[1], x
        # q, k, v: b, n, c

        # elu(x) + 1 keeps the features positive
        q = self.elu(q) + 1.0
        k = self.elu(k) + 1.0
        q_rope = self.rope(q.reshape(b, h, w, c)).reshape(b, n, num_heads, head_dim).permute(0, 2, 1, 3)
        k_rope = self.rope(k.reshape(b, h, w, c)).reshape(b, n, num_heads, head_dim).permute(0, 2, 1, 3)
        q = q.reshape(b, n, num_heads, head_dim).permute(0, 2, 1, 3)
        k = k.reshape(b, n, num_heads, head_dim).permute(0, 2, 1, 3)
        v = v.reshape(b, n, num_heads, head_dim).permute(0, 2, 1, 3)

        # Normalizer, then K^T V first so the cost is linear in n
        z = 1 / (q @ k.mean(dim=-2, keepdim=True).transpose(-2, -1) + 1e-6)
        kv = (k_rope.transpose(-2, -1) * (n ** -0.5)) @ (v * (n ** -0.5))
        x = q_rope @ kv * z

        x = x.transpose(1, 2).reshape(b, n, c)
        v = v.transpose(1, 2).reshape(b, h, w, c).permute(0, 3, 1, 2)
        x = x + self.lepe(v).permute(0, 2, 3, 1).reshape(b, n, c)
        return x

    def extra_repr(self) -> str:
        return f'dim={self.dim}, num_heads={self.num_heads}'


class MLLABlock(nn.Module):
    r""" MLLA Block.

    Args:
        dim (int): Number of input channels.
        num_heads (int): Number of attention heads.
        mlp_ratio (float): Ratio of mlp hidden dim to embedding dim.
        qkv_bias (bool, optional): If True, add a learnable bias to query, key, value. Default: True
        drop (float, optional): Dropout rate. Default: 0.0
        drop_path (float, optional): Stochastic depth rate. Default: 0.0
        act_layer (nn.Module, optional): Activation layer. Default: nn.GELU
        norm_layer (nn.Module, optional): Normalization layer. Default: nn.LayerNorm
    """

    def __init__(self, dim, num_heads=4, mlp_ratio=4., qkv_bias=True, drop=0., drop_path=0.,
                 act_layer=nn.GELU, norm_layer=nn.LayerNorm, **kwargs):
        super().__init__()
        self.dim = dim
        self.num_heads = num_heads
        self.mlp_ratio = mlp_ratio

        self.cpe1 = nn.Conv2d(dim, dim, 3, padding=1, groups=dim)
        self.norm1 = norm_layer(dim)
        self.in_proj = nn.Linear(dim, dim)
        self.act_proj = nn.Linear(dim, dim)
        self.dwc = nn.Conv2d(dim, dim, 3, padding=1, groups=dim)
        self.act = nn.SiLU()
        self.attn = LinearAttention(dim=dim, num_heads=num_heads, qkv_bias=qkv_bias)
        self.out_proj = nn.Linear(dim, dim)
        self.drop_path = DropPath(drop_path) if drop_path > 0. else nn.Identity()

        self.cpe2 = nn.Conv2d(dim, dim, 3, padding=1, groups=dim)
        self.norm2 = norm_layer(dim)
        self.mlp = Mlp(in_features=dim, hidden_features=int(dim * mlp_ratio), act_layer=act_layer, drop=drop)

    def forward(self, x):
        # x: (B, C, H, W) feature map; a square map (H == W) is assumed below.
        B, C = x.size(0), x.size(1)
        # Flatten to tokens (B, N, C). flatten + transpose keeps each position's channels
        # together; a plain reshape of (B, C, H, W) would mix channels and positions.
        x = x.flatten(2).transpose(1, 2)
        L = x.size(1)
        H = int(L ** 0.5)
        W = int(L ** 0.5)
        assert L == H * W, "input feature has wrong size"

        x = x + self.cpe1(x.reshape(B, H, W, C).permute(0, 3, 1, 2)).flatten(2).permute(0, 2, 1)
        shortcut = x

        x = self.norm1(x)
        act_res = self.act(self.act_proj(x))  # gating branch
        x = self.in_proj(x).reshape(B, H, W, C)
        x = self.act(self.dwc(x.permute(0, 3, 1, 2))).permute(0, 2, 3, 1).reshape(B, L, C)

        # Linear Attention
        x = self.attn(x)

        x = self.out_proj(x * act_res)
        x = shortcut + self.drop_path(x)
        x = x + self.cpe2(x.reshape(B, H, W, C).permute(0, 3, 1, 2)).flatten(2).permute(0, 2, 1)

        # FFN
        x = x + self.drop_path(self.mlp(self.norm2(x)))

        # Back to (B, C, H, W)
        x = x.transpose(2, 1).reshape((B, C, H, W))
        return x

    def extra_repr(self) -> str:
        return f"dim={self.dim}, num_heads={self.num_heads}, mlp_ratio={self.mlp_ratio}"


def autopad(k, p=None, d=1):  # kernel, padding, dilation
    """Pad to 'same' shape outputs."""
    if d > 1:
        k = d * (k - 1) + 1 if isinstance(k, int) else [d * (x - 1) + 1 for x in k]  # actual kernel-size
    if p is None:
        p = k // 2 if isinstance(k, int) else [x // 2 for x in k]  # auto-pad
    return p


class Conv(nn.Module):
    """Standard convolution with args(ch_in, ch_out, kernel, stride, padding, groups, dilation, activation)."""

    default_act = nn.SiLU()  # default activation

    def __init__(self, c1, c2, k=1, s=1, p=None, g=1, d=1, act=True):
        """Initialize Conv layer with given arguments including activation."""
        super().__init__()
        self.conv = nn.Conv2d(c1, c2, k, s, autopad(k, p, d), groups=g, dilation=d, bias=False)
        self.bn = nn.BatchNorm2d(c2)
        self.act = self.default_act if act is True else act if isinstance(act, nn.Module) else nn.Identity()

    def forward(self, x):
        """Apply convolution, batch normalization and activation to input tensor."""
        return self.act(self.bn(self.conv(x)))

    def forward_fuse(self, x):
        """Apply convolution and activation without batch normalization."""
        return self.act(self.conv(x))


class ResNetBlock(nn.Module):
    """ResNet block with standard convolution layers."""

    def __init__(self, c1, c2, s=1, e=4):
        """Initialize convolution with given parameters."""
        super().__init__()
        c3 = e * c2
        self.cv1 = Conv(c1, c2, k=1, s=1, act=True)
        self.cv2 = Conv(c2, c2, k=3, s=s, p=1, act=True)
        self.cv3 = Conv(c2, c3, k=1, act=False)
        self.cv4 = MLLABlock(c2)  # MLLA block inserted between cv2 and cv3
        self.shortcut = nn.Sequential(Conv(c1, c3, k=1, s=s, act=False)) if s != 1 or c1 != c3 else nn.Identity()

    def forward(self, x):
        """Forward pass through the ResNet block."""
        return F.relu(self.cv3(self.cv4(self.cv2(self.cv1(x)))) + self.shortcut(x))


class ResNetLayer_MLLA(nn.Module):
    """ResNet layer with multiple ResNet blocks."""

    def __init__(self, c1, c2, s=1, is_first=False, n=1, e=4):
        """Initializes the ResNetLayer given arguments."""
        super().__init__()
        self.is_first = is_first

        if self.is_first:
            self.layer = nn.Sequential(
                Conv(c1, c2, k=7, s=2, p=3, act=True), nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
            )
        else:
            blocks = [ResNetBlock(c1, c2, s, e=e)]
            blocks.extend([ResNetBlock(e * c2, c2, 1, e=e) for _ in range(n - 1)])
            self.layer = nn.Sequential(*blocks)

    def forward(self, x):
        """Forward pass through the ResNet layer."""
        return self.layer(x)
```

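4. Improved Modules

4.1 Improvement 1 ⭐

Method: add the MLLABlock module directly (Section 5 covers the steps for adding it).

The resulting network configuration is given in Section 6.1.

4.2 Improvement 2 ⭐

Method: a ResNetLayer built on the MLLABlock module (Section 5 covers the steps for adding it).

The second approach modifies the ResNetLayer module in RT-DETR by inserting MLLABlock into it.

The modified code is as follows. MLLABlock is added to ResNetLayer, and the result is renamed ResNetLayer_MLLA: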

```python
class ResNetBlock(nn.Module):
    """ResNet block with standard convolution layers."""

    def __init__(self, c1, c2, s=1, e=4):
        """Initialize convolution with given parameters."""
        super().__init__()
        c3 = e * c2
        self.cv1 = Conv(c1, c2, k=1, s=1, act=True)
        self.cv2 = Conv(c2, c2, k=3, s=s, p=1, act=True)
        self.cv3 = Conv(c2, c3, k=1, act=False)
        self.cv4 = MLLABlock(c2)
        self.shortcut = nn.Sequential(Conv(c1, c3, k=1, s=s, act=False)) if s != 1 or c1 != c3 else nn.Identity()

    def forward(self, x):
        """Forward pass through the ResNet block."""
        return F.relu(self.cv3(self.cv4(self.cv2(self.cv1(x)))) + self.shortcut(x))


class ResNetLayer_MLLA(nn.Module):
    """ResNet layer with multiple ResNet blocks."""

    def __init__(self, c1, c2, s=1, is_first=False, n=1, e=4):
        """Initializes the ResNetLayer given arguments."""
        super().__init__()
        self.is_first = is_first

        if self.is_first:
            self.layer = nn.Sequential(
                Conv(c1, c2, k=7, s=2, p=3, act=True), nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
            )
        else:
            blocks = [ResNetBlock(c1, c2, s, e=e)]
            blocks.extend([ResNetBlock(e * c2, c2, 1, e=e) for _ in range(n - 1)])
            self.layer = nn.Sequential(*blocks)

    def forward(self, x):
        """Forward pass through the ResNet layer."""
        return self.layer(x)
```

Note ❗: the module names that need to be declared in Section 5 are MLLABlock and ResNetLayer_MLLA.

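5. Steps for Adding the Module

5.1 Modification 1

① Create an AddModules folder under ultralytics/nn/ to hold the module code.

② Create MLLA.py in the AddModules folder and paste the code from Section 3 into it.

5.2 Modification 2

Create __init__.py in the AddModules folder (skip this if it already exists) and import the module in it:

```python
from .MLLA import *
```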

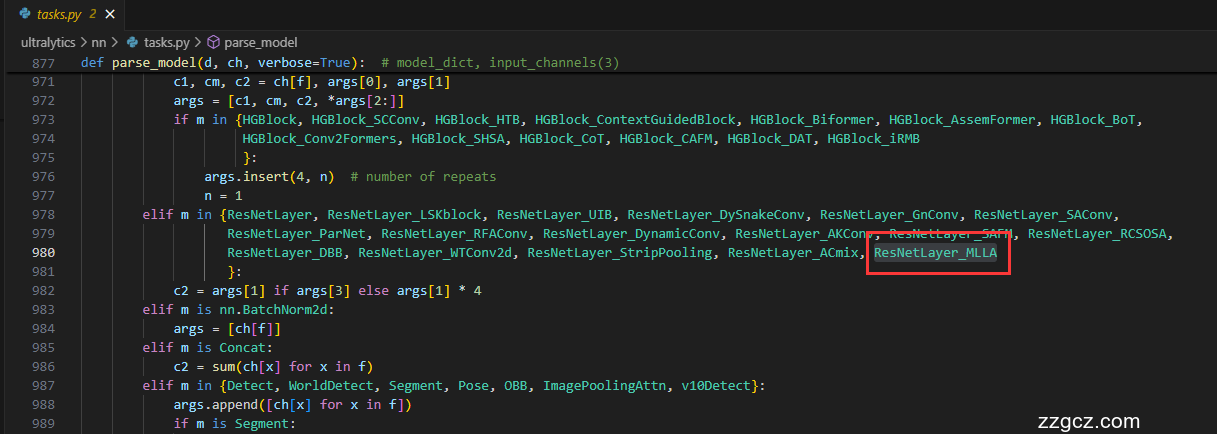

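5.3 Modification 3

In ultralytics/nn/tasks.py, the module class names need to be added in the following places.

First, import the modules.

Second, register the ResNetLayer_MLLA module in the parse_model function.

Finally, add the following code to the parse_model function: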

```python
elif m in {MLLABlock}:
    c2 = ch[f]
    args = [c2, *args]
```
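For context, the branch above fills in MLLABlock's `dim` argument from the channel count of the layer it reads from, which is why the YAML entry can leave its argument list empty. The sketch below mimics that bookkeeping in isolation. `infer_mlla_args` is a hypothetical helper, and `ch` is a plain list standing in for the per-layer output-channel list that `parse_model` maintains; the channel values are read off the model summary printed at the end of this article.

```python
def infer_mlla_args(ch, f, args):
    """Mimic the MLLABlock branch: prepend the input channel count to the YAML args.

    ch   -- output channels of every layer built so far (stand-in for parse_model's list)
    f    -- 'from' index of the layer, e.g. -1 for the previous layer
    args -- args taken from the YAML entry (empty for MLLABlock in this article)
    """
    c2 = ch[f]               # MLLABlock keeps the channel count, so output == input
    return c2, [c2, *args]


# Output channels of backbone layers 0..8 of rtdetr-l-MLLA (from the printed summary).
ch = [48, 128, 128, 512, 512, 1024, 1024, 1024, 1024]
c2, args = infer_mlla_args(ch, -1, [])
print(c2, args)              # 1024 [1024]
```

The result matches the `[1024]` shown in the arguments column for MLLABlock in the printed summary.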

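6. YAML Model Files

6.1 Improved model, version 1

Taking ultralytics/cfg/models/rt-detr/rtdetr-l.yaml as the example, create a model file named rtdetr-l-MLLA.yaml in the same directory for training on your own dataset.

Copy the contents of rtdetr-l.yaml into rtdetr-l-MLLA.yaml and set nc to the number of object classes in your dataset.

📌 The model is modified by adding the MLLABlock module to the backbone.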

```yaml
# Ultralytics YOLO 🚀, AGPL-3.0 license
# RT-DETR-l object detection model with P3-P5 outputs. For details see https://docs.ultralytics.com/models/rtdetr

# Parameters
nc: 1 # number of classes
scales: # model compound scaling constants, i.e. 'model=yolov8n-cls.yaml' will call yolov8-cls.yaml with scale 'n'
  # [depth, width, max_channels]
  l: [1.00, 1.00, 1024]

backbone:
  # [from, repeats, module, args]
  - [-1, 1, HGStem, [32, 48]] # 0-P2/4
  - [-1, 6, HGBlock, [48, 128, 3]] # stage 1
  - [-1, 1, DWConv, [128, 3, 2, 1, False]] # 2-P3/8
  - [-1, 6, HGBlock, [96, 512, 3]] # stage 2
  - [-1, 1, DWConv, [512, 3, 2, 1, False]] # 4-P4/16
  - [-1, 6, HGBlock, [192, 1024, 5, True, False]] # cm, c2, k, light, shortcut
  - [-1, 6, HGBlock, [192, 1024, 5, True, True]]
  - [-1, 6, HGBlock, [192, 1024, 5, True, True]] # stage 3
  - [-1, 1, DWConv, [1024, 3, 2, 1, False]] # 8-P5/32
  - [-1, 1, MLLABlock, []] # 9, added MLLABlock
  - [-1, 6, HGBlock, [384, 2048, 5, True, False]] # 10, stage 4

head:
  - [-1, 1, Conv, [256, 1, 1, None, 1, 1, False]] # 11 input_proj.2
  - [-1, 1, AIFI, [1024, 8]]
  - [-1, 1, Conv, [256, 1, 1]] # 13, Y5, lateral_convs.0

  - [-1, 1, nn.Upsample, [None, 2, "nearest"]]
  - [7, 1, Conv, [256, 1, 1, None, 1, 1, False]] # 15 input_proj.1
  - [[-2, -1], 1, Concat, [1]]
  - [-1, 3, RepC3, [256]] # 17, fpn_blocks.0
  - [-1, 1, Conv, [256, 1, 1]] # 18, Y4, lateral_convs.1

  - [-1, 1, nn.Upsample, [None, 2, "nearest"]]
  - [3, 1, Conv, [256, 1, 1, None, 1, 1, False]] # 20 input_proj.0
  - [[-2, -1], 1, Concat, [1]] # cat backbone P4
  - [-1, 3, RepC3, [256]] # X3 (22), fpn_blocks.1

  - [-1, 1, Conv, [256, 3, 2]] # 23, downsample_convs.0
  - [[-1, 18], 1, Concat, [1]] # cat Y4
  - [-1, 3, RepC3, [256]] # F4 (25), pan_blocks.0

  - [-1, 1, Conv, [256, 3, 2]] # 26, downsample_convs.1
  - [[-1, 13], 1, Concat, [1]] # cat Y5
  - [-1, 3, RepC3, [256]] # F5 (28), pan_blocks.1

  - [[22, 25, 28], 1, RTDETRDecoder, [nc]] # Detect(P3, P4, P5)
```
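Because MLLABlock is inserted as layer 9, every later layer moves down by one. Absolute `from` references in the head that point at or past the insertion point therefore have to be incremented, while references to earlier layers and relative references (negative values) that do not cross the insertion point stay the same. The sketch below shows that rule with a hypothetical helper, `shift_from`, applied to the values used in the stock rtdetr-l.yaml head. It illustrates the bookkeeping only and is not part of Ultralytics.

```python
def shift_from(f, insert_at):
    """Adjust a YAML 'from' field after inserting one layer at index insert_at."""
    if isinstance(f, list):
        return [shift_from(item, insert_at) for item in f]
    # Negative values are relative and left alone; earlier absolute indices are unaffected.
    return f + 1 if f >= insert_at else f


insert_at = 9  # MLLABlock becomes layer 9 in rtdetr-l-MLLA.yaml

print(shift_from(7, insert_at))             # 7
print(shift_from([-1, 17], insert_at))      # [-1, 18]
print(shift_from([-1, 12], insert_at))      # [-1, 13]
print(shift_from([21, 24, 27], insert_at))  # [22, 25, 28]
```

The outputs match the `from` fields in the modified head: the Y4 and Y5 concatenations and the decoder inputs each move by one, while the references to backbone layers 3 and 7 are unchanged.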

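6.2 Improved model, version 2 ⭐

Taking ultralytics/cfg/models/rt-detr/rtdetr-resnet50.yaml as the example, create a model file named rtdetr-ResNetLayer_MLLA.yaml in the same directory for training on your own dataset.

Copy the contents of rtdetr-resnet50.yaml into rtdetr-ResNetLayer_MLLA.yaml and set nc to the number of object classes in your dataset.

📌 The model is modified by replacing the ResNetLayer modules in the backbone with ResNetLayer_MLLA modules.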

```yaml
# Ultralytics YOLO 🚀, AGPL-3.0 license
# RT-DETR-ResNet50 object detection model with P3-P5 outputs.

# Parameters
nc: 1 # number of classes
scales: # model compound scaling constants, i.e. 'model=yolov8n-cls.yaml' will call yolov8-cls.yaml with scale 'n'
  # [depth, width, max_channels]
  l: [1.00, 1.00, 1024]

backbone:
  # [from, repeats, module, args]
  - [-1, 1, ResNetLayer_MLLA, [3, 64, 1, True, 1]] # 0
  - [-1, 1, ResNetLayer_MLLA, [64, 64, 1, False, 3]] # 1
  - [-1, 1, ResNetLayer_MLLA, [256, 128, 2, False, 4]] # 2
  - [-1, 1, ResNetLayer_MLLA, [512, 256, 2, False, 6]] # 3
  - [-1, 1, ResNetLayer_MLLA, [1024, 512, 2, False, 3]] # 4

head:
  - [-1, 1, Conv, [256, 1, 1, None, 1, 1, False]] # 5
  - [-1, 1, AIFI, [1024, 8]]
  - [-1, 1, Conv, [256, 1, 1]] # 7

  - [-1, 1, nn.Upsample, [None, 2, "nearest"]]
  - [3, 1, Conv, [256, 1, 1, None, 1, 1, False]] # 9
  - [[-2, -1], 1, Concat, [1]]
  - [-1, 3, RepC3, [256]] # 11
  - [-1, 1, Conv, [256, 1, 1]] # 12

  - [-1, 1, nn.Upsample, [None, 2, "nearest"]]
  - [2, 1, Conv, [256, 1, 1, None, 1, 1, False]] # 14
  - [[-2, -1], 1, Concat, [1]] # cat backbone P4
  - [-1, 3, RepC3, [256]] # X3 (16), fpn_blocks.1

  - [-1, 1, Conv, [256, 3, 2]] # 17, downsample_convs.0
  - [[-1, 12], 1, Concat, [1]] # cat Y4
  - [-1, 3, RepC3, [256]] # F4 (19), pan_blocks.0

  - [-1, 1, Conv, [256, 3, 2]] # 20, downsample_convs.1
  - [[-1, 7], 1, Concat, [1]] # cat Y5
  - [-1, 3, RepC3, [256]] # F5 (22), pan_blocks.1

  - [[16, 19, 22], 1, RTDETRDecoder, [nc]] # Detect(P3, P4, P5)
```
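Once the YAML files are in place, training can be launched through the Ultralytics Python API. The following is a minimal sketch: `train_rtdetr_mlla` is a hypothetical wrapper, and the dataset file name and hyperparameter defaults are placeholders to replace with your own. A square `imgsz` is used because MLLABlock derives the feature-map height and width as the square root of the token count.

```python
def train_rtdetr_mlla(cfg, data, epochs=100, imgsz=640, batch=4):
    """Build RT-DETR from a custom YAML and start training (illustrative wrapper)."""
    from ultralytics import RTDETR  # imported lazily so this file loads without ultralytics

    model = RTDETR(cfg)             # cfg: path to one of the YAML files from this section
    return model.train(data=data, epochs=epochs, imgsz=imgsz, batch=batch)


# Example call (both paths are placeholders for your own files):
# train_rtdetr_mlla("ultralytics/cfg/models/rt-detr/rtdetr-l-MLLA.yaml", "my_dataset.yaml")
```

Building the model from the YAML is also a quick way to confirm that the modules were registered correctly before committing to a full training run.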

7. Successful Run Results

Printing the network shows that MLLABlock and ResNetLayer_MLLA have been added to the model, which is now ready for training.

rtdetr-l-MLLA:

```
rtdetr-l-MLLA summary: 702 layers, 46,494,915 parameters, 46,494,915 gradients, 118.9 GFLOPs

              from  n    params  module                                            arguments
  0             -1  1     25248  ultralytics.nn.modules.block.HGStem               [3, 32, 48]
  1             -1  6    155072  ultralytics.nn.modules.block.HGBlock              [48, 48, 128, 3, 6]
  2             -1  1      1408  ultralytics.nn.modules.conv.DWConv                [128, 128, 3, 2, 1, False]
  3             -1  6    839296  ultralytics.nn.modules.block.HGBlock              [128, 96, 512, 3, 6]
  4             -1  1      5632  ultralytics.nn.modules.conv.DWConv                [512, 512, 3, 2, 1, False]
  5             -1  6   1695360  ultralytics.nn.modules.block.HGBlock              [512, 192, 1024, 5, 6, True, False]
  6             -1  6   2055808  ultralytics.nn.modules.block.HGBlock              [1024, 192, 1024, 5, 6, True, True]
  7             -1  6   2055808  ultralytics.nn.modules.block.HGBlock              [1024, 192, 1024, 5, 6, True, True]
  8             -1  1     11264  ultralytics.nn.modules.conv.DWConv                [1024, 1024, 3, 2, 1, False]
  9             -1  1  13686784  ultralytics.nn.AddModules.MLLA.MLLABlock          [1024]
 10             -1  6   6708480  ultralytics.nn.modules.block.HGBlock              [1024, 384, 2048, 5, 6, True, False]
 11             -1  1    524800  ultralytics.nn.modules.conv.Conv                  [2048, 256, 1, 1, None, 1, 1, False]
 12             -1  1    789760  ultralytics.nn.modules.transformer.AIFI           [256, 1024, 8]
 13             -1  1     66048  ultralytics.nn.modules.conv.Conv                  [256, 256, 1, 1]
 14             -1  1         0  torch.nn.modules.upsampling.Upsample              [None, 2, 'nearest']
 15              7  1    262656  ultralytics.nn.modules.conv.Conv                  [1024, 256, 1, 1, None, 1, 1, False]
 16       [-2, -1]  1         0  ultralytics.nn.modules.conv.Concat                [1]
 17             -1  3   2232320  ultralytics.nn.modules.block.RepC3                [512, 256, 3]
 18             -1  1     66048  ultralytics.nn.modules.conv.Conv                  [256, 256, 1, 1]
 19             -1  1         0  torch.nn.modules.upsampling.Upsample              [None, 2, 'nearest']
 20              3  1    131584  ultralytics.nn.modules.conv.Conv                  [512, 256, 1, 1, None, 1, 1, False]
 21       [-2, -1]  1         0  ultralytics.nn.modules.conv.Concat                [1]
 22             -1  3   2232320  ultralytics.nn.modules.block.RepC3                [512, 256, 3]
 23             -1  1    590336  ultralytics.nn.modules.conv.Conv                  [256, 256, 3, 2]
 24       [-1, 18]  1         0  ultralytics.nn.modules.conv.Concat                [1]
 25             -1  3   2232320  ultralytics.nn.modules.block.RepC3                [512, 256, 3]
 26             -1  1    590336  ultralytics.nn.modules.conv.Conv                  [256, 256, 3, 2]
 27       [-1, 13]  1         0  ultralytics.nn.modules.conv.Concat                [1]
 28             -1  3   2232320  ultralytics.nn.modules.block.RepC3                [512, 256, 3]
 29   [22, 25, 28]  1   7303907  ultralytics.nn.modules.head.RTDETRDecoder         [1, [256, 256, 256]]
```
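As a sanity check on the listing above, the parameter count reported for MLLABlock at layer 9 can be reproduced by hand from the layer definitions in the implementation code. The sketch below adds up weights and biases for dim = 1024 with the default mlp_ratio of 4; the helper names are hypothetical, and the per-layer formulas are the standard ones for `nn.Linear`, depthwise `nn.Conv2d`, and `nn.LayerNorm` with biases enabled.

```python
def linear_params(c_in, c_out):
    """nn.Linear with bias: weight matrix plus bias vector."""
    return c_in * c_out + c_out


def dwconv3x3_params(dim):
    """Depthwise 3x3 nn.Conv2d (groups=dim) with bias: 9 weights + 1 bias per channel."""
    return dim * 9 + dim


def layernorm_params(dim):
    """nn.LayerNorm: per-channel scale and shift."""
    return 2 * dim


def mlla_block_params(dim, mlp_ratio=4):
    """Total learnable parameters of MLLABlock(dim); RoPE, ELU and SiLU have none."""
    hidden = int(dim * mlp_ratio)
    total = 0
    total += dwconv3x3_params(dim)          # cpe1
    total += layernorm_params(dim)          # norm1
    total += linear_params(dim, dim)        # in_proj
    total += linear_params(dim, dim)        # act_proj
    total += dwconv3x3_params(dim)          # dwc
    total += linear_params(dim, 2 * dim)    # attn.qk
    total += dwconv3x3_params(dim)          # attn.lepe
    total += linear_params(dim, dim)        # out_proj
    total += dwconv3x3_params(dim)          # cpe2
    total += layernorm_params(dim)          # norm2
    total += linear_params(dim, hidden)     # mlp.fc1
    total += linear_params(hidden, dim)     # mlp.fc2
    return total


print(mlla_block_params(1024))  # 13686784
```

The total simplifies to 13·dim² + 54·dim, so the block's size is dominated by its linear projections and MLP, and it grows quadratically with the channel width of the stage where it is inserted.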

rtdetr-ResNetLayer_MLLA:

```
rtdetr-ResNetLayer_MLLA summary: 929 layers, 59,313,827 parameters, 59,313,827 gradients, 175.1 GFLOPs

              from  n    params  module                                            arguments
  0             -1  1      9536  ultralytics.nn.AddModules.MLLA.ResNetLayer_MLLA   [3, 64, 1, True, 1]
  1             -1  1    385920  ultralytics.nn.AddModules.MLLA.ResNetLayer_MLLA   [64, 64, 1, False, 3]
  2             -1  1   2099200  ultralytics.nn.AddModules.MLLA.ResNetLayer_MLLA   [256, 128, 2, False, 4]
  3             -1  1  12293120  ultralytics.nn.AddModules.MLLA.ResNetLayer_MLLA   [512, 256, 2, False, 6]
  4             -1  1  25271296  ultralytics.nn.AddModules.MLLA.ResNetLayer_MLLA   [1024, 512, 2, False, 3]
  5             -1  1    524800  ultralytics.nn.modules.conv.Conv                  [2048, 256, 1, 1, None, 1, 1, False]
  6             -1  1    789760  ultralytics.nn.modules.transformer.AIFI           [256, 1024, 8]
  7             -1  1     66048  ultralytics.nn.modules.conv.Conv                  [256, 256, 1, 1]
  8             -1  1         0  torch.nn.modules.upsampling.Upsample              [None, 2, 'nearest']
  9              3  1    262656  ultralytics.nn.modules.conv.Conv                  [1024, 256, 1, 1, None, 1, 1, False]
 10       [-2, -1]  1         0  ultralytics.nn.modules.conv.Concat                [1]
 11             -1  3   2232320  ultralytics.nn.modules.block.RepC3                [512, 256, 3]
 12             -1  1     66048  ultralytics.nn.modules.conv.Conv                  [256, 256, 1, 1]
 13             -1  1         0  torch.nn.modules.upsampling.Upsample              [None, 2, 'nearest']
 14              2  1    131584  ultralytics.nn.modules.conv.Conv                  [512, 256, 1, 1, None, 1, 1, False]
 15       [-2, -1]  1         0  ultralytics.nn.modules.conv.Concat                [1]
 16             -1  3   2232320  ultralytics.nn.modules.block.RepC3                [512, 256, 3]
 17             -1  1    590336  ultralytics.nn.modules.conv.Conv                  [256, 256, 3, 2]
 18       [-1, 12]  1         0  ultralytics.nn.modules.conv.Concat                [1]
 19             -1  3   2232320  ultralytics.nn.modules.block.RepC3                [512, 256, 3]
 20             -1  1    590336  ultralytics.nn.modules.conv.Conv                  [256, 256, 3, 2]
 21        [-1, 7]  1         0  ultralytics.nn.modules.conv.Concat                [1]
 22             -1  3   2232320  ultralytics.nn.modules.block.RepC3                [512, 256, 3]
 23   [16, 19, 22]  1   7303907  ultralytics.nn.modules.head.RTDETRDecoder         [1, [256, 256, 256]]
```