一、本文介绍

本文给大家带来的最新改进机制是 融合改进 ,最近有好几个读者和我反应单独的机制都能够涨点,一融合起来就掉点,这是大家不了解其中的原理(这也是为什么我每一个机制都给大家讲解一下原理, 大家要明白其中的每个单独的机制涨点原理然后才能够更好的融合,有一些结构是有冲突的 ) ,不知道哪些模块和那些模块融合起来才能够涨点。所以本文给大家带来的改进机制是融合 BiFPN+ MobileNetv4 的融合改进机制, 同时本文的MobileNetV4可以替换本专栏的其它任何主干网络机制.。

| 训练信息:YOLO11-BiFPN-MobileNetV4 summary: 436 layers, 1,999,494 parameters, 1,999,478 gradients, 5.1 GFLOPs |

| 基础版本:319 layers, 2624080 parameters, 2624064 gradients, 6.6 GFLOPs |

二、MobileNetv4基本原理

官方论文地址: 官方论文地址点击此处即可跳转

官方代码地址: 官方代码地址点击此处即可跳转

MobileNetV4 是MobileNet系列的最新版本,专为移动设备设计,引入了多种新颖且高效的架构组件。其中最关键的是通用反转瓶颈(UIB),它结合了以前模型如 MobileNetV2 的反转瓶颈和新元素,例如ConvNext块和视觉变换器(ViT)中的前馈网络。这种结构允许在不过度复杂化架构搜索过程的情况下,适应性地并有效地扩展模型到各种平台。

此外,MobileNetV4还包括一种名为Mobile MQA的新型注意力机制,该机制通过优化算术运算与内存访问的比率,显著提高了移动加速器上的推理速度,这是移动 性能 的关键因素。该架构通过精细的 神经网络 架构搜索(NAS)和新颖的蒸馏技术进一步优化,使得MobileNetV4能够在多种 硬件 平台上达到最优性能,包括移动CPU、DSP、GPU和特定的加速器,如Apple的Neural Engine和Google的Pixel EdgeTPU。

此外,MobileNetV4还引入了改进的NAS策略,通过粗粒度和细粒度搜索相结合的方法,显著提高搜索效率并改善模型质量。通过这种方法,MobileNetV4能够实现大多数情况下的Pareto最优性能,这意味着在不同设备上都能达到效率和准确性的最佳平衡。

最后,通过一种新的蒸馏技术,MobileNetV4进一步提高了准确性,其混合型大模型在ImageNet-1K数据集上达到了87%的顶级准确率,同时在Pixel 8 EdgeTPU上的运行时间仅为3.8毫秒。这些特性使MobileNetV4成为适用于移动环境中高效视觉任务的理想选择。

主要思想提取和总结:

1. 通用反转瓶颈(UIB),本文利用的机制:

MobileNetV4引入了一种名为通用反转瓶颈(UIB)的新架构组件。UIB是一个灵活的架构单元,融合了反转瓶颈(IB)、ConvNext、前馈网络(FFN),以及新颖的额外深度(ExtraDW)变体。

2. Mobile MQA注意力机制:

为了优化移动加速器的性能,MobileNetV4设计了一个特殊的注意力模块,名为Mobile MQA。这一模块针对移动设备的计算和存储限制进行了优化,提供了高达39%的推理速度提升。

3. 优化的神经架构搜索(NAS)配方:

通过改进的NAS配方,MobileNetV4能够更高效地搜索和优化网络架构,这有助于发现适合特定硬件的最优模型配置。

4. 模型蒸馏技术:

引入了一种新的蒸馏技术,用以提高模型的准确性。通过这种技术,MNv4-Hybrid-Large模型在ImageNet-1K上达到了87%的准确率,并且在Pixel 8 EdgeTPU上的运行时间仅为3.8毫秒。

个人总结: MobileNetV4是一个专为移动设备设计的高效 深度学习 模型。它通过整合多种先进技术,如通用反转瓶颈(UIB)、针对移动设备优化的注意力机制(Mobile MQA),以及先进的架构搜索方法(NAS),实现了在不同硬件上的高效运行。这些技术的融合不仅大幅提升了模型的运行速度,还显著提高了准确率。特别是,它的一个变体模型在标准图像识别测试中取得了87%的准确率,运行速度极快。

三、 BiFPN 原理

论文地址: 论文官方地址

代码地址: 官方代码地址

2.1 BiFPN的基本原理

BiFPN(Bidirectional Feature Pyramid Network) ,双向特征金字塔网络是一种 高效的多尺度特征融合网络 ,它在传统特征金字塔网络(FPN)的基础上进行了优化。 主要特点 包括:

1. 高效的双向跨尺度连接: BiFPN通过在自顶向下和自底向上路径之间建立双向连接,允许不同尺度特征间的信息更有效地流动和融合。

2. 简化的网络结构: BiFPN通过删除只有一个输入边的节点、在同一层级的输入和输出节点间添加额外边,以及将每个双向路径视为一个特征网络层并重复多次,来优化跨尺度连接。

3. 加权特征融合: BiFPN引入了可学习的权重来确定不同输入特征的重要性,从而提高了特征融合的效果。

我们可以将其基本原理概括分为以下几点:

1. 双向特征融合: BiFPN允许特征在自顶向下和自底向上两个方向上进行融合,从而更有效地结合不同尺度的特征。

2. 加权融合机制: BiFPN通过为每个输入特征添加权重来优化特征融合过程,使得网络可以更加重视信息量更大的特征。

3. 结构优化: BiFPN通过移除只有一个输入边的节点、添加同一层级的输入输出节点之间的额外边,并将每个双向路径视为一个特征网络层,来优化跨尺度连接。

我将通过下图为大家对比展示BiFPN与 其他四种不同特征金字塔网络设计的不同 以及BiFPN如何 更有效地整合特征 :

(a) FPN (Feature Pyramid Network):

引入了自顶向下的路径来融合从第3层到第7层(P3 - P7)的多尺度特征。

(b) PANet:

在FPN的基础上增加了自底向上的额外路径。

(c) NAS-FPN:

使用神经架构搜索(NAS)来找到不规则的特征网络拓扑,然后重复应用相同的块。

(d) BiFPN:

通过高效的双向跨尺度连接和重复的块结构,改进了准确度和效率之间的权衡。

我们可以看出BiFPN通过双向路径 允许特征信息在不同尺度间双向流动 ,这种双向流动可以看做是在不同尺度之间进行有效信息交换。这样的设计旨在通过 强化特征的双向流动 来提升特征融合的效率和有效性,从而提高目标检测的性能。

2.2 双向特征融合

双向特征融合 在BiFPN(双向特征金字塔网络)中指的是一种机制,它允许在特征网络层中的信息在自顶向下和自底向上两个方向上流动和融合。这种方法与传统的单向特征金字塔网络(如PANet)相比,能够 在不同层级之间更高效地融合特征,而无需增加显著的计算成本。

在BiFPN中,每一条双向路径(自顶向下和自底向上)被视作一个单独的特征网络层,然后这些层可以被重复多次,以促进更高级别的特征融合。这样做的结果是一个简化的双向网络,它增强了网络对特征融合的能力,使网络能够更有效地利用不同尺度的信息,从而提高目标检测的性能。

下图展示的是 EfficientDet架构的具体细节 ,其中包含了EfficientNet作为骨干网络(backbone),以及BiFPN作为特征网络的使用。在这个架构中,BiFPN层通过其双向特征融合的能力,从EfficientNet骨干网络接收多尺度的输入特征,然后生成用于对象分类和边框预测的富有表现力的特征。

在BiFPN层中,我们可以看到不同尺度的特征(P2至P7)如何通过 上下双向路径进行融合 。这种结构设计的目的是在保持计算效率的同时最大化特征融合的效果,以提高对象检测的整体性能。图中还显示了类别预测网络和边框预测网络,这些是在BiFPN特征融合后用于预测对象类别和定位对象边界框的网络部分。

2.3 加权融合机制

加权融合机制 是BiFPN中用于 改进特征融合效果 的一种技术。在传统的特征金字塔网络中,所有输入特征通常在没有区分的情况下等同对待,这意味着不同分辨率的特征被简单地相加在一起,而不考虑它们对输出特征的不同贡献。然而,在BiFPN中,观察到由于不同的输入特征具有不同的分辨率,它们通常 对输出特征的贡献是不等的 。

为了解决这个问题,BiFPN提出了 为每个输入添加一个额外的权重 ,并让网络学习每个输入特征的重要性:

其中

是一个可学习的权重,可以是标量(每个特征),向量(每个通道)或多维张量(每个像素)。这些权重是可学习的,可以是标量(针对每个特征),向量(针对每个通道),或者多维张量(针对每个像素)。这种加权融合方法可以在最小化计算成本的同时实现与其他方法可比的准确度。

是一个可学习的权重,可以是标量(每个特征),向量(每个通道)或多维张量(每个像素)。这些权重是可学习的,可以是标量(针对每个特征),向量(针对每个通道),或者多维张量(针对每个像素)。这种加权融合方法可以在最小化计算成本的同时实现与其他方法可比的准确度。

2.4 结构优化

结构优化 是为了在不同的资源约束下,通过复合缩放方法确定不同的层数,从而在保持效率的同时提高准确性。我们通过分析观察BiFPN的设计, 其结构优化包括:

1. 简化的双向网络: 通过优化结构,减少了网络中的节点数,特别是移除了那些只有一个输入边的节点。这种简化的直觉是如果一个节点没有进行特征融合,即它只有一个输入边,那么它对于融合不同特征的特征网络的贡献会更小。

2. 增加额外的边缘 :在相同层级的原始输入和输出节点之间增加了额外的边缘,以便在不显著增加成本的情况下融合更多的特征。

3. 重复使用双向路径: 与只有单一自顶向下和自底向上路径的PANet不同,BiFPN将每条双向(自顶向下和自底向上)路径视为一个特征网络层,并重复多次,以实现更高级别的特征融合。

四、手把手教你修改BiFPN

BiFPN核心代码

- import torch.nn as nn

- import torch

- class swish(nn.Module):

- def forward(self, x):

- return x * torch.sigmoid(x)

- class Bi_FPN(nn.Module):

- def __init__(self, length):

- super().__init__()

- self.weight = nn.Parameter(torch.ones(length, dtype=torch.float32), requires_grad=True)

- self.swish = swish()

- self.epsilon = 0.0001

- def forward(self, x):

- weights = self.weight / (torch.sum(self.swish(self.weight), dim=0) + self.epsilon) # 权重归一化处理

- weighted_feature_maps = [weights[i] * x[i] for i in range(len(x))]

- stacked_feature_maps = torch.stack(weighted_feature_maps, dim=0)

- result = torch.sum(stacked_feature_maps, dim=0)

- return result

4.1 修改一

第一还是建立文件,我们找到如下ultralytics/nn文件夹下建立一个目录名字呢就是'Addmodules'文件夹( 用群内的文件的话已经有了无需新建) !然后在其内部建立一个新的py文件将核心代码复制粘贴进去即可。

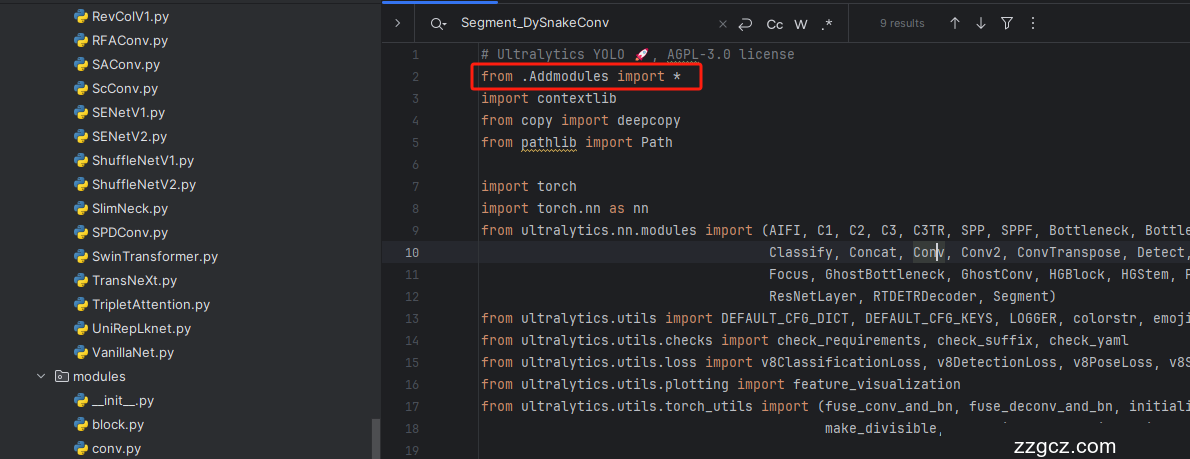

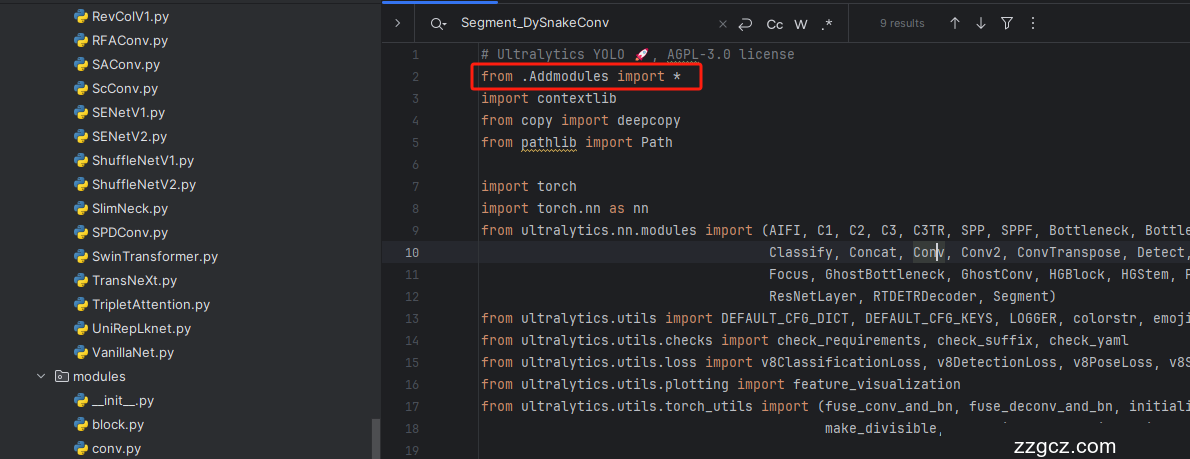

4.2 修改二

第二步我们在该目录下创建一个新的py文件名字为'__init__.py'( 用群内的文件的话已经有了无需新建) ,然后在其内部导入我们的检测头如下图所示。

4.3 修改三

第三步我门中到如下文件'ultralytics/nn/tasks.py'进行导入和注册我们的模块( 用群内的文件的话已经有了无需重新导入直接开始第四步即可) !

4.4 修改四

按照我的添加在ultralytics/nn/tasks.py文件下的parse_model里添加即可。

- elif m is Bi_FPN:

- length = len([ch[x] for x in f])

- args = [length]

五、手把手教你添加MobileNetv4

MobileNetv4核心代码

- from typing import Optional

- import torch

- import torch.nn as nn

- import torch.nn.functional as F

- __all__ = ['MobileNetV4ConvLarge', 'MobileNetV4ConvSmall', 'MobileNetV4ConvMedium', 'MobileNetV4HybridMedium', 'MobileNetV4HybridLarge']

- MNV4ConvSmall_BLOCK_SPECS = {

- "conv0": {

- "block_name": "convbn",

- "num_blocks": 1,

- "block_specs": [

- [3, 32, 3, 2]

- ]

- },

- "layer1": {

- "block_name": "convbn",

- "num_blocks": 2,

- "block_specs": [

- [32, 32, 3, 2],

- [32, 32, 1, 1]

- ]

- },

- "layer2": {

- "block_name": "convbn",

- "num_blocks": 2,

- "block_specs": [

- [32, 96, 3, 2],

- [96, 64, 1, 1]

- ]

- },

- "layer3": {

- "block_name": "uib",

- "num_blocks": 6,

- "block_specs": [

- [64, 96, 5, 5, True, 2, 3],

- [96, 96, 0, 3, True, 1, 2],

- [96, 96, 0, 3, True, 1, 2],

- [96, 96, 0, 3, True, 1, 2],

- [96, 96, 0, 3, True, 1, 2],

- [96, 96, 3, 0, True, 1, 4],

- ]

- },

- "layer4": {

- "block_name": "uib",

- "num_blocks": 6,

- "block_specs": [

- [96, 128, 3, 3, True, 2, 6],

- [128, 128, 5, 5, True, 1, 4],

- [128, 128, 0, 5, True, 1, 4],

- [128, 128, 0, 5, True, 1, 3],

- [128, 128, 0, 3, True, 1, 4],

- [128, 128, 0, 3, True, 1, 4],

- ]

- },

- "layer5": {

- "block_name": "convbn",

- "num_blocks": 2,

- "block_specs": [

- [128, 960, 1, 1],

- [960, 1280, 1, 1]

- ]

- }

- }

- MNV4ConvMedium_BLOCK_SPECS = {

- "conv0": {

- "block_name": "convbn",

- "num_blocks": 1,

- "block_specs": [

- [3, 32, 3, 2]

- ]

- },

- "layer1": {

- "block_name": "fused_ib",

- "num_blocks": 1,

- "block_specs": [

- [32, 48, 2, 4.0, True]

- ]

- },

- "layer2": {

- "block_name": "uib",

- "num_blocks": 2,

- "block_specs": [

- [48, 80, 3, 5, True, 2, 4],

- [80, 80, 3, 3, True, 1, 2]

- ]

- },

- "layer3": {

- "block_name": "uib",

- "num_blocks": 8,

- "block_specs": [

- [80, 160, 3, 5, True, 2, 6],

- [160, 160, 3, 3, True, 1, 4],

- [160, 160, 3, 3, True, 1, 4],

- [160, 160, 3, 5, True, 1, 4],

- [160, 160, 3, 3, True, 1, 4],

- [160, 160, 3, 0, True, 1, 4],

- [160, 160, 0, 0, True, 1, 2],

- [160, 160, 3, 0, True, 1, 4]

- ]

- },

- "layer4": {

- "block_name": "uib",

- "num_blocks": 11,

- "block_specs": [

- [160, 256, 5, 5, True, 2, 6],

- [256, 256, 5, 5, True, 1, 4],

- [256, 256, 3, 5, True, 1, 4],

- [256, 256, 3, 5, True, 1, 4],

- [256, 256, 0, 0, True, 1, 4],

- [256, 256, 3, 0, True, 1, 4],

- [256, 256, 3, 5, True, 1, 2],

- [256, 256, 5, 5, True, 1, 4],

- [256, 256, 0, 0, True, 1, 4],

- [256, 256, 0, 0, True, 1, 4],

- [256, 256, 5, 0, True, 1, 2]

- ]

- },

- "layer5": {

- "block_name": "convbn",

- "num_blocks": 2,

- "block_specs": [

- [256, 960, 1, 1],

- [960, 1280, 1, 1]

- ]

- }

- }

- MNV4ConvLarge_BLOCK_SPECS = {

- "conv0": {

- "block_name": "convbn",

- "num_blocks": 1,

- "block_specs": [

- [3, 24, 3, 2]

- ]

- },

- "layer1": {

- "block_name": "fused_ib",

- "num_blocks": 1,

- "block_specs": [

- [24, 48, 2, 4.0, True]

- ]

- },

- "layer2": {

- "block_name": "uib",

- "num_blocks": 2,

- "block_specs": [

- [48, 96, 3, 5, True, 2, 4],

- [96, 96, 3, 3, True, 1, 4]

- ]

- },

- "layer3": {

- "block_name": "uib",

- "num_blocks": 11,

- "block_specs": [

- [96, 192, 3, 5, True, 2, 4],

- [192, 192, 3, 3, True, 1, 4],

- [192, 192, 3, 3, True, 1, 4],

- [192, 192, 3, 3, True, 1, 4],

- [192, 192, 3, 5, True, 1, 4],

- [192, 192, 5, 3, True, 1, 4],

- [192, 192, 5, 3, True, 1, 4],

- [192, 192, 5, 3, True, 1, 4],

- [192, 192, 5, 3, True, 1, 4],

- [192, 192, 5, 3, True, 1, 4],

- [192, 192, 3, 0, True, 1, 4]

- ]

- },

- "layer4": {

- "block_name": "uib",

- "num_blocks": 13,

- "block_specs": [

- [192, 512, 5, 5, True, 2, 4],

- [512, 512, 5, 5, True, 1, 4],

- [512, 512, 5, 5, True, 1, 4],

- [512, 512, 5, 5, True, 1, 4],

- [512, 512, 5, 0, True, 1, 4],

- [512, 512, 5, 3, True, 1, 4],

- [512, 512, 5, 0, True, 1, 4],

- [512, 512, 5, 0, True, 1, 4],

- [512, 512, 5, 3, True, 1, 4],

- [512, 512, 5, 5, True, 1, 4],

- [512, 512, 5, 0, True, 1, 4],

- [512, 512, 5, 0, True, 1, 4],

- [512, 512, 5, 0, True, 1, 4]

- ]

- },

- "layer5": {

- "block_name": "convbn",

- "num_blocks": 2,

- "block_specs": [

- [512, 960, 1, 1],

- [960, 1280, 1, 1]

- ]

- }

- }

- def mhsa(num_heads, key_dim, value_dim, px):

- if px == 24:

- kv_strides = 2

- elif px == 12:

- kv_strides = 1

- query_h_strides = 1

- query_w_strides = 1

- use_layer_scale = True

- use_multi_query = True

- use_residual = True

- return [

- num_heads, key_dim, value_dim, query_h_strides, query_w_strides, kv_strides,

- use_layer_scale, use_multi_query, use_residual

- ]

- MNV4HybridConvMedium_BLOCK_SPECS = {

- "conv0": {

- "block_name": "convbn",

- "num_blocks": 1,

- "block_specs": [

- [3, 32, 3, 2]

- ]

- },

- "layer1": {

- "block_name": "fused_ib",

- "num_blocks": 1,

- "block_specs": [

- [32, 48, 2, 4.0, True]

- ]

- },

- "layer2": {

- "block_name": "uib",

- "num_blocks": 2,

- "block_specs": [

- [48, 80, 3, 5, True, 2, 4],

- [80, 80, 3, 3, True, 1, 2]

- ]

- },

- "layer3": {

- "block_name": "uib",

- "num_blocks": 8,

- "block_specs": [

- [80, 160, 3, 5, True, 2, 6],

- [160, 160, 0, 0, True, 1, 2],

- [160, 160, 3, 3, True, 1, 4],

- [160, 160, 3, 5, True, 1, 4, mhsa(4, 64, 64, 24)],

- [160, 160, 3, 3, True, 1, 4, mhsa(4, 64, 64, 24)],

- [160, 160, 3, 0, True, 1, 4, mhsa(4, 64, 64, 24)],

- [160, 160, 3, 3, True, 1, 4, mhsa(4, 64, 64, 24)],

- [160, 160, 3, 0, True, 1, 4]

- ]

- },

- "layer4": {

- "block_name": "uib",

- "num_blocks": 12,

- "block_specs": [

- [160, 256, 5, 5, True, 2, 6],

- [256, 256, 5, 5, True, 1, 4],

- [256, 256, 3, 5, True, 1, 4],

- [256, 256, 3, 5, True, 1, 4],

- [256, 256, 0, 0, True, 1, 2],

- [256, 256, 3, 5, True, 1, 2],

- [256, 256, 0, 0, True, 1, 2],

- [256, 256, 0, 0, True, 1, 4, mhsa(4, 64, 64, 12)],

- [256, 256, 3, 0, True, 1, 4, mhsa(4, 64, 64, 12)],

- [256, 256, 5, 5, True, 1, 4, mhsa(4, 64, 64, 12)],

- [256, 256, 5, 0, True, 1, 4, mhsa(4, 64, 64, 12)],

- [256, 256, 5, 0, True, 1, 4]

- ]

- },

- "layer5": {

- "block_name": "convbn",

- "num_blocks": 2,

- "block_specs": [

- [256, 960, 1, 1],

- [960, 1280, 1, 1]

- ]

- }

- }

- MNV4HybridConvLarge_BLOCK_SPECS = {

- "conv0": {

- "block_name": "convbn",

- "num_blocks": 1,

- "block_specs": [

- [3, 24, 3, 2]

- ]

- },

- "layer1": {

- "block_name": "fused_ib",

- "num_blocks": 1,

- "block_specs": [

- [24, 48, 2, 4.0, True]

- ]

- },

- "layer2": {

- "block_name": "uib",

- "num_blocks": 2,

- "block_specs": [

- [48, 96, 3, 5, True, 2, 4],

- [96, 96, 3, 3, True, 1, 4]

- ]

- },

- "layer3": {

- "block_name": "uib",

- "num_blocks": 11,

- "block_specs": [

- [96, 192, 3, 5, True, 2, 4],

- [192, 192, 3, 3, True, 1, 4],

- [192, 192, 3, 3, True, 1, 4],

- [192, 192, 3, 3, True, 1, 4],

- [192, 192, 3, 5, True, 1, 4],

- [192, 192, 5, 3, True, 1, 4],

- [192, 192, 5, 3, True, 1, 4, mhsa(8, 48, 48, 24)],

- [192, 192, 5, 3, True, 1, 4, mhsa(8, 48, 48, 24)],

- [192, 192, 5, 3, True, 1, 4, mhsa(8, 48, 48, 24)],

- [192, 192, 5, 3, True, 1, 4, mhsa(8, 48, 48, 24)],

- [192, 192, 3, 0, True, 1, 4]

- ]

- },

- "layer4": {

- "block_name": "uib",

- "num_blocks": 14,

- "block_specs": [

- [192, 512, 5, 5, True, 2, 4],

- [512, 512, 5, 5, True, 1, 4],

- [512, 512, 5, 5, True, 1, 4],

- [512, 512, 5, 5, True, 1, 4],

- [512, 512, 5, 0, True, 1, 4],

- [512, 512, 5, 3, True, 1, 4],

- [512, 512, 5, 0, True, 1, 4],

- [512, 512, 5, 0, True, 1, 4],

- [512, 512, 5, 3, True, 1, 4],

- [512, 512, 5, 5, True, 1, 4, mhsa(8, 64, 64, 12)],

- [512, 512, 5, 0, True, 1, 4, mhsa(8, 64, 64, 12)],

- [512, 512, 5, 0, True, 1, 4, mhsa(8, 64, 64, 12)],

- [512, 512, 5, 0, True, 1, 4, mhsa(8, 64, 64, 12)],

- [512, 512, 5, 0, True, 1, 4]

- ]

- },

- "layer5": {

- "block_name": "convbn",

- "num_blocks": 2,

- "block_specs": [

- [512, 960, 1, 1],

- [960, 1280, 1, 1]

- ]

- }

- }

- MODEL_SPECS = {

- "MobileNetV4ConvSmall": MNV4ConvSmall_BLOCK_SPECS,

- "MobileNetV4ConvMedium": MNV4ConvMedium_BLOCK_SPECS,

- "MobileNetV4ConvLarge": MNV4ConvLarge_BLOCK_SPECS,

- "MobileNetV4HybridMedium": MNV4HybridConvMedium_BLOCK_SPECS,

- "MobileNetV4HybridLarge": MNV4HybridConvLarge_BLOCK_SPECS

- }

- def make_divisible(

- value: float,

- divisor: int,

- min_value: Optional[float] = None,

- round_down_protect: bool = True,

- ) -> int:

- """

- This function is copied from here

- "https://github.com/tensorflow/models/blob/master/official/vision/modeling/layers/nn_layers.py"

- This is to ensure that all layers have channels that are divisible by 8.

- Args:

- value: A `float` of original value.

- divisor: An `int` of the divisor that need to be checked upon.

- min_value: A `float` of minimum value threshold.

- round_down_protect: A `bool` indicating whether round down more than 10%

- will be allowed.

- Returns:

- The adjusted value in `int` that is divisible against divisor.

- """

- if min_value is None:

- min_value = divisor

- new_value = max(min_value, int(value + divisor / 2) // divisor * divisor)

- # Make sure that round down does not go down by more than 10%.

- if round_down_protect and new_value < 0.9 * value:

- new_value += divisor

- return int(new_value)

- def conv_2d(inp, oup, kernel_size=3, stride=1, groups=1, bias=False, norm=True, act=True):

- conv = nn.Sequential()

- padding = (kernel_size - 1) // 2

- conv.add_module('conv', nn.Conv2d(inp, oup, kernel_size, stride, padding, bias=bias, groups=groups))

- if norm:

- conv.add_module('BatchNorm2d', nn.BatchNorm2d(oup))

- if act:

- conv.add_module('Activation', nn.ReLU6())

- return conv

- class InvertedResidual(nn.Module):

- def __init__(self, inp, oup, stride, expand_ratio, act=False, squeeze_excitation=False):

- super(InvertedResidual, self).__init__()

- self.stride = stride

- assert stride in [1, 2]

- hidden_dim = int(round(inp * expand_ratio))

- self.block = nn.Sequential()

- if expand_ratio != 1:

- self.block.add_module('exp_1x1', conv_2d(inp, hidden_dim, kernel_size=3, stride=stride))

- if squeeze_excitation:

- self.block.add_module('conv_3x3',

- conv_2d(hidden_dim, hidden_dim, kernel_size=3, stride=stride, groups=hidden_dim))

- self.block.add_module('red_1x1', conv_2d(hidden_dim, oup, kernel_size=1, stride=1, act=act))

- self.use_res_connect = self.stride == 1 and inp == oup

- def forward(self, x):

- if self.use_res_connect:

- return x + self.block(x)

- else:

- return self.block(x)

- class UniversalInvertedBottleneckBlock(nn.Module):

- def __init__(self,

- inp,

- oup,

- start_dw_kernel_size,

- middle_dw_kernel_size,

- middle_dw_downsample,

- stride,

- expand_ratio

- ):

- """An inverted bottleneck block with optional depthwises.

- Referenced from here https://github.com/tensorflow/models/blob/master/official/vision/modeling/layers/nn_blocks.py

- """

- super().__init__()

- # Starting depthwise conv.

- self.start_dw_kernel_size = start_dw_kernel_size

- if self.start_dw_kernel_size:

- stride_ = stride if not middle_dw_downsample else 1

- self._start_dw_ = conv_2d(inp, inp, kernel_size=start_dw_kernel_size, stride=stride_, groups=inp, act=False)

- # Expansion with 1x1 convs.

- expand_filters = make_divisible(inp * expand_ratio, 8)

- self._expand_conv = conv_2d(inp, expand_filters, kernel_size=1)

- # Middle depthwise conv.

- self.middle_dw_kernel_size = middle_dw_kernel_size

- if self.middle_dw_kernel_size:

- stride_ = stride if middle_dw_downsample else 1

- self._middle_dw = conv_2d(expand_filters, expand_filters, kernel_size=middle_dw_kernel_size, stride=stride_,

- groups=expand_filters)

- # Projection with 1x1 convs.

- self._proj_conv = conv_2d(expand_filters, oup, kernel_size=1, stride=1, act=False)

- # Ending depthwise conv.

- # this not used

- # _end_dw_kernel_size = 0

- # self._end_dw = conv_2d(oup, oup, kernel_size=_end_dw_kernel_size, stride=stride, groups=inp, act=False)

- def forward(self, x):

- if self.start_dw_kernel_size:

- x = self._start_dw_(x)

- # print("_start_dw_", x.shape)

- x = self._expand_conv(x)

- # print("_expand_conv", x.shape)

- if self.middle_dw_kernel_size:

- x = self._middle_dw(x)

- # print("_middle_dw", x.shape)

- x = self._proj_conv(x)

- # print("_proj_conv", x.shape)

- return x

- class MultiQueryAttentionLayerWithDownSampling(nn.Module):

- def __init__(self, inp, num_heads, key_dim, value_dim, query_h_strides, query_w_strides, kv_strides,

- dw_kernel_size=3, dropout=0.0):

- """Multi Query Attention with spatial downsampling.

- Referenced from here https://github.com/tensorflow/models/blob/master/official/vision/modeling/layers/nn_blocks.py

- 3 parameters are introduced for the spatial downsampling:

- 1. kv_strides: downsampling factor on Key and Values only.

- 2. query_h_strides: vertical strides on Query only.

- 3. query_w_strides: horizontal strides on Query only.

- This is an optimized version.

- 1. Projections in Attention is explict written out as 1x1 Conv2D.

- 2. Additional reshapes are introduced to bring a up to 3x speed up.

- """

- super().__init__()

- self.num_heads = num_heads

- self.key_dim = key_dim

- self.value_dim = value_dim

- self.query_h_strides = query_h_strides

- self.query_w_strides = query_w_strides

- self.kv_strides = kv_strides

- self.dw_kernel_size = dw_kernel_size

- self.dropout = dropout

- self.head_dim = key_dim // num_heads

- if self.query_h_strides > 1 or self.query_w_strides > 1:

- self._query_downsampling_norm = nn.BatchNorm2d(inp)

- self._query_proj = conv_2d(inp, num_heads * key_dim, 1, 1, norm=False, act=False)

- if self.kv_strides > 1:

- self._key_dw_conv = conv_2d(inp, inp, dw_kernel_size, kv_strides, groups=inp, norm=True, act=False)

- self._value_dw_conv = conv_2d(inp, inp, dw_kernel_size, kv_strides, groups=inp, norm=True, act=False)

- self._key_proj = conv_2d(inp, key_dim, 1, 1, norm=False, act=False)

- self._value_proj = conv_2d(inp, key_dim, 1, 1, norm=False, act=False)

- self._output_proj = conv_2d(num_heads * key_dim, inp, 1, 1, norm=False, act=False)

- self.dropout = nn.Dropout(p=dropout)

- def forward(self, x):

- batch_size, seq_length, _, _ = x.size()

- if self.query_h_strides > 1 or self.query_w_strides > 1:

- q = F.avg_pool2d(self.query_h_stride, self.query_w_stride)

- q = self._query_downsampling_norm(q)

- q = self._query_proj(q)

- else:

- q = self._query_proj(x)

- px = q.size(2)

- q = q.view(batch_size, self.num_heads, -1, self.key_dim) # [batch_size, num_heads, seq_length, key_dim]

- if self.kv_strides > 1:

- k = self._key_dw_conv(x)

- k = self._key_proj(k)

- v = self._value_dw_conv(x)

- v = self._value_proj(v)

- else:

- k = self._key_proj(x)

- v = self._value_proj(x)

- k = k.view(batch_size, self.key_dim, -1) # [batch_size, key_dim, seq_length]

- v = v.view(batch_size, -1, self.key_dim) # [batch_size, seq_length, key_dim]

- # calculate attn score

- attn_score = torch.matmul(q, k) / (self.head_dim ** 0.5)

- attn_score = self.dropout(attn_score)

- attn_score = F.softmax(attn_score, dim=-1)

- context = torch.matmul(attn_score, v)

- context = context.view(batch_size, self.num_heads * self.key_dim, px, px)

- output = self._output_proj(context)

- return output

- class MNV4LayerScale(nn.Module):

- def __init__(self, init_value):

- """LayerScale as introduced in CaiT: https://arxiv.org/abs/2103.17239

- Referenced from here https://github.com/tensorflow/models/blob/master/official/vision/modeling/layers/nn_blocks.py

- As used in MobileNetV4.

- Attributes:

- init_value (float): value to initialize the diagonal matrix of LayerScale.

- """

- super().__init__()

- self.init_value = init_value

- def forward(self, x):

- gamma = self.init_value * torch.ones(x.size(-1), dtype=x.dtype, device=x.device)

- return x * gamma

- class MultiHeadSelfAttentionBlock(nn.Module):

- def __init__(

- self,

- inp,

- num_heads,

- key_dim,

- value_dim,

- query_h_strides,

- query_w_strides,

- kv_strides,

- use_layer_scale,

- use_multi_query,

- use_residual=True

- ):

- super().__init__()

- self.query_h_strides = query_h_strides

- self.query_w_strides = query_w_strides

- self.kv_strides = kv_strides

- self.use_layer_scale = use_layer_scale

- self.use_multi_query = use_multi_query

- self.use_residual = use_residual

- self._input_norm = nn.BatchNorm2d(inp)

- if self.use_multi_query:

- self.multi_query_attention = MultiQueryAttentionLayerWithDownSampling(

- inp, num_heads, key_dim, value_dim, query_h_strides, query_w_strides, kv_strides

- )

- else:

- self.multi_head_attention = nn.MultiheadAttention(inp, num_heads, kdim=key_dim)

- if self.use_layer_scale:

- self.layer_scale_init_value = 1e-5

- self.layer_scale = MNV4LayerScale(self.layer_scale_init_value)

- def forward(self, x):

- # Not using CPE, skipped

- # input norm

- shortcut = x

- x = self._input_norm(x)

- # multi query

- if self.use_multi_query:

- x = self.multi_query_attention(x)

- else:

- x = self.multi_head_attention(x, x)

- # layer scale

- if self.use_layer_scale:

- x = self.layer_scale(x)

- # use residual

- if self.use_residual:

- x = x + shortcut

- return x

- def build_blocks(layer_spec, factor=0.25):

- if not layer_spec.get('block_name'):

- return nn.Sequential()

- block_names = layer_spec['block_name']

- layers = nn.Sequential()

- if block_names == "convbn":

- schema_ = ['inp', 'oup', 'kernel_size', 'stride']

- for i in range(layer_spec['num_blocks']):

- args = dict(zip(schema_, layer_spec['block_specs'][i]))

- if args['inp'] != 3:

- args['inp'] = int(args['inp'] * factor)

- args['oup'] = int(args['oup'] * factor)

- layers.add_module(f"convbn_{i}", conv_2d(**args))

- elif block_names == "uib":

- schema_ = ['inp', 'oup', 'start_dw_kernel_size', 'middle_dw_kernel_size', 'middle_dw_downsample', 'stride',

- 'expand_ratio', 'msha']

- for i in range(layer_spec['num_blocks']):

- args = dict(zip(schema_, layer_spec['block_specs'][i]))

- args['inp'] = int(args['inp'] * factor)

- args['oup'] = int(args['oup'] * factor)

- msha = args.pop("msha") if "msha" in args else 0

- layers.add_module(f"uib_{i}", UniversalInvertedBottleneckBlock(**args))

- if msha:

- msha_schema_ = [

- "inp", "num_heads", "key_dim", "value_dim", "query_h_strides", "query_w_strides", "kv_strides",

- "use_layer_scale", "use_multi_query", "use_residual"

- ]

- args['inp'] = int(args['inp'] * factor)

- args = dict(zip(msha_schema_, [args['oup']] + (msha)))

- layers.add_module(f"msha_{i}", MultiHeadSelfAttentionBlock(**args))

- elif block_names == "fused_ib":

- schema_ = ['inp', 'oup', 'stride', 'expand_ratio', 'act']

- for i in range(layer_spec['num_blocks']):

- args = dict(zip(schema_, layer_spec['block_specs'][i]))

- args['inp'] = int(args['inp'] * factor)

- args['oup'] = int(args['oup'] * factor)

- layers.add_module(f"fused_ib_{i}", InvertedResidual(**args))

- else:

- raise NotImplementedError

- return layers

- class MobileNetV4(nn.Module):

- def __init__(self, model, factor=0.25):

- # MobileNetV4ConvSmall MobileNetV4ConvMedium MobileNetV4ConvLarge

- # MobileNetV4HybridMedium MobileNetV4HybridLarge

- """Params to initiate MobilenNetV4

- Args:

- model : support 5 types of models as indicated in

- "https://github.com/tensorflow/models/blob/master/official/vision/modeling/backbones/mobilenet.py"

- """

- super().__init__()

- assert model in MODEL_SPECS.keys()

- self.model = model

- self.spec = MODEL_SPECS[self.model]

- # conv0

- self.conv0 = build_blocks(self.spec['conv0'], factor=factor)

- # layer1

- self.layer1 = build_blocks(self.spec['layer1'], factor=factor)

- # layer2

- self.layer2 = build_blocks(self.spec['layer2'], factor=factor)

- # layer3

- self.layer3 = build_blocks(self.spec['layer3'], factor=factor)

- # layer4

- self.layer4 = build_blocks(self.spec['layer4'], factor=factor)

- self.width_list = [i.size(1) for i in self.forward(torch.randn(1, 3, 640, 640))]

- def forward(self, x):

- x0 = self.conv0(x)

- x1 = self.layer1(x0)

- x2 = self.layer2(x1)

- x3 = self.layer3(x2)

- x4 = self.layer4(x3)

- return [x1, x2, x3, x4]

- def MobileNetV4ConvSmall(factor=0.5):

- model = MobileNetV4('MobileNetV4ConvSmall', factor=factor)

- return model

- def MobileNetV4ConvMedium(factor=0.5):

- model = MobileNetV4('MobileNetV4ConvMedium', factor=factor)

- return model

- def MobileNetV4ConvLarge(factor=0.5):

- model = MobileNetV4('MobileNetV4ConvLarge', factor=factor)

- return model

- def MobileNetV4HybridMedium(factor=0.5):

- model = MobileNetV4('MobileNetV4HybridMedium', factor=factor)

- return model

- def MobileNetV4HybridLarge(factor=0.5):

- model = MobileNetV4('MobileNetV4HybridLarge', factor=factor)

- return model

- if __name__ == "__main__":

- # Generating Sample image

- image_size = (1, 3, 640, 640)

- image = torch.rand(*image_size)

- # Model

- model = MobileNetV4HybridMedium()

- out = model(image)

- for i in range(len(out)):

- print(out[i].shape)

5.1 修改一

第一步还是建立文件,我们找到如下ultralytics/nn文件夹下建立一个目录名字呢就是'Addmodules'文件夹( 用群内的文件的话已经有了无需新建) !然后在其内部建立一个新的py文件将核心代码复制粘贴进去即可

5.2 修改二

第二步我们在该目录下创建一个新的py文件名字为'__init__.py'( 用群内的文件的话已经有了无需新建) ,然后在其内部导入我们的检测头如下图所示。

5.3 修改三

第三步我门中到如下文件'ultralytics/nn/tasks.py'进行导入和注册我们的模块( 用群内的文件的话已经有了无需重新导入直接开始第四步即可) !

从今天开始以后的教程就都统一成这个样子了,因为我默认大家用了我群内的文件来进行修改!!

5.4 修改四

添加如下两行代码!!!

5.5 修改五

找到七百多行大概把具体看图片,按照图片来修改就行,添加红框内的部分,注意没有()只是函数名。

- elif m in {自行添加对应的模型即可,下面都是一样的}:

- m = m(*args)

- c2 = m.width_list # 返回通道列表

- backbone = True

5.6 修改六

下面的两个红框内都是需要改动的。

- if isinstance(c2, list):

- m_ = m

- m_.backbone = True

- else:

- m_ = nn.Sequential(*(m(*args) for _ in range(n))) if n > 1 else m(*args) # module

- t = str(m)[8:-2].replace('__main__.', '') # module type

- m.np = sum(x.numel() for x in m_.parameters()) # number params

- m_.i, m_.f, m_.type = i + 4 if backbone else i, f, t # attach index, 'from' index, type

5.7 修改七

如下的也需要修改,全部按照我的来。

代码如下把原先的代码替换了即可。

- if verbose:

- LOGGER.info(f'{i:>3}{str(f):>20}{n_:>3}{m.np:10.0f} {t:<45}{str(args):<30}') # print

- save.extend(x % (i + 4 if backbone else i) for x in ([f] if isinstance(f, int) else f) if x != -1) # append to savelist

- layers.append(m_)

- if i == 0:

- ch = []

- if isinstance(c2, list):

- ch.extend(c2)

- if len(c2) != 5:

- ch.insert(0, 0)

- else:

- ch.append(c2)

5.8 修改八

修改八和前面的都不太一样,需要修改前向传播中的一个部分, 已经离开了parse_model方法了。

可以在图片中开代码行数,没有离开task.py文件都是同一个文件。 同时这个部分有好几个前向传播都很相似,大家不要看错了, 是70多行左右的!!!,同时我后面提供了代码,大家直接复制粘贴即可,有时间我针对这里会出一个视频。

代码如下->

- def _predict_once(self, x, profile=False, visualize=False, embed=None):

- """

- Perform a forward pass through the network.

- Args:

- x (torch.Tensor): The input tensor to the model.

- profile (bool): Print the computation time of each layer if True, defaults to False.

- visualize (bool): Save the feature maps of the model if True, defaults to False.

- embed (list, optional): A list of feature vectors/embeddings to return.

- Returns:

- (torch.Tensor): The last output of the model.

- """

- y, dt, embeddings = [], [], [] # outputs

- for m in self.model:

- if m.f != -1: # if not from previous layer

- x = y[m.f] if isinstance(m.f, int) else [x if j == -1 else y[j] for j in m.f] # from earlier layers

- if profile:

- self._profile_one_layer(m, x, dt)

- if hasattr(m, 'backbone'):

- x = m(x)

- if len(x) != 5: # 0 - 5

- x.insert(0, None)

- for index, i in enumerate(x):

- if index in self.save:

- y.append(i)

- else:

- y.append(None)

- x = x[-1] # 最后一个输出传给下一层

- else:

- x = m(x) # run

- y.append(x if m.i in self.save else None) # save output

- if visualize:

- feature_visualization(x, m.type, m.i, save_dir=visualize)

- if embed and m.i in embed:

- embeddings.append(nn.functional.adaptive_avg_pool2d(x, (1, 1)).squeeze(-1).squeeze(-1)) # flatten

- if m.i == max(embed):

- return torch.unbind(torch.cat(embeddings, 1), dim=0)

- return x

到这里就完成了修改部分,但是这里面细节很多,大家千万要注意不要替换多余的代码,导致报错,也不要拉下任何一部,都会导致运行失败,而且报错很难排查!!!很难排查!!!

六、融合改进yaml文件

训练信息:YOLO11-BiFPN-MobileNetV4 summary: 436 layers, 1,999,494 parameters, 1,999,478 gradients, 5.1 GFLOPs

- # Ultralytics YOLO 🚀, AGPL-3.0 license

- # YOLO11 object detection model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/detect

- # Parameters

- nc: 80 # number of classes

- scales: # model compound scaling constants, i.e. 'model=yolo11n.yaml' will call yolo11.yaml with scale 'n'

- # [depth, width, max_channels]

- n: [0.50, 0.25, 1024] # summary: 319 layers, 2624080 parameters, 2624064 gradients, 6.6 GFLOPs

- s: [0.50, 0.50, 1024] # summary: 319 layers, 9458752 parameters, 9458736 gradients, 21.7 GFLOPs

- m: [0.50, 1.00, 512] # summary: 409 layers, 20114688 parameters, 20114672 gradients, 68.5 GFLOPs

- l: [1.00, 1.00, 512] # summary: 631 layers, 25372160 parameters, 25372144 gradients, 87.6 GFLOPs

- x: [1.00, 1.50, 512] # summary: 631 layers, 56966176 parameters, 56966160 gradients, 196.0 GFLOPs

- # 支持版本有__all__ = ['MobileNetV4ConvLarge', 'MobileNetV4ConvSmall', 'MobileNetV4ConvMedium', 'MobileNetV4HybridMedium', 'MobileNetV4HybridLarge'] 5

- # YOLO11n backbone

- backbone:

- # [from, repeats, module, args]

- # 此处适合专栏里其它的主干可以替换其它任何一个.

- - [-1, 1, MobileNetV4ConvSmall, [0.50]] # 0-4 P1/2 这里是四层大家不要被yaml文件限制住了思维,不会画图进群看视频.

- # 上面的参数0.75支持大家自由根据v11的 0.25 0.50 0.75 1.00 1.5 进行放缩我只尝试过这几个如果你设置一些比较特殊的数字可能会报错.

- # 所以本文有至少 5 x 5 = 25种组合方式.

- - [-1, 1, SPPF, [1024, 5]] # 5

- - [-1, 2, C2PSA, [1024]] # 6

- # YOLO11n head

- head:

- - [2, 1, Conv, [256]] # 7-P3/8

- - [3, 1, Conv, [256]] # 8-P4/16

- - [6, 1, Conv, [256]] # 9-P5/32

- - [-1, 1, nn.Upsample, [None, 2, 'nearest']] # 10 P5->P4

- - [[-1, 8], 1, Bi_FPN, []] # 11

- - [-1, 3, C2f, [256]] # 12-P4/16

- - [-1, 1, nn.Upsample, [None, 2, 'nearest']] # 13 P4->P3

- - [[-1, 7], 1, Bi_FPN, []] # 14

- - [-1, 3, C2f, [256]] # 15-P3/8

- - [1, 1, Conv, [256, 3, 2]] # 16 P2->P3

- - [[-1, 7, 15], 1, Bi_FPN, []] # 17

- - [-1, 3, C2f, [256]] # 18-P3/8

- - [-1, 1, Conv, [256, 3, 2]] # 19 P3->P4

- - [[-1, 8, 12], 1, Bi_FPN, []] # 20

- - [-1, 3, C2f, [512]] # 21-P4/16

- - [-1, 1, Conv, [256, 3, 2]] # 22 P4->P5

- - [[-1, 9], 1, Bi_FPN, []] # 23

- - [-1, 3, C2f, [1024]] # 24-P5/32

- - [[18, 21, 24], 1, Detect, [nc]] # Detect(P3, P4, P5)

七、本文总结

到此本文的正式分享内容就结束了,在这里给大家推荐我的YOLOv11改进有效涨点专栏,本专栏目前为新开的平均质量分98分,后期我会根据各种最新的前沿顶会进行论文复现,也会对一些老的改进机制进行补充,目前本专栏免费阅读(暂时,大家尽早关注不迷路~),如果大家觉得本文帮助到你了,订阅本专栏,关注后续更多的更新~