1. Introduction

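This post introduces ModulatedDeformConv as a replacement for the downsampling convolutions in our model, together with a second variant that embeds it in the C3k2 block. The core idea is to add learnable spatial offsets so that the receptive field adjusts dynamically, which improves a convolutional neural network's ability to adapt to geometric transformations in the image. Unlike an ordinary Conv, this deformable Conv improves detection accuracy mainly by learning where to sample during downsampling. In the model summaries below, every variant also has fewer layers than the baseline (313 to 315 versus 319), and the downsampling replacement lowers the reported computation from 6.4 to 5.4 GFLOPs, although the parameter count rises slightly. Few mechanisms reduce the layer count, so I recommend trying this one on your own dataset.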


| Model | Layers | Parameters | Gradients | GFLOPs |
|---|---|---|---|---|
| Training info 1: YOLO11-C3k2-MDConv-1 | 315 | 2,616,209 | 2,616,193 | 6.4 |
| Training info 2: YOLO11-C3k2-MDConv-2 | 314 | 2,668,612 | 2,668,596 | 6.4 |
| Training info 3: YOLO11-MDConv | 313 | 2,718,804 | 2,718,788 | 5.4 |
| Baseline: YOLO11 | 319 | 2,591,010 | 2,590,994 | 6.4 |

2. How It Works

Official paper: click here to open the paper

Official code: click here to open the code

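The main ideas of deformable convolution can be summarized as follows:

1. Learnable offsets: A standard convolution samples the input feature map on a fixed regular grid; a 3×3 kernel, for example, always samples at the same predefined positions. Deformable convolution adds learnable 2D offsets so that each sampling point can shift adaptively according to the input features. The kernel's sampling positions are therefore no longer fixed and can follow the image content.

2. Stronger modeling of geometric transformations: With these offsets, deformable convolution dynamically adjusts the kernel's receptive field to fit objects of different scales, shapes, and poses. This flexibility lets the network capture complex geometric changes such as deformation, rotation, and scaling, and so improves its feature representation of the target object.

3. Adaptive spatial transformation: The offsets are learned from the preceding feature map by an additional convolution layer and adapt to local features. The adjustment is local, dense, and adaptive with respect to the input features, and it needs no extra supervision signal. Deformable convolution can therefore handle complex geometric transformations flexibly while staying lightweight.

4. Easy integration: Deformable convolution can directly replace standard convolution layers in an existing CNN and is trained end to end with standard backpropagation. It is therefore easy to integrate into existing deep learning models and can improve performance, particularly in vision tasks such as object detection and semantic segmentation.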
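The four points above can be written compactly as the modulated deformable convolution formula. The notation is my own shorthand rather than something taken from this post: $K$ is the number of sampling points (9 for a 3×3 kernel), $w_k$ the kernel weight, $p_k$ the fixed grid offset, $\Delta p_k$ the learned offset, and $\Delta m_k \in [0, 1]$ the learned modulation scalar.

```latex
y(p) = \sum_{k=1}^{K} w_k \cdot x\bigl(p + p_k + \Delta p_k\bigr) \cdot \Delta m_k
```

Because $p + p_k + \Delta p_k$ is generally fractional, $x$ is evaluated there by bilinear interpolation. With $\Delta p_k = 0$ and $\Delta m_k = 1$ for every $k$, the sum reduces to an ordinary convolution.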

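In short, deformable convolution learns variable spatial offsets and uses them to adjust the kernel's sampling positions dynamically, which makes a convolutional network more adaptive to geometric changes in the image and better at modeling complex vision tasks.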
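To make the sampling idea concrete, here is a minimal pure-Python sketch that computes one output value of a 3×3 modulated deformable convolution on a single-channel image. It is only an illustration under my own assumptions (the helper names `bilinear` and `deform_conv_at` are hypothetical, and out-of-range pixels are treated as zero); it is not the mmcv kernel used in the core code.

```python
import math


def bilinear(img, y, x):
    """Bilinearly sample a 2D list at fractional (y, x); pixels outside the image count as 0."""
    h, w = len(img), len(img[0])
    y0, x0 = math.floor(y), math.floor(x)
    dy, dx = y - y0, x - x0

    def px(r, c):
        return img[r][c] if 0 <= r < h and 0 <= c < w else 0.0

    return (px(y0, x0) * (1 - dy) * (1 - dx) + px(y0, x0 + 1) * (1 - dy) * dx
            + px(y0 + 1, x0) * dy * (1 - dx) + px(y0 + 1, x0 + 1) * dy * dx)


def deform_conv_at(img, weight, offsets, masks, cy, cx):
    """One output value of a 3x3 modulated deformable convolution centred at (cy, cx)."""
    out, k = 0.0, 0
    for i in (-1, 0, 1):
        for j in (-1, 0, 1):
            dy, dx = offsets[k]  # learned offset of sampling point k
            sample = bilinear(img, cy + i + dy, cx + j + dx)
            out += weight[i + 1][j + 1] * sample * masks[k]  # mask scales the contribution
            k += 1
    return out


# 4x4 ramp image, all-ones kernel
img = [[float(r * 4 + c) for c in range(4)] for r in range(4)]
weight = [[1.0] * 3 for _ in range(3)]
ones = [1.0] * 9

# Zero offsets and unit masks behave like a plain 3x3 convolution.
plain = deform_conv_at(img, weight, [(0.0, 0.0)] * 9, ones, 1, 1)
# Shifting every sampling point half a pixel to the right changes what is sampled.
shifted = deform_conv_at(img, weight, [(0.0, 0.5)] * 9, ones, 1, 1)
print(plain, shifted)
```

In the real layer the offsets and masks are not constants: a small convolution predicts them per output position from the input feature map.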

3. Core Code

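See Section 4 for how to use the core code. Note that this method requires the mmcv-full package, a third-party library that is notoriously hard to install.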
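Since the core code imports the compiled extension `mmcv._ext` at module load time, it can save some debugging to check for it first. The following is a small sketch using only the standard library; the helper name `mmcv_ops_available` is my own and is not part of mmcv.

```python
import importlib.util


def mmcv_ops_available() -> bool:
    """Return True if the compiled `mmcv._ext` extension can be located."""
    try:
        return importlib.util.find_spec("mmcv._ext") is not None
    except (ImportError, ValueError):
        # Raised when the parent package `mmcv` is missing or broken.
        return False


if mmcv_ops_available():
    print("mmcv._ext found: the compiled deformable-conv ops should be importable.")
else:
    print("mmcv._ext not found: install a full mmcv build that matches your PyTorch/CUDA versions.")
```

If the check fails, importing the core code will fail at the `ext_module = ...` line, so it is worth fixing the installation before touching the Ultralytics files.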

```python
import importlib
import math
from typing import Optional, Tuple, Union

import torch
import torch.nn as nn
from torch.autograd import Function
from torch.autograd.function import once_differentiable
from torch.nn.modules.utils import _pair, _single

# ModulatedDeformConv2dPack is exported as well because the third YAML config uses it directly.
__all__ = ['C3k2_MDConv1', 'C3k2_MDConv2', 'ModulatedDeformConv2dPack']

# Compiled mmcv operators (requires mmcv-full).
ext_module = importlib.import_module('mmcv._ext')


class ModulatedDeformConv2dFunction(Function):

    @staticmethod
    def symbolic(g, input, offset, mask, weight, bias, stride, padding,
                 dilation, groups, deform_groups):
        input_tensors = [input, offset, mask, weight]
        if bias is not None:
            input_tensors.append(bias)
        return g.op(
            'mmcv::MMCVModulatedDeformConv2d',
            *input_tensors,
            stride_i=stride,
            padding_i=padding,
            dilation_i=dilation,
            groups_i=groups,
            deform_groups_i=deform_groups)

    @staticmethod
    def forward(ctx,
                input: torch.Tensor,
                offset: torch.Tensor,
                mask: torch.Tensor,
                weight: nn.Parameter,
                bias: Optional[nn.Parameter] = None,
                stride: int = 1,
                padding: int = 0,
                dilation: int = 1,
                groups: int = 1,
                deform_groups: int = 1) -> torch.Tensor:
        if input is not None and input.dim() != 4:
            raise ValueError(
                f'Expected 4D tensor as input, got {input.dim()}D tensor '
                'instead.')
        ctx.stride = _pair(stride)
        ctx.padding = _pair(padding)
        ctx.dilation = _pair(dilation)
        ctx.groups = groups
        ctx.deform_groups = deform_groups
        ctx.with_bias = bias is not None
        if not ctx.with_bias:
            bias = input.new_empty(0)  # fake tensor
        # When pytorch version >= 1.6.0, amp is adopted for fp16 mode;
        # amp won't cast the type of model (float32), but "offset" is cast
        # to float16 by nn.Conv2d automatically, leading to the type
        # mismatch with input (when it is float32) or weight.
        # The flag for whether to use fp16 or amp is the type of "offset",
        # we cast weight and input to temporarily support fp16 and amp
        # whatever the pytorch version is.
        input = input.type_as(offset)
        weight = weight.type_as(input)
        bias = bias.type_as(input)  # type: ignore
        ctx.save_for_backward(input, offset, mask, weight, bias)
        output = input.new_empty(
            ModulatedDeformConv2dFunction._output_size(ctx, input, weight))
        ctx._bufs = [input.new_empty(0), input.new_empty(0)]
        ext_module.modulated_deform_conv_forward(
            input,
            weight,
            bias,
            ctx._bufs[0],
            offset,
            mask,
            output,
            ctx._bufs[1],
            kernel_h=weight.size(2),
            kernel_w=weight.size(3),
            stride_h=ctx.stride[0],
            stride_w=ctx.stride[1],
            pad_h=ctx.padding[0],
            pad_w=ctx.padding[1],
            dilation_h=ctx.dilation[0],
            dilation_w=ctx.dilation[1],
            group=ctx.groups,
            deformable_group=ctx.deform_groups,
            with_bias=ctx.with_bias)
        return output

    @staticmethod
    @once_differentiable
    def backward(ctx, grad_output: torch.Tensor) -> tuple:
        input, offset, mask, weight, bias = ctx.saved_tensors
        grad_input = torch.zeros_like(input)
        grad_offset = torch.zeros_like(offset)
        grad_mask = torch.zeros_like(mask)
        grad_weight = torch.zeros_like(weight)
        grad_bias = torch.zeros_like(bias)
        grad_output = grad_output.contiguous()
        ext_module.modulated_deform_conv_backward(
            input,
            weight,
            bias,
            ctx._bufs[0],
            offset,
            mask,
            ctx._bufs[1],
            grad_input,
            grad_weight,
            grad_bias,
            grad_offset,
            grad_mask,
            grad_output,
            kernel_h=weight.size(2),
            kernel_w=weight.size(3),
            stride_h=ctx.stride[0],
            stride_w=ctx.stride[1],
            pad_h=ctx.padding[0],
            pad_w=ctx.padding[1],
            dilation_h=ctx.dilation[0],
            dilation_w=ctx.dilation[1],
            group=ctx.groups,
            deformable_group=ctx.deform_groups,
            with_bias=ctx.with_bias)
        if not ctx.with_bias:
            grad_bias = None

        # One gradient slot per forward argument; the five hyperparameters get None.
        return (grad_input, grad_offset, grad_mask, grad_weight, grad_bias,
                None, None, None, None, None)

    @staticmethod
    def _output_size(ctx, input, weight):
        channels = weight.size(0)
        output_size = (input.size(0), channels)
        for d in range(input.dim() - 2):
            in_size = input.size(d + 2)
            pad = ctx.padding[d]
            kernel = ctx.dilation[d] * (weight.size(d + 2) - 1) + 1
            stride_ = ctx.stride[d]
            output_size += ((in_size + (2 * pad) - kernel) // stride_ + 1, )
        if not all(map(lambda s: s > 0, output_size)):
            raise ValueError(
                'convolution input is too small (output would be ' +
                'x'.join(map(str, output_size)) + ')')
        return output_size


modulated_deform_conv2d = ModulatedDeformConv2dFunction.apply


class ModulatedDeformConv2d(nn.Module):

    def __init__(self,
                 in_channels: int,
                 out_channels: int,
                 kernel_size: Union[int, Tuple[int]],
                 stride: int = 1,
                 padding: int = 1,
                 dilation: int = 1,
                 groups: int = 1,
                 deform_groups: int = 1,
                 bias: Union[bool, str] = True):
        super().__init__()
        self.in_channels = in_channels
        self.out_channels = out_channels
        self.kernel_size = _pair(kernel_size)
        self.stride = _pair(stride)
        self.padding = _pair(padding)
        self.dilation = _pair(dilation)
        self.groups = groups
        self.deform_groups = deform_groups
        # enable compatibility with nn.Conv2d
        self.transposed = False
        self.output_padding = _single(0)

        self.weight = nn.Parameter(
            torch.Tensor(out_channels, in_channels // groups,
                         *self.kernel_size))
        if bias:
            self.bias = nn.Parameter(torch.Tensor(out_channels))
        else:
            self.register_parameter('bias', None)
        self.init_weights()

    def init_weights(self):
        n = self.in_channels
        for k in self.kernel_size:
            n *= k
        stdv = 1. / math.sqrt(n)
        self.weight.data.uniform_(-stdv, stdv)
        if self.bias is not None:
            self.bias.data.zero_()

    def forward(self, x: torch.Tensor, offset: torch.Tensor,
                mask: torch.Tensor) -> torch.Tensor:
        return modulated_deform_conv2d(x, offset, mask, self.weight, self.bias,
                                       self.stride, self.padding,
                                       self.dilation, self.groups,
                                       self.deform_groups)


class ModulatedDeformConv2dPack(ModulatedDeformConv2d):
    """A ModulatedDeformable Conv Encapsulation that acts as normal Conv
    layers.

    Args:
        in_channels (int): Same as nn.Conv2d.
        out_channels (int): Same as nn.Conv2d.
        kernel_size (int or tuple[int]): Same as nn.Conv2d.
        stride (int): Same as nn.Conv2d, while tuple is not supported.
        padding (int): Same as nn.Conv2d, while tuple is not supported.
        dilation (int): Same as nn.Conv2d, while tuple is not supported.
        groups (int): Same as nn.Conv2d.
        bias (bool or str): If specified as `auto`, it will be decided by the
            norm_cfg. Bias will be set as True if norm_cfg is None, otherwise
            False.
    """

    _version = 2

    def __init__(self, *args, **kwargs):
        super().__init__(*args, **kwargs)
        # Predicts, per sampling point, 2 offset values and 1 mask value.
        self.conv_offset = nn.Conv2d(
            self.in_channels,
            self.deform_groups * 3 * self.kernel_size[0] * self.kernel_size[1],
            kernel_size=self.kernel_size,
            stride=self.stride,
            padding=self.padding,
            dilation=self.dilation,
            bias=True)
        self.init_weights()

    def init_weights(self) -> None:
        super().init_weights()
        if hasattr(self, 'conv_offset'):
            self.conv_offset.weight.data.zero_()
            self.conv_offset.bias.data.zero_()

    def forward(self, x: torch.Tensor) -> torch.Tensor:  # type: ignore
        out = self.conv_offset(x)
        o1, o2, mask = torch.chunk(out, 3, dim=1)
        offset = torch.cat((o1, o2), dim=1)
        mask = torch.sigmoid(mask)
        return modulated_deform_conv2d(x, offset, mask, self.weight, self.bias,
                                       self.stride, self.padding,
                                       self.dilation, self.groups,
                                       self.deform_groups)

    def _load_from_state_dict(self, state_dict, prefix, local_metadata, strict,
                              missing_keys, unexpected_keys, error_msgs):
        version = local_metadata.get('version', None)

        if version is None or version < 2:
            # the key is different in early versions
            # In version < 2, ModulatedDeformConvPack
            # loads previous benchmark models.
            if (prefix + 'conv_offset.weight' not in state_dict
                    and prefix[:-1] + '_offset.weight' in state_dict):
                state_dict[prefix + 'conv_offset.weight'] = state_dict.pop(
                    prefix[:-1] + '_offset.weight')
            if (prefix + 'conv_offset.bias' not in state_dict
                    and prefix[:-1] + '_offset.bias' in state_dict):
                state_dict[prefix +
                           'conv_offset.bias'] = state_dict.pop(prefix[:-1] +
                                                                '_offset.bias')

        super()._load_from_state_dict(state_dict, prefix, local_metadata,
                                      strict, missing_keys, unexpected_keys,
                                      error_msgs)


def autopad(k, p=None, d=1):  # kernel, padding, dilation
    """Pad to 'same' shape outputs."""
    if d > 1:
        k = d * (k - 1) + 1 if isinstance(k, int) else [d * (x - 1) + 1 for x in k]  # actual kernel-size
    if p is None:
        p = k // 2 if isinstance(k, int) else [x // 2 for x in k]  # auto-pad
    return p


class Conv(nn.Module):
    """Standard convolution with args(ch_in, ch_out, kernel, stride, padding, groups, dilation, activation)."""

    default_act = nn.SiLU()  # default activation

    def __init__(self, c1, c2, k=1, s=1, p=None, g=1, d=1, act=True):
        """Initialize Conv layer with given arguments including activation."""
        super().__init__()
        self.conv = nn.Conv2d(c1, c2, k, s, autopad(k, p, d), groups=g, dilation=d, bias=False)
        self.bn = nn.BatchNorm2d(c2)
        self.act = self.default_act if act is True else act if isinstance(act, nn.Module) else nn.Identity()

    def forward(self, x):
        """Apply convolution, batch normalization and activation to input tensor."""
        return self.act(self.bn(self.conv(x)))

    def forward_fuse(self, x):
        """Apply convolution and activation only (used once batch normalization has been fused)."""
        return self.act(self.conv(x))


class Bottleneck_MDConv(nn.Module):
    # Standard bottleneck with DCN
    def __init__(self, c1, c2, shortcut=True, g=1, k=(3, 3), e=0.5):  # ch_in, ch_out, shortcut, groups, kernels, expand
        super().__init__()
        c_ = int(c2 * e)  # hidden channels
        self.cv1 = Conv(c1, c_, k[0], 1)
        self.cv2 = ModulatedDeformConv2dPack(c_, c2, 3)
        self.add = shortcut and c1 == c2

    def forward(self, x):
        return x + self.cv2(self.cv1(x)) if self.add else self.cv2(self.cv1(x))


class Bottleneck(nn.Module):
    """Standard bottleneck."""

    def __init__(self, c1, c2, shortcut=True, g=1, k=(3, 3), e=0.5):
        """Initializes a standard bottleneck module with optional shortcut connection and configurable parameters."""
        super().__init__()
        c_ = int(c2 * e)  # hidden channels
        self.cv1 = Conv(c1, c_, k[0], 1)
        self.cv2 = Conv(c_, c2, k[1], 1, g=g)
        self.add = shortcut and c1 == c2

    def forward(self, x):
        """Apply the two convolutions, adding the input back when the shortcut is enabled."""
        return x + self.cv2(self.cv1(x)) if self.add else self.cv2(self.cv1(x))


class C2f(nn.Module):
    """Faster Implementation of CSP Bottleneck with 2 convolutions."""

    def __init__(self, c1, c2, n=1, shortcut=False, g=1, e=0.5):
        """Initializes a CSP bottleneck with 2 convolutions and n Bottleneck blocks for faster processing."""
        super().__init__()
        self.c = int(c2 * e)  # hidden channels
        self.cv1 = Conv(c1, 2 * self.c, 1, 1)
        self.cv2 = Conv((2 + n) * self.c, c2, 1)  # optional act=FReLU(c2)
        self.m = nn.ModuleList(Bottleneck(self.c, self.c, shortcut, g, k=((3, 3), (3, 3)), e=1.0) for _ in range(n))

    def forward(self, x):
        """Forward pass through C2f layer."""
        y = list(self.cv1(x).chunk(2, 1))
        y.extend(m(y[-1]) for m in self.m)
        return self.cv2(torch.cat(y, 1))

    def forward_split(self, x):
        """Forward pass using split() instead of chunk()."""
        y = list(self.cv1(x).split((self.c, self.c), 1))
        y.extend(m(y[-1]) for m in self.m)
        return self.cv2(torch.cat(y, 1))


class C3(nn.Module):
    """CSP Bottleneck with 3 convolutions."""

    def __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5):
        """Initialize the CSP Bottleneck with given channels, number, shortcut, groups, and expansion values."""
        super().__init__()
        c_ = int(c2 * e)  # hidden channels
        self.cv1 = Conv(c1, c_, 1, 1)
        self.cv2 = Conv(c1, c_, 1, 1)
        self.cv3 = Conv(2 * c_, c2, 1)  # optional act=FReLU(c2)
        self.m = nn.Sequential(*(Bottleneck(c_, c_, shortcut, g, k=((1, 1), (3, 3)), e=1.0) for _ in range(n)))

    def forward(self, x):
        """Forward pass through the CSP bottleneck with 3 convolutions."""
        return self.cv3(torch.cat((self.m(self.cv1(x)), self.cv2(x)), 1))


class C3k(C3):
    """C3k is a CSP bottleneck module with customizable kernel sizes for feature extraction in neural networks."""

    def __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5, k=3):
        """Initializes the C3k module with specified channels, number of layers, and configurations."""
        super().__init__(c1, c2, n, shortcut, g, e)
        c_ = int(c2 * e)  # hidden channels
        # self.m = nn.Sequential(*(RepBottleneck(c_, c_, shortcut, g, k=(k, k), e=1.0) for _ in range(n)))
        self.m = nn.Sequential(*(Bottleneck(c_, c_, shortcut, g, k=(k, k), e=1.0) for _ in range(n)))


class C3kMDConv(C3):
    """C3k variant whose inner blocks are Bottleneck_MDConv instead of Bottleneck."""

    def __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5, k=3):
        """Initializes the C3kMDConv module with specified channels, number of layers, and configurations."""
        super().__init__(c1, c2, n, shortcut, g, e)
        c_ = int(c2 * e)  # hidden channels
        # self.m = nn.Sequential(*(RepBottleneck(c_, c_, shortcut, g, k=(k, k), e=1.0) for _ in range(n)))
        self.m = nn.Sequential(*(Bottleneck_MDConv(c_, c_, shortcut, g, k=(k, k), e=1.0) for _ in range(n)))


class C3k2_MDConv1(C2f):
    """C3k2 variant that replaces the plain Bottleneck with Bottleneck_MDConv (the C3k branch is unchanged)."""

    def __init__(self, c1, c2, n=1, c3k=False, e=0.5, g=1, shortcut=True):
        """Initializes the C3k2 module, a faster CSP Bottleneck with 2 convolutions and optional C3k blocks."""
        super().__init__(c1, c2, n, shortcut, g, e)
        self.m = nn.ModuleList(
            C3k(self.c, self.c, 2, shortcut, g) if c3k else Bottleneck_MDConv(self.c, self.c, shortcut, g)
            for _ in range(n)
        )


class C3k2_MDConv2(C2f):
    """C3k2 variant that replaces the Bottleneck inside C3k with Bottleneck_MDConv (the plain branch is unchanged)."""

    def __init__(self, c1, c2, n=1, c3k=False, e=0.5, g=1, shortcut=True):
        """Initializes the C3k2 module, a faster CSP Bottleneck with 2 convolutions and optional C3k blocks."""
        super().__init__(c1, c2, n, shortcut, g, e)
        self.m = nn.ModuleList(
            C3kMDConv(self.c, self.c, 2, shortcut, g) if c3k else Bottleneck(self.c, self.c, shortcut, g)
            for _ in range(n)
        )


if __name__ == '__main__':
    # Quick shape check: stride-2 deformable conv on a 16x16 feature map.
    x1 = torch.randn(1, 32, 16, 16)
    model = ModulatedDeformConv2dPack(32, 16, 3, 2, 1)
    x = model(x1)
    print(x.shape)
```
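The shapes and sizes in the core code can be checked by hand without installing mmcv. The sketch below repeats the arithmetic of `_output_size` and of the `conv_offset` channel count in plain Python; the three helper functions are my own names, and the parameter count assumes `bias=True` and `groups=1`.

```python
def conv_out_size(size, kernel, stride, pad, dilation=1):
    """Output length along one spatial axis, using the same formula as `_output_size`."""
    effective = dilation * (kernel - 1) + 1
    return (size + 2 * pad - effective) // stride + 1


def offset_branch_channels(kernel, deform_groups=1):
    """Channels produced by `conv_offset`: 2 offsets + 1 mask per sampling point."""
    return deform_groups * 3 * kernel * kernel


def mdconv_pack_params(c_in, c_out, kernel, deform_groups=1):
    """Parameter count of ModulatedDeformConv2dPack (bias=True, groups=1)."""
    main = c_out * c_in * kernel * kernel + c_out           # deformable conv weight + bias
    off_ch = offset_branch_channels(kernel, deform_groups)
    offset = off_ch * c_in * kernel * kernel + off_ch       # conv_offset weight + bias
    return main + offset


# Values for the self-test at the end of the core code:
# ModulatedDeformConv2dPack(32, 16, 3, 2, 1) applied to a 1x32x16x16 input.
print(conv_out_size(16, kernel=3, stride=2, pad=1))  # 8, so the output is 1x16x8x8
print(offset_branch_channels(3))                     # 27 = 18 offset + 9 mask channels
print(mdconv_pack_params(32, 16, 3))                 # 12427
```

The offset branch accounts for most of the parameters in this small example (7,803 of 12,427), which fits the slightly higher parameter counts reported for the modified models.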

4. Adding the Mechanism Step by Step

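4.1 Modification 1

First, create the file. Under the ultralytics/nn folder, create a directory named 'Addmodules' (if you use the files shared in the group, it already exists and you do not need to create it). Then create a new .py file inside it and paste the core code into it.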

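4.2 Modification 2

Second, create a new .py file named '__init__.py' in the same directory (already present if you use the group files), and import our modules in it, as shown in the figure below.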

4.3 修改三

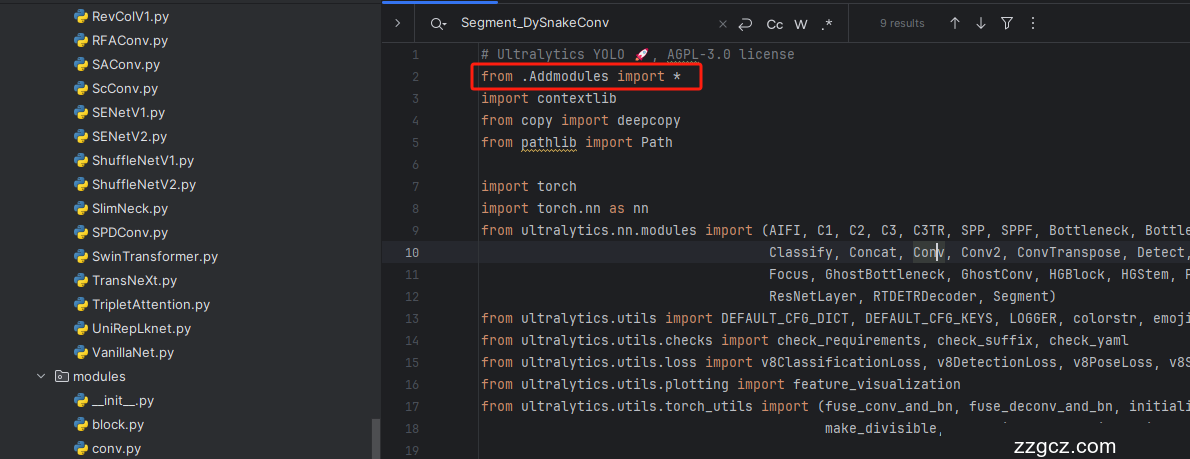

第三步我门中到如下文件'ultralytics/nn/tasks.py'进行导入和注册我们的模块( 用群内的文件的话已经有了无需重新导入直接开始第四步即可) !

4.4 Modification 4

Add the new modules in parse_model in the same way as in my version.
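To see why the new modules need to be registered in parse_model, it helps to look at what that function does with one YAML row. The sketch below is my own simplified reading of the channel and repeat handling for C3k2-style blocks, not the actual Ultralytics code, which has more branches and differs between versions; `resolve_row` and its `repeat_inside` flag are hypothetical names.

```python
import math


def make_divisible(x, divisor=8):
    """Round x up to the nearest multiple of divisor."""
    return math.ceil(x / divisor) * divisor


def resolve_row(row, c_in, depth, width, max_channels, repeat_inside=True):
    """Turn one YAML row [from, repeats, module, args] into constructor arguments (simplified)."""
    _from, n, name, args = row
    n = max(round(n * depth), 1) if n > 1 else n                    # depth scaling
    c_out = make_divisible(min(args[0], max_channels) * width, 8)   # width scaling
    resolved = [c_in, c_out, *args[1:]]
    if repeat_inside:
        # C2f/C3k2-style blocks receive the repeat count as their third argument.
        resolved.insert(2, n)
    return name, resolved


# Row 2 of the first YAML file at scale 'n' (depth 0.50, width 0.25, max_channels 1024).
# The previous layer outputs make_divisible(128 * 0.25) = 32 channels.
name, args = resolve_row([-1, 2, "C3k2_MDConv1", [256, False, 0.25]],
                         c_in=32, depth=0.50, width=0.25, max_channels=1024)
print(name, args)  # C3k2_MDConv1 [32, 64, 1, False, 0.25]
```

The resolved list lines up with the constructor signature `C3k2_MDConv1(c1, c2, n, c3k, e)`. If a module is not registered in the corresponding branch, it would receive the raw YAML arguments instead, without the input channel count. A `ModulatedDeformConv2dPack` row such as `[128, 3, 2]` would go through the same channel handling but with `repeat_inside=False`.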

That completes the modifications. You can copy the YAML files below and run them.

5. Training

5.1 YAML file 1

Training info: YOLO11-C3k2-MDConv-1 summary: 315 layers, 2,616,209 parameters, 2,616,193 gradients, 6.4 GFLOPs

```yaml
# Ultralytics YOLO 🚀, AGPL-3.0 license
# YOLO11 object detection model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/detect

# Parameters
nc: 80 # number of classes
scales: # model compound scaling constants, i.e. 'model=yolo11n.yaml' will call yolo11.yaml with scale 'n'
  # [depth, width, max_channels]
  n: [0.50, 0.25, 1024] # summary: 319 layers, 2624080 parameters, 2624064 gradients, 6.6 GFLOPs
  s: [0.50, 0.50, 1024] # summary: 319 layers, 9458752 parameters, 9458736 gradients, 21.7 GFLOPs
  m: [0.50, 1.00, 512] # summary: 409 layers, 20114688 parameters, 20114672 gradients, 68.5 GFLOPs
  l: [1.00, 1.00, 512] # summary: 631 layers, 25372160 parameters, 25372144 gradients, 87.6 GFLOPs
  x: [1.00, 1.50, 512] # summary: 631 layers, 56966176 parameters, 56966160 gradients, 196.0 GFLOPs

# YOLO11n backbone
backbone:
  # [from, repeats, module, args]
  - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2
  - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4
  - [-1, 2, C3k2_MDConv1, [256, False, 0.25]]
  - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8
  - [-1, 2, C3k2_MDConv1, [512, False, 0.25]]
  - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16
  - [-1, 2, C3k2_MDConv1, [512, True]]
  - [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32
  - [-1, 2, C3k2_MDConv1, [1024, True]]
  - [-1, 1, SPPF, [1024, 5]] # 9
  - [-1, 2, C2PSA, [1024]] # 10

# YOLO11n head
head:
  - [-1, 1, nn.Upsample, [None, 2, "nearest"]]
  - [[-1, 6], 1, Concat, [1]] # cat backbone P4
  - [-1, 2, C3k2_MDConv1, [512, False]] # 13

  - [-1, 1, nn.Upsample, [None, 2, "nearest"]]
  - [[-1, 4], 1, Concat, [1]] # cat backbone P3
  - [-1, 2, C3k2_MDConv1, [256, False]] # 16 (P3/8-small)

  - [-1, 1, Conv, [256, 3, 2]]
  - [[-1, 13], 1, Concat, [1]] # cat head P4
  - [-1, 2, C3k2_MDConv1, [512, False]] # 19 (P4/16-medium)

  - [-1, 1, Conv, [512, 3, 2]]
  - [[-1, 10], 1, Concat, [1]] # cat head P5
  - [-1, 2, C3k2_MDConv1, [1024, True]] # 22 (P5/32-large)

  - [[16, 19, 22], 1, Detect, [nc]] # Detect(P3, P4, P5)
```

5.2 YAML file 2

Training info: YOLO11-C3k2-MDConv-2 summary: 314 layers, 2,668,612 parameters, 2,668,596 gradients, 6.4 GFLOPs

```yaml
# Ultralytics YOLO 🚀, AGPL-3.0 license
# YOLO11 object detection model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/detect

# Parameters
nc: 80 # number of classes
scales: # model compound scaling constants, i.e. 'model=yolo11n.yaml' will call yolo11.yaml with scale 'n'
  # [depth, width, max_channels]
  n: [0.50, 0.25, 1024] # summary: 319 layers, 2624080 parameters, 2624064 gradients, 6.6 GFLOPs
  s: [0.50, 0.50, 1024] # summary: 319 layers, 9458752 parameters, 9458736 gradients, 21.7 GFLOPs
  m: [0.50, 1.00, 512] # summary: 409 layers, 20114688 parameters, 20114672 gradients, 68.5 GFLOPs
  l: [1.00, 1.00, 512] # summary: 631 layers, 25372160 parameters, 25372144 gradients, 87.6 GFLOPs
  x: [1.00, 1.50, 512] # summary: 631 layers, 56966176 parameters, 56966160 gradients, 196.0 GFLOPs

# YOLO11n backbone
backbone:
  # [from, repeats, module, args]
  - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2
  - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4
  - [-1, 2, C3k2_MDConv2, [256, False, 0.25]]
  - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8
  - [-1, 2, C3k2_MDConv2, [512, False, 0.25]]
  - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16
  - [-1, 2, C3k2_MDConv2, [512, True]]
  - [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32
  - [-1, 2, C3k2_MDConv2, [1024, True]]
  - [-1, 1, SPPF, [1024, 5]] # 9
  - [-1, 2, C2PSA, [1024]] # 10

# YOLO11n head
head:
  - [-1, 1, nn.Upsample, [None, 2, "nearest"]]
  - [[-1, 6], 1, Concat, [1]] # cat backbone P4
  - [-1, 2, C3k2_MDConv2, [512, False]] # 13

  - [-1, 1, nn.Upsample, [None, 2, "nearest"]]
  - [[-1, 4], 1, Concat, [1]] # cat backbone P3
  - [-1, 2, C3k2_MDConv2, [256, False]] # 16 (P3/8-small)

  - [-1, 1, Conv, [256, 3, 2]]
  - [[-1, 13], 1, Concat, [1]] # cat head P4
  - [-1, 2, C3k2_MDConv2, [512, False]] # 19 (P4/16-medium)

  - [-1, 1, Conv, [512, 3, 2]]
  - [[-1, 10], 1, Concat, [1]] # cat head P5
  - [-1, 2, C3k2_MDConv2, [1024, True]] # 22 (P5/32-large)

  - [[16, 19, 22], 1, Detect, [nc]] # Detect(P3, P4, P5)
```

5.3 YAML file 3

Training info: YOLO11-MDConv summary: 313 layers, 2,718,804 parameters, 2,718,788 gradients, 5.4 GFLOPs

```yaml
# Ultralytics YOLO 🚀, AGPL-3.0 license
# YOLO11 object detection model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/detect

# Parameters
nc: 80 # number of classes
scales: # model compound scaling constants, i.e. 'model=yolo11n.yaml' will call yolo11.yaml with scale 'n'
  # [depth, width, max_channels]
  n: [0.50, 0.25, 1024] # summary: 319 layers, 2624080 parameters, 2624064 gradients, 6.6 GFLOPs
  s: [0.50, 0.50, 1024] # summary: 319 layers, 9458752 parameters, 9458736 gradients, 21.7 GFLOPs
  m: [0.50, 1.00, 512] # summary: 409 layers, 20114688 parameters, 20114672 gradients, 68.5 GFLOPs
  l: [1.00, 1.00, 512] # summary: 631 layers, 25372160 parameters, 25372144 gradients, 87.6 GFLOPs
  x: [1.00, 1.50, 512] # summary: 631 layers, 56966176 parameters, 56966160 gradients, 196.0 GFLOPs

# YOLO11n backbone
backbone:
  # [from, repeats, module, args]
  - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2
  - [-1, 1, ModulatedDeformConv2dPack, [128, 3, 2]] # 1-P2/4
  - [-1, 2, C3k2, [256, False, 0.25]]
  - [-1, 1, ModulatedDeformConv2dPack, [256, 3, 2]] # 3-P3/8
  - [-1, 2, C3k2, [512, False, 0.25]]
  - [-1, 1, ModulatedDeformConv2dPack, [512, 3, 2]] # 5-P4/16
  - [-1, 2, C3k2, [512, True]]
  - [-1, 1, ModulatedDeformConv2dPack, [1024, 3, 2]] # 7-P5/32
  - [-1, 2, C3k2, [1024, True]]
  - [-1, 1, SPPF, [1024, 5]] # 9
  - [-1, 2, C2PSA, [1024]] # 10

# YOLO11n head
head:
  - [-1, 1, nn.Upsample, [None, 2, "nearest"]]
  - [[-1, 6], 1, Concat, [1]] # cat backbone P4
  - [-1, 2, C3k2, [512, False]] # 13

  - [-1, 1, nn.Upsample, [None, 2, "nearest"]]
  - [[-1, 4], 1, Concat, [1]] # cat backbone P3
  - [-1, 2, C3k2, [256, False]] # 16 (P3/8-small)

  - [-1, 1, ModulatedDeformConv2dPack, [256, 3, 2]]
  - [[-1, 13], 1, Concat, [1]] # cat head P4
  - [-1, 2, C3k2, [512, False]] # 19 (P4/16-medium)

  - [-1, 1, ModulatedDeformConv2dPack, [512, 3, 2]]
  - [[-1, 10], 1, Concat, [1]] # cat head P5
  - [-1, 2, C3k2, [1024, True]] # 22 (P5/32-large)

  - [[16, 19, 22], 1, Detect, [nc]] # Detect(P3, P4, P5)
```

5.4 Training code

Create a .py file, paste in the code below, and set your own file paths; it is then ready to run.

```python
import warnings

warnings.filterwarnings('ignore')
from ultralytics import YOLO

if __name__ == '__main__':
    # Placeholder file name: point this at whichever YAML file from 5.1-5.3 you saved.
    model = YOLO('yolo11-C3k2-MDConv1.yaml')
    # To switch model scale, change the name passed to YOLO() above: 'yolo11s.yaml' selects the s scale,
    # and for a modified config named 'yolo11-XXX.yaml', pass 'yolo11l-XXX.yaml' to use the l scale.
    # Only the name passed to YOLO() changes, not the config file itself.
    # model.load('yolo11n.pt')  # optionally load pretrained weights; not recommended for research, otherwise it is hard to improve accuracy
    model.train(data=r"C:\Users\Administrator\PycharmProjects\yolov5-master\yolov5-master\Construction Site Safety.v30-raw-images_latestversion.yolov8\data.yaml",
                # For other tasks, edit `task` in 'ultralytics/cfg/default.yaml'; it can be detect, segment, classify, or pose.
                cache=False,
                imgsz=640,
                epochs=150,
                single_cls=False,  # whether this is single-class detection
                batch=16,
                close_mosaic=0,
                workers=0,
                device='0',
                optimizer='SGD',  # using SGD
                # resume='runs/train/exp21/weights/last.pt',  # set to the path of last.pt to resume training
                amp=True,  # turn amp off if the training loss becomes NaN
                project='runs/train',
                name='exp',
                )
```
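The scale-switching trick in the comments of the training script works because the scale letter is read from the file name. The sketch below shows that idea with a regular expression. Both the pattern and the fallback to `n` are my assumptions about how Ultralytics handles this, so treat it as an illustration rather than the library's actual code; `scale_from_name` is a hypothetical helper.

```python
import re
from pathlib import Path


def scale_from_name(cfg_name, default="n"):
    """Extract the scale letter (n/s/m/l/x) that follows the version number, if any."""
    match = re.search(r"yolo[v]?\d+([nslmx])", Path(cfg_name).stem)
    return match.group(1) if match else default


print(scale_from_name("yolo11-XXX.yaml"))   # n (no letter, falls back to the default)
print(scale_from_name("yolo11l-XXX.yaml"))  # l
```

The selected letter then picks the matching `[depth, width, max_channels]` row from the `scales` section of the YAML file.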


6. Summary

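That concludes this post. I recommend my column on effective YOLOv11 improvements; it is newly launched, with an average quality score of 98. I will keep reproducing papers from the latest top conferences and extending some of the older improvement mechanisms. If this post helped you, subscribe to the column and follow along for further updates.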