1. Introduction

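This article introduces a new improvement: replacing the core operator of DynamicHead (DyHead) with DCNv3. DyHead is built around DCNv2, and DCNv3 is its more recent successor, so it is expected to perform better. I therefore swapped DCNv3 in as the core mechanism, which effectively upgrades DyHead, and in my experiments the result is better than the plain version. As far as I know, this combination is my own work and has not been published elsewhere. My earlier one-to-one reproduction of DyHead has already drawn feedback from several readers who reported accuracy gains, and the DCNv3 variant in this article gained three to four points on my own dataset, so it is worth trying against the baseline DyHead. This detection head is also well suited to use in papers.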

You are welcome to subscribe to my column and learn YOLO together!

2. How DCN Works

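Let's start with the broader concept. DCN stands for Deformable Convolutional Networks. It is a convolutional neural network module used for object detection and image segmentation that introduces a deformable convolution operation to improve the model's ability to handle geometric deformation of objects.

What is deformable convolution? The figure below makes it clear.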

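The figure above shows the sampling locations of standard convolution and deformable convolution. In standard convolution (a), the sampling locations are arranged on a regular grid (green points): the kernel samples the input feature map at fixed grid positions.

In deformable convolution (b), the sampling locations are deformed by offsets (dark blue points), with the augmented offsets drawn as light blue arrows. Sampling is therefore no longer restricted to the regular grid; the kernel can sample the input feature map wherever the offsets point.

Deformable convolution generalizes various transformations, such as scale, (anisotropic) aspect ratio, and rotation; (c) and (d) show these as special cases. It can therefore adapt more flexibly to different kinds of transformation, which strengthens the model's ability to handle object deformation.

In short, standard convolution samples on a regular grid, while deformable convolution uses offsets to sample irregularly, which gives it stronger generalization to shape transformations (scale, aspect ratio, rotation, and so on).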
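To make the difference concrete, here is a minimal pure-Python sketch of one 3x3 output value computed with and without offsets. It is only an illustration under my own assumptions: the helper names `bilinear` and `deform_conv_at` are hypothetical, and the offsets are set by hand, whereas a real DCN layer predicts them from the input with an extra convolution.

```python
import math


def bilinear(feat, y, x):
    """Bilinearly sample a 2D list `feat` at fractional (y, x); outside counts as 0."""
    h, w = len(feat), len(feat[0])
    y0, x0 = math.floor(y), math.floor(x)
    total = 0.0
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < h and 0 <= xx < w:
                weight = (1 - abs(y - yy)) * (1 - abs(x - xx))
                total += weight * feat[yy][xx]
    return total


def deform_conv_at(feat, weights, cy, cx, offsets=None):
    """3x3 response at centre (cy, cx).

    offsets: optional list of 9 (dy, dx) pairs, one per kernel tap.
    With offsets=None this is an ordinary 3x3 convolution.
    """
    taps = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
    offsets = offsets or [(0.0, 0.0)] * 9
    out = 0.0
    for w, (dy, dx), (oy, ox) in zip(weights, taps, offsets):
        # Regular grid position plus the per-tap offset.
        out += w * bilinear(feat, cy + dy + oy, cx + dx + ox)
    return out


feat = [[float(r * 5 + c) for c in range(5)] for r in range(5)]  # 5x5 ramp, values 0..24
weights = [1.0 / 9] * 9  # simple mean filter

regular = deform_conv_at(feat, weights, 2, 2)
shifted = deform_conv_at(feat, weights, 2, 2, offsets=[(0.0, 0.5)] * 9)
print(regular, shifted)
```

Shifting every tap half a pixel to the right moves the mean from about 12.0 to about 12.5 on this ramp, which shows that the offsets change where the kernel reads from, not the kernel weights themselves.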

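A three-dimensional view, shown below, should make this more intuitive.

The input feature map is on the left and the output feature map is on the right. The kernel is 3x3, so a 3x3 region of the input is mapped to a single 1x1 position of the output. The question is how that 3x3 region is chosen. Standard convolution uses a regular shape. Deformable convolution adds an offset computed separately for each point, which changes where each of the 3x3 points is taken from, so that potentially more informative points are sampled and detection improves.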

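Now let's look at how deformable convolution behaves in actual detection. The images below show activation units on the background, on a small object, and on a large object; the red points mark the locations from which features are sampled.

Each image triplet shows the sampling locations of three levels of 3×3 deformable filters (729 red points in each image) for three activation units (green points) located on the background (left), a small object (middle), and a large object (right).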
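The figure of 729 points follows from stacking three 3×3 filters: each tap of the top filter reads a unit that itself has 9 taps, and so on for three levels. A short arithmetic check (the variable names are my own):

```python
kernel_taps = 3 * 3   # sampling points of one 3x3 deformable filter
levels = 3            # three stacked deformable filter levels
points_per_unit = kernel_taps ** levels
print(points_per_unit)  # 729
```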

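The purpose of this figure is to show how the sampling locations of deformable convolution change with object scale. In the background image on the left, the sampling locations are mostly spread over the background. In the small-object image in the middle, the sampling locations shift toward the small object and form a denser cluster around it. In the large-object image on the right, the sampling locations spread further and cover the whole object, capturing its details and deformation better.

These figures show that the sampling locations of deformable convolution adapt to the scale of the target, so features are captured more accurately on objects of different sizes. This strengthens the model's perception of targets at different scales and makes it better suited to detecting objects of varying size, which is why I consider this head suitable for a wide range of detection targets.

The figure above may make this more intuitive.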

3. Core Code of DynamicDCNv3Head

See Section 4 for how to use this code.

```python
import copy
import math
from mmcv.ops import ModulatedDeformConv2d
from ultralytics.nn.modules import DFL
from ultralytics.utils.tal import dist2bbox, make_anchors
import warnings
import torch
from torch import nn
import torch.nn.functional as F
from torch.nn.init import xavier_uniform_, constant_

__all__ = ['DynamicDCNv3Head']


def _get_reference_points(spatial_shapes, device, kernel_h, kernel_w, dilation_h, dilation_w, pad_h=0, pad_w=0, stride_h=1, stride_w=1):
    _, H_, W_, _ = spatial_shapes
    H_out = (H_ - (dilation_h * (kernel_h - 1) + 1)) // stride_h + 1
    W_out = (W_ - (dilation_w * (kernel_w - 1) + 1)) // stride_w + 1

    ref_y, ref_x = torch.meshgrid(
        torch.linspace(
            # pad_h + 0.5,
            # H_ - pad_h - 0.5,
            (dilation_h * (kernel_h - 1)) // 2 + 0.5,
            (dilation_h * (kernel_h - 1)) // 2 + 0.5 + (H_out - 1) * stride_h,
            H_out,
            dtype=torch.float32,
            device=device),
        torch.linspace(
            # pad_w + 0.5,
            # W_ - pad_w - 0.5,
            (dilation_w * (kernel_w - 1)) // 2 + 0.5,
            (dilation_w * (kernel_w - 1)) // 2 + 0.5 + (W_out - 1) * stride_w,
            W_out,
            dtype=torch.float32,
            device=device))
    ref_y = ref_y.reshape(-1)[None] / H_
    ref_x = ref_x.reshape(-1)[None] / W_

    ref = torch.stack((ref_x, ref_y), -1).reshape(
        1, H_out, W_out, 1, 2)

    return ref


def _generate_dilation_grids(spatial_shapes, kernel_h, kernel_w, dilation_h, dilation_w, group, device):
    _, H_, W_, _ = spatial_shapes
    points_list = []
    x, y = torch.meshgrid(
        torch.linspace(
            -((dilation_w * (kernel_w - 1)) // 2),
            -((dilation_w * (kernel_w - 1)) // 2) +
            (kernel_w - 1) * dilation_w, kernel_w,
            dtype=torch.float32,
            device=device),
        torch.linspace(
            -((dilation_h * (kernel_h - 1)) // 2),
            -((dilation_h * (kernel_h - 1)) // 2) +
            (kernel_h - 1) * dilation_h, kernel_h,
            dtype=torch.float32,
            device=device))

    points_list.extend([x / W_, y / H_])
    grid = torch.stack(points_list, -1).reshape(-1, 1, 2).\
        repeat(1, group, 1).permute(1, 0, 2)
    grid = grid.reshape(1, 1, 1, group * kernel_h * kernel_w, 2)

    return grid


def dcnv3_core_pytorch(
        input, offset, mask, kernel_h,
        kernel_w, stride_h, stride_w, pad_h,
        pad_w, dilation_h, dilation_w, group,
        group_channels, offset_scale):
    # for debug and test only,
    # need to use cuda version instead
    input = F.pad(
        input,
        [0, 0, pad_h, pad_h, pad_w, pad_w])
    N_, H_in, W_in, _ = input.shape
    _, H_out, W_out, _ = offset.shape

    ref = _get_reference_points(
        input.shape, input.device, kernel_h, kernel_w, dilation_h, dilation_w, pad_h, pad_w, stride_h, stride_w)
    grid = _generate_dilation_grids(
        input.shape, kernel_h, kernel_w, dilation_h, dilation_w, group, input.device)
    spatial_norm = torch.tensor([W_in, H_in]).reshape(1, 1, 1, 2).\
        repeat(1, 1, 1, group*kernel_h*kernel_w).to(input.device)

    sampling_locations = (ref + grid * offset_scale).repeat(N_, 1, 1, 1, 1).flatten(3, 4) + \
        offset * offset_scale / spatial_norm

    P_ = kernel_h * kernel_w
    sampling_grids = 2 * sampling_locations - 1
    # N_, H_in, W_in, group*group_channels -> N_, H_in*W_in, group*group_channels -> N_, group*group_channels, H_in*W_in -> N_*group, group_channels, H_in, W_in
    input_ = input.view(N_, H_in*W_in, group*group_channels).transpose(1, 2).\
        reshape(N_*group, group_channels, H_in, W_in)
    # N_, H_out, W_out, group*P_*2 -> N_, H_out*W_out, group, P_, 2 -> N_, group, H_out*W_out, P_, 2 -> N_*group, H_out*W_out, P_, 2
    sampling_grid_ = sampling_grids.view(N_, H_out*W_out, group, P_, 2).transpose(1, 2).\
        flatten(0, 1)
    # N_*group, group_channels, H_out*W_out, P_
    sampling_input_ = F.grid_sample(
        input_, sampling_grid_, mode='bilinear', padding_mode='zeros', align_corners=False)

    # (N_, H_out, W_out, group*P_) -> N_, H_out*W_out, group, P_ -> (N_, group, H_out*W_out, P_) -> (N_*group, 1, H_out*W_out, P_)
    mask = mask.view(N_, H_out*W_out, group, P_).transpose(1, 2).\
        reshape(N_*group, 1, H_out*W_out, P_)
    output = (sampling_input_ * mask).sum(-1).view(N_,
                                                   group*group_channels, H_out*W_out)

    return output.transpose(1, 2).reshape(N_, H_out, W_out, -1).contiguous()
```

```python
class to_channels_first(nn.Module):

    def __init__(self):
        super().__init__()

    def forward(self, x):
        return x.permute(0, 3, 1, 2)


class to_channels_last(nn.Module):

    def __init__(self):
        super().__init__()

    def forward(self, x):
        return x.permute(0, 2, 3, 1)


def build_norm_layer(dim,
                     norm_layer,
                     in_format='channels_last',
                     out_format='channels_last',
                     eps=1e-6):
    layers = []
    if norm_layer == 'BN':
        if in_format == 'channels_last':
            layers.append(to_channels_first())
        layers.append(nn.BatchNorm2d(dim))
        if out_format == 'channels_last':
            layers.append(to_channels_last())
    elif norm_layer == 'LN':
        if in_format == 'channels_first':
            layers.append(to_channels_last())
        layers.append(nn.LayerNorm(dim, eps=eps))
        if out_format == 'channels_first':
            layers.append(to_channels_first())
    else:
        raise NotImplementedError(
            f'build_norm_layer does not support {norm_layer}')
    return nn.Sequential(*layers)


def build_act_layer(act_layer):
    if act_layer == 'ReLU':
        return nn.ReLU(inplace=True)
    elif act_layer == 'SiLU':
        return nn.SiLU(inplace=True)
    elif act_layer == 'GELU':
        return nn.GELU()

    raise NotImplementedError(f'build_act_layer does not support {act_layer}')


def _is_power_of_2(n):
    if (not isinstance(n, int)) or (n < 0):
        raise ValueError(
            "invalid input for _is_power_of_2: {} (type: {})".format(n, type(n)))

    return (n & (n - 1) == 0) and n != 0


class CenterFeatureScaleModule(nn.Module):
    def forward(self,
                query,
                center_feature_scale_proj_weight,
                center_feature_scale_proj_bias):
        center_feature_scale = F.linear(query,
                                        weight=center_feature_scale_proj_weight,
                                        bias=center_feature_scale_proj_bias).sigmoid()
        return center_feature_scale
```

```python
class DCNv3_pytorch(nn.Module):
    def __init__(
            self,
            channels=64,
            kernel_size=3,
            dw_kernel_size=None,
            stride=1,
            pad=1,
            dilation=1,
            group=4,
            offset_scale=1.0,
            act_layer='GELU',
            norm_layer='LN',
            center_feature_scale=False):
        """
        DCNv3 Module
        :param channels
        :param kernel_size
        :param stride
        :param pad
        :param dilation
        :param group
        :param offset_scale
        :param act_layer
        :param norm_layer
        """
        super().__init__()
        if channels % group != 0:
            raise ValueError(
                f'channels must be divisible by group, but got {channels} and {group}')
        _d_per_group = channels // group
        dw_kernel_size = dw_kernel_size if dw_kernel_size is not None else kernel_size
        # you'd better set _d_per_group to a power of 2 which is more efficient in our CUDA implementation
        if not _is_power_of_2(_d_per_group):
            warnings.warn(
                "You'd better set channels in DCNv3 to make the dimension of each attention head a power of 2 "
                "which is more efficient in our CUDA implementation.")

        self.offset_scale = offset_scale
        self.channels = channels
        self.kernel_size = kernel_size
        self.dw_kernel_size = dw_kernel_size
        self.stride = stride
        self.dilation = dilation
        self.pad = pad
        self.group = group
        self.group_channels = channels // group
        self.offset_scale = offset_scale
        self.center_feature_scale = center_feature_scale

        self.dw_conv = nn.Sequential(
            nn.Conv2d(
                channels,
                channels,
                kernel_size=dw_kernel_size,
                stride=1,
                padding=(dw_kernel_size - 1) // 2,
                groups=channels),
            build_norm_layer(
                channels,
                norm_layer,
                'channels_first',
                'channels_last'),
            build_act_layer(act_layer))
        self.offset = nn.Linear(
            channels,
            group * kernel_size * kernel_size * 2)
        self.mask = nn.Linear(
            channels,
            group * kernel_size * kernel_size)
        self.input_proj = nn.Linear(channels, channels)
        self.output_proj = nn.Linear(channels, channels)
        self._reset_parameters()

        if center_feature_scale:
            self.center_feature_scale_proj_weight = nn.Parameter(
                torch.zeros((group, channels), dtype=torch.float))
            self.center_feature_scale_proj_bias = nn.Parameter(
                torch.tensor(0.0, dtype=torch.float).view((1,)).repeat(group, ))
            self.center_feature_scale_module = CenterFeatureScaleModule()

    def _reset_parameters(self):
        constant_(self.offset.weight.data, 0.)
        constant_(self.offset.bias.data, 0.)
        constant_(self.mask.weight.data, 0.)
        constant_(self.mask.bias.data, 0.)
        xavier_uniform_(self.input_proj.weight.data)
        constant_(self.input_proj.bias.data, 0.)
        xavier_uniform_(self.output_proj.weight.data)
        constant_(self.output_proj.bias.data, 0.)

    def forward(self, input):
        """
        :param query (N, H, W, C)
        :return output (N, H, W, C)
        """
        input = input.permute(0, 2, 3, 1)
        N, H, W, _ = input.shape

        x = self.input_proj(input)
        x_proj = x

        x1 = input.permute(0, 3, 1, 2)
        x1 = self.dw_conv(x1)
        offset = self.offset(x1)
        mask = self.mask(x1).reshape(N, H, W, self.group, -1)
        mask = F.softmax(mask, -1).reshape(N, H, W, -1)

        x = dcnv3_core_pytorch(
            x, offset, mask,
            self.kernel_size, self.kernel_size,
            self.stride, self.stride,
            self.pad, self.pad,
            self.dilation, self.dilation,
            self.group, self.group_channels,
            self.offset_scale)
        if self.center_feature_scale:
            center_feature_scale = self.center_feature_scale_module(
                x1, self.center_feature_scale_proj_weight, self.center_feature_scale_proj_bias)
            # N, H, W, groups -> N, H, W, groups, 1 -> N, H, W, groups, _d_per_group -> N, H, W, channels
            center_feature_scale = center_feature_scale[..., None].repeat(
                1, 1, 1, 1, self.channels // self.group).flatten(-2)
            x = x * (1 - center_feature_scale) + x_proj * center_feature_scale
        x = self.output_proj(x).permute(0, 3, 1, 2)

        return x
```

```python
def _make_divisible(v, divisor, min_value=None):
    if min_value is None:
        min_value = divisor
    new_v = max(min_value, int(v + divisor / 2) // divisor * divisor)
    # Make sure that round down does not go down by more than 10%.
    if new_v < 0.9 * v:
        new_v += divisor
    return new_v


class h_swish(nn.Module):
    def __init__(self, inplace=False):
        super(h_swish, self).__init__()
        self.inplace = inplace

    def forward(self, x):
        return x * F.relu6(x + 3.0, inplace=self.inplace) / 6.0


class h_sigmoid(nn.Module):
    def __init__(self, inplace=True, h_max=1):
        super(h_sigmoid, self).__init__()
        self.relu = nn.ReLU6(inplace=inplace)
        self.h_max = h_max

    def forward(self, x):
        return self.relu(x + 3) * self.h_max / 6


class DYReLU(nn.Module):
    def __init__(self, inp, oup, reduction=4, lambda_a=1.0, K2=True, use_bias=True, use_spatial=False,
                 init_a=[1.0, 0.0], init_b=[0.0, 0.0]):
        super(DYReLU, self).__init__()
        self.oup = oup
        self.lambda_a = lambda_a * 2
        self.K2 = K2
        self.avg_pool = nn.AdaptiveAvgPool2d(1)

        self.use_bias = use_bias
        if K2:
            self.exp = 4 if use_bias else 2
        else:
            self.exp = 2 if use_bias else 1
        self.init_a = init_a
        self.init_b = init_b

        # determine squeeze
        if reduction == 4:
            squeeze = inp // reduction
        else:
            squeeze = _make_divisible(inp // reduction, 4)
        # print('reduction: {}, squeeze: {}/{}'.format(reduction, inp, squeeze))
        # print('init_a: {}, init_b: {}'.format(self.init_a, self.init_b))

        self.fc = nn.Sequential(
            nn.Linear(inp, squeeze),
            nn.ReLU(inplace=True),
            nn.Linear(squeeze, oup * self.exp),
            h_sigmoid()
        )
        if use_spatial:
            self.spa = nn.Sequential(
                nn.Conv2d(inp, 1, kernel_size=1),
                nn.BatchNorm2d(1),
            )
        else:
            self.spa = None

    def forward(self, x):
        if isinstance(x, list):
            x_in = x[0]
            x_out = x[1]
        else:
            x_in = x
            x_out = x
        b, c, h, w = x_in.size()
        y = self.avg_pool(x_in).view(b, c)
        y = self.fc(y).view(b, self.oup * self.exp, 1, 1)
        if self.exp == 4:
            a1, b1, a2, b2 = torch.split(y, self.oup, dim=1)
            a1 = (a1 - 0.5) * self.lambda_a + self.init_a[0]  # 1.0
            a2 = (a2 - 0.5) * self.lambda_a + self.init_a[1]

            b1 = b1 - 0.5 + self.init_b[0]
            b2 = b2 - 0.5 + self.init_b[1]
            out = torch.max(x_out * a1 + b1, x_out * a2 + b2)
        elif self.exp == 2:
            if self.use_bias:  # bias but not PL
                a1, b1 = torch.split(y, self.oup, dim=1)
                a1 = (a1 - 0.5) * self.lambda_a + self.init_a[0]  # 1.0
                b1 = b1 - 0.5 + self.init_b[0]
                out = x_out * a1 + b1

            else:
                a1, a2 = torch.split(y, self.oup, dim=1)
                a1 = (a1 - 0.5) * self.lambda_a + self.init_a[0]  # 1.0
                a2 = (a2 - 0.5) * self.lambda_a + self.init_a[1]
                out = torch.max(x_out * a1, x_out * a2)

        elif self.exp == 1:
            a1 = y
            a1 = (a1 - 0.5) * self.lambda_a + self.init_a[0]  # 1.0
            out = x_out * a1

        if self.spa:
            ys = self.spa(x_in).view(b, -1)
            ys = F.softmax(ys, dim=1).view(b, 1, h, w) * h * w
            ys = F.hardtanh(ys, 0, 3, inplace=True)/3
            out = out * ys

        return out
```

```python
class Conv3x3Norm(torch.nn.Module):
    def __init__(self, in_channels, out_channels, stride):
        super(Conv3x3Norm, self).__init__()
        self.stride = stride
        self.dcnv3 = DCNv3_pytorch(in_channels, kernel_size=3, stride=stride)
        self.dcnv2 = ModulatedDeformConv2d(in_channels, out_channels, kernel_size=3, stride=stride, padding=1)
        self.bn = nn.GroupNorm(num_groups=16, num_channels=out_channels)

    def forward(self, input, **kwargs):
        if self.stride == 2:
            x = self.dcnv2(input.contiguous(), **kwargs)
        else:
            x = self.dcnv3(input)
        x = self.bn(x)
        return x


class DyConv(nn.Module):
    def __init__(self, in_channels=256, out_channels=256, conv_func=Conv3x3Norm):
        super(DyConv, self).__init__()

        self.DyConv = nn.ModuleList()
        self.DyConv.append(conv_func(in_channels, out_channels, 1))
        self.DyConv.append(conv_func(in_channels, out_channels, 1))
        self.DyConv.append(conv_func(in_channels, out_channels, 2))

        self.AttnConv = nn.Sequential(
            nn.AdaptiveAvgPool2d(1),
            nn.Conv2d(in_channels, 1, kernel_size=1),
            nn.ReLU(inplace=True))

        self.h_sigmoid = h_sigmoid()
        self.relu = DYReLU(in_channels, out_channels)
        self.offset = nn.Conv2d(in_channels, 27, kernel_size=3, stride=1, padding=1)
        self.init_weights()

    def init_weights(self):
        for m in self.DyConv.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.normal_(m.weight.data, 0, 0.01)
                if m.bias is not None:
                    m.bias.data.zero_()
        for m in self.AttnConv.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.normal_(m.weight.data, 0, 0.01)
                if m.bias is not None:
                    m.bias.data.zero_()

    def forward(self, x):
        next_x = {}
        feature_names = list(x.keys())
        for level, name in enumerate(feature_names):

            feature = x[name]

            offset_mask = self.offset(feature)
            offset = offset_mask[:, :18, :, :]
            mask = offset_mask[:, 18:, :, :].sigmoid()
            conv_args = dict(offset=offset, mask=mask)

            temp_fea = [self.DyConv[1](feature, **conv_args)]
            if level > 0:
                temp_fea.append(self.DyConv[2](x[feature_names[level - 1]], **conv_args))
            if level < len(x) - 1:
                input = x[feature_names[level + 1]]
                temp_fea.append(F.interpolate(self.DyConv[0](input, **conv_args),
                                              size=[feature.size(2), feature.size(3)]))
            attn_fea = []
            res_fea = []
            for fea in temp_fea:
                res_fea.append(fea)
                attn_fea.append(self.AttnConv(fea))

            res_fea = torch.stack(res_fea)
            spa_pyr_attn = self.h_sigmoid(torch.stack(attn_fea))

            mean_fea = torch.mean(res_fea * spa_pyr_attn, dim=0, keepdim=False)
            next_x[name] = self.relu(mean_fea)

        return next_x
```

```python
def autopad(k, p=None, d=1):  # kernel, padding, dilation
    """Pad to 'same' shape outputs."""
    if d > 1:
        k = d * (k - 1) + 1 if isinstance(k, int) else [d * (x - 1) + 1 for x in k]  # actual kernel-size
    if p is None:
        p = k // 2 if isinstance(k, int) else [x // 2 for x in k]  # auto-pad
    return p


class Conv(nn.Module):
    """Standard convolution with args(ch_in, ch_out, kernel, stride, padding, groups, dilation, activation)."""
    default_act = nn.SiLU()  # default activation

    def __init__(self, c1, c2, k=1, s=1, p=None, g=1, d=1, act=True):
        """Initialize Conv layer with given arguments including activation."""
        super().__init__()
        self.conv = nn.Conv2d(c1, c2, k, s, autopad(k, p, d), groups=g, dilation=d, bias=False)
        self.bn = nn.BatchNorm2d(c2)
        self.act = self.default_act if act is True else act if isinstance(act, nn.Module) else nn.Identity()

    def forward(self, x):
        """Apply convolution, batch normalization and activation to input tensor."""
        return self.act(self.bn(self.conv(x)))

    def forward_fuse(self, x):
        """Perform transposed convolution of 2D data."""
        return self.act(self.conv(x))


class DWConv(Conv):
    """Depth-wise convolution."""

    def __init__(self, c1, c2, k=1, s=1, d=1, act=True):  # ch_in, ch_out, kernel, stride, dilation, activation
        """Initialize Depth-wise convolution with given parameters."""
        super().__init__(c1, c2, k, s, g=math.gcd(c1, c2), d=d, act=act)
```

```python
class DynamicDCNv3Head(nn.Module):
    """YOLOv8 Detect head for detection models. CSDNSnu77"""

    dynamic = False  # force grid reconstruction
    export = False  # export mode
    end2end = False  # end2end
    max_det = 300  # max_det
    shape = None
    anchors = torch.empty(0)  # init
    strides = torch.empty(0)  # init

    def __init__(self, nc=80, ch=()):
        """Initializes the YOLOv8 detection layer with specified number of classes and channels."""
        super().__init__()
        self.nc = nc  # number of classes
        self.nl = len(ch)  # number of detection layers
        self.reg_max = 16  # DFL channels (ch[0] // 16 to scale 4/8/12/16/20 for n/s/m/l/x)
        self.no = nc + self.reg_max * 4  # number of outputs per anchor
        self.stride = torch.zeros(self.nl)  # strides computed during build
        c2, c3 = max((16, ch[0] // 4, self.reg_max * 4)), max(ch[0], min(self.nc, 100))  # channels
        self.cv2 = nn.ModuleList(
            nn.Sequential(Conv(x, c2, 3), Conv(c2, c2, 3), nn.Conv2d(c2, 4 * self.reg_max, 1)) for x in ch
        )
        self.cv3 = nn.ModuleList(
            nn.Sequential(
                nn.Sequential(DWConv(x, x, 3), Conv(x, c3, 1)),
                nn.Sequential(DWConv(c3, c3, 3), Conv(c3, c3, 1)),
                nn.Conv2d(c3, self.nc, 1),
            )
            for x in ch
        )
        self.dfl = DFL(self.reg_max) if self.reg_max > 1 else nn.Identity()
        dyhead_tower = []
        for i in range(self.nl):
            channel = ch[i]
            dyhead_tower.append(
                DyConv(
                    channel,
                    channel,
                    conv_func=Conv3x3Norm,
                )
            )
        self.add_module('dyhead_tower', nn.Sequential(*dyhead_tower))
        if self.end2end:
            self.one2one_cv2 = copy.deepcopy(self.cv2)
            self.one2one_cv3 = copy.deepcopy(self.cv3)

    def forward(self, x):
        tensor_dict = {i: tensor for i, tensor in enumerate(x)}
        x = self.dyhead_tower(tensor_dict)
        x = list(x.values())
        """Concatenates and returns predicted bounding boxes and class probabilities."""
        if self.end2end:
            return self.forward_end2end(x)

        for i in range(self.nl):
            x[i] = torch.cat((self.cv2[i](x[i]), self.cv3[i](x[i])), 1)
        if self.training:  # Training path
            return x
        y = self._inference(x)
        return y if self.export else (y, x)

    def forward_end2end(self, x):
        """
        Performs forward pass of the v10Detect module.

        Args:
            x (tensor): Input tensor.

        Returns:
            (dict, tensor): If not in training mode, returns a dictionary containing the outputs of both one2many and one2one detections.
                If in training mode, returns a dictionary containing the outputs of one2many and one2one detections separately.
        """
        x_detach = [xi.detach() for xi in x]
        one2one = [
            torch.cat((self.one2one_cv2[i](x_detach[i]), self.one2one_cv3[i](x_detach[i])), 1) for i in range(self.nl)
        ]
        for i in range(self.nl):
            x[i] = torch.cat((self.cv2[i](x[i]), self.cv3[i](x[i])), 1)
        if self.training:  # Training path
            return {"one2many": x, "one2one": one2one}

        y = self._inference(one2one)
        y = self.postprocess(y.permute(0, 2, 1), self.max_det, self.nc)
        return y if self.export else (y, {"one2many": x, "one2one": one2one})

    def _inference(self, x):
        """Decode predicted bounding boxes and class probabilities based on multiple-level feature maps."""
        # Inference path
        shape = x[0].shape  # BCHW
        x_cat = torch.cat([xi.view(shape[0], self.no, -1) for xi in x], 2)
        if self.dynamic or self.shape != shape:
            self.anchors, self.strides = (x.transpose(0, 1) for x in make_anchors(x, self.stride, 0.5))
            self.shape = shape

        if self.export and self.format in {"saved_model", "pb", "tflite", "edgetpu", "tfjs"}:  # avoid TF FlexSplitV ops
            box = x_cat[:, : self.reg_max * 4]
            cls = x_cat[:, self.reg_max * 4 :]
        else:
            box, cls = x_cat.split((self.reg_max * 4, self.nc), 1)

        if self.export and self.format in {"tflite", "edgetpu"}:
            # Precompute normalization factor to increase numerical stability
            # See https://github.com/ultralytics/ultralytics/issues/7371
            grid_h = shape[2]
            grid_w = shape[3]
            grid_size = torch.tensor([grid_w, grid_h, grid_w, grid_h], device=box.device).reshape(1, 4, 1)
            norm = self.strides / (self.stride[0] * grid_size)
            dbox = self.decode_bboxes(self.dfl(box) * norm, self.anchors.unsqueeze(0) * norm[:, :2])
        else:
            dbox = self.decode_bboxes(self.dfl(box), self.anchors.unsqueeze(0)) * self.strides

        return torch.cat((dbox, cls.sigmoid()), 1)

    def bias_init(self):
        """Initialize Detect() biases, WARNING: requires stride availability."""
        m = self  # self.model[-1]  # Detect() module
        # cf = torch.bincount(torch.tensor(np.concatenate(dataset.labels, 0)[:, 0]).long(), minlength=nc) + 1
        # ncf = math.log(0.6 / (m.nc - 0.999999)) if cf is None else torch.log(cf / cf.sum())  # nominal class frequency
        for a, b, s in zip(m.cv2, m.cv3, m.stride):  # from
            a[-1].bias.data[:] = 1.0  # box
            b[-1].bias.data[: m.nc] = math.log(5 / m.nc / (640 / s) ** 2)  # cls (.01 objects, 80 classes, 640 img)
        if self.end2end:
            for a, b, s in zip(m.one2one_cv2, m.one2one_cv3, m.stride):  # from
                a[-1].bias.data[:] = 1.0  # box
                b[-1].bias.data[: m.nc] = math.log(5 / m.nc / (640 / s) ** 2)  # cls (.01 objects, 80 classes, 640 img)

    def decode_bboxes(self, bboxes, anchors):
        """Decode bounding boxes."""
        return dist2bbox(bboxes, anchors, xywh=not self.end2end, dim=1)

    @staticmethod
    def postprocess(preds: torch.Tensor, max_det: int, nc: int = 80):
        """
        Post-processes YOLO model predictions.

        Args:
            preds (torch.Tensor): Raw predictions with shape (batch_size, num_anchors, 4 + nc) with last dimension
                format [x, y, w, h, class_probs].
            max_det (int): Maximum detections per image.
            nc (int, optional): Number of classes. Default: 80.

        Returns:
            (torch.Tensor): Processed predictions with shape (batch_size, min(max_det, num_anchors), 6) and last
                dimension format [x, y, w, h, max_class_prob, class_index].
        """
        batch_size, anchors, _ = preds.shape  # i.e. shape(16,8400,84)
        boxes, scores = preds.split([4, nc], dim=-1)
        index = scores.amax(dim=-1).topk(min(max_det, anchors))[1].unsqueeze(-1)
        boxes = boxes.gather(dim=1, index=index.repeat(1, 1, 4))
        scores = scores.gather(dim=1, index=index.repeat(1, 1, nc))
        scores, index = scores.flatten(1).topk(min(max_det, anchors))
        i = torch.arange(batch_size)[..., None]  # batch indices
        return torch.cat([boxes[i, index // nc], scores[..., None], (index % nc)[..., None].float()], dim=-1)
```

```python
if __name__ == "__main__":
    # Generating Sample image
    image1 = (1, 64, 32, 32)
    image2 = (1, 64, 16, 16)
    image3 = (1, 64, 8, 8)

    image1 = torch.rand(image1)
    image2 = torch.rand(image2)
    image3 = torch.rand(image3)
    image = [image1, image2, image3]
    channel = (64, 64, 64)
    # Model
    mobilenet_v1 = DynamicDCNv3Head(nc=80, ch=channel)

    out = mobilenet_v1(image)
    print(out)
```
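A quick way to sanity-check the shapes used in the test above is to redo the output-size arithmetic from `_get_reference_points` by hand. The sketch below is my own helper (the name `dcn_output_size` is hypothetical); it applies the same formula after padding the input, as `dcnv3_core_pytorch` does before computing the reference points.

```python
def dcn_output_size(size, kernel=3, stride=1, pad=1, dilation=1):
    """Spatial output size of the DCNv3 core for one dimension."""
    padded = size + 2 * pad                         # F.pad adds `pad` on both sides
    effective_kernel = dilation * (kernel - 1) + 1  # span covered by the dilated kernel
    return (padded - effective_kernel) // stride + 1


for size in (32, 16, 8):
    print(size, "->", dcn_output_size(size))
```

With the defaults used in the code (kernel 3, stride 1, pad 1, dilation 1), each of the three feature maps keeps its spatial size, which is what the head needs before stacking features from neighbouring levels.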

4. How to Add DynamicDCNv3Head

4.1 Modification 1

First, create a new .py file under the 'ultralytics/nn' directory and paste the code above into it. You can name the file whatever you like.

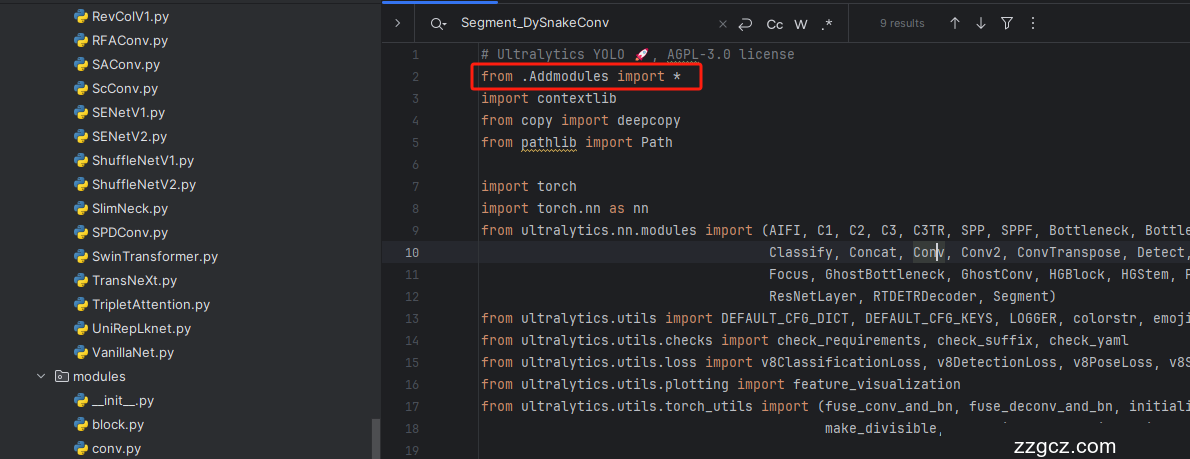

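4.2 Modification 2

Second, create a new .py file named '__init__.py' in the same directory (if you are using the files shared in the group, it already exists and you do not need to create it), then import the detection head inside it as shown in the figure below.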

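4.3 Modification 3

Third, open 'ultralytics/nn/tasks.py' and import and register the module there (if you are using the files shared in the group, this is already done, so skip straight to step four).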

4.4 Modification 4

Fourth, in 'ultralytics/nn/tasks.py', find the code shown below and add the detection head to it. A quick way to locate it is to press Ctrl+F and search for Detect.

4.5 Modification 5

Add the code marked by the red box.

4.6 Modification 6

Same as above.

4.7 Modification 7

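This step is slightly different: we need to add the following lines.

```python
else:
    return 'detect'
```

Why is it different? At this point in the code, m holds the module name converted to lowercase, so a plain else branch is the simplest option. If other tasks such as segmentation come up in later tutorials, I will provide separate instructions for them.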
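The effect of that fallback can be sketched as follows. This is a simplified stand-in that I wrote for illustration, not the actual code in 'ultralytics/nn/tasks.py'; the function name `guess_task_from_head` and the exact set of branches are assumptions.

```python
def guess_task_from_head(head_name: str) -> str:
    """Map the name of the last module in the yaml to a task string (illustrative only)."""
    m = head_name.lower()  # the name is lowercased before matching
    if m in {"classify", "classifier", "cls", "fc"}:
        return "classify"
    if m == "segment":
        return "segment"
    if m == "pose":
        return "pose"
    if m == "obb":
        return "obb"
    # Fallback added in this step: any unrecognised head, including
    # "dynamicdcnv3head", is treated as a detection head.
    return "detect"


print(guess_task_from_head("DynamicDCNv3Head"))
```

Because the custom head's lowercased name matches none of the known task names, the else branch is what lets the model be treated as a detection model.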

4.8 Modification 8

Same as above.

4.9 Modification 9

Open 'ultralytics/engine/validator.py', find 'class BaseValidator:', and in its '__call__' method add self.args.half = False directly below the line self.args.half = self.device.type != 'cpu' # force FP16 val during training.

That completes the modifications. You can now copy the yaml file below and run it.
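In effect, this change overrides the flag that would otherwise turn on FP16 validation during training, so validation always runs in full precision. The sketch below only mimics the two assignments with a stand-in object; `resolve_half` and `force_fp32` are hypothetical names of mine, and the real lines live inside `BaseValidator.__call__`.

```python
from types import SimpleNamespace


def resolve_half(device_type: str, force_fp32: bool = True) -> bool:
    """Return the value args.half ends up with after the two assignments."""
    args = SimpleNamespace(half=False)
    args.half = device_type != "cpu"  # original line: FP16 validation on non-CPU devices
    if force_fp32:
        args.half = False             # line added in this step
    return args.half


print(resolve_half("cuda"))                    # with the added line
print(resolve_half("cuda", force_fp32=False))  # original behaviour
```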

5. YAML File for the DynamicDCNv3Head Detection Head

Training summary for this version: YOLO11-DynamicDCNv3Head summary: 498 layers, 2,416,931 parameters, 2,416,915 gradients, 7.2 GFLOPs

```yaml
# Ultralytics YOLO 🚀, AGPL-3.0 license
# YOLO11 object detection model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/detect

# Parameters
nc: 80 # number of classes
scales: # model compound scaling constants, i.e. 'model=yolo11n.yaml' will call yolo11.yaml with scale 'n'
  # [depth, width, max_channels]
  n: [0.50, 0.25, 1024] # summary: 319 layers, 2624080 parameters, 2624064 gradients, 6.6 GFLOPs
  s: [0.50, 0.50, 1024] # summary: 319 layers, 9458752 parameters, 9458736 gradients, 21.7 GFLOPs
  m: [0.50, 1.00, 512] # summary: 409 layers, 20114688 parameters, 20114672 gradients, 68.5 GFLOPs
  l: [1.00, 1.00, 512] # summary: 631 layers, 25372160 parameters, 25372144 gradients, 87.6 GFLOPs
  x: [1.00, 1.50, 512] # summary: 631 layers, 56966176 parameters, 56966160 gradients, 196.0 GFLOPs

# YOLO11n backbone
backbone:
  # [from, repeats, module, args]
  - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2
  - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4
  - [-1, 2, C3k2, [256, False, 0.25]]
  - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8
  - [-1, 2, C3k2, [512, False, 0.25]]
  - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16
  - [-1, 2, C3k2, [512, True]]
  - [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32
  - [-1, 2, C3k2, [1024, True]]
  - [-1, 1, SPPF, [1024, 5]] # 9
  - [-1, 2, C2PSA, [1024]] # 10

# YOLO11n head
head:
  - [-1, 1, nn.Upsample, [None, 2, "nearest"]]
  - [[-1, 6], 1, Concat, [1]] # cat backbone P4
  - [-1, 2, C3k2, [256, False]] # 13

  - [-1, 1, nn.Upsample, [None, 2, "nearest"]]
  - [[-1, 4], 1, Concat, [1]] # cat backbone P3
  - [-1, 2, C3k2, [256, False]] # 16 (P3/8-small)

  - [-1, 1, Conv, [256, 3, 2]]
  - [[-1, 13], 1, Concat, [1]] # cat head P4
  - [-1, 2, C3k2, [256, False]] # 19 (P4/16-medium)

  - [-1, 1, Conv, [512, 3, 2]]
  - [[-1, 10], 1, Concat, [1]] # cat head P5
  - [-1, 2, C3k2, [256, True]] # 22 (P5/32-large)

  - [[16, 19, 22], 1, DynamicDCNv3Head, [nc]] # Detect(P3, P4, P5)
```
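Note that layers 16, 19 and 22 all use 256 output channels in this yaml. DyConv stacks features from neighbouring levels with torch.stack, so the three inputs to the head must have the same channel count. The sketch below estimates that count for scale 'n'. It assumes the width multiplier is applied as min(c, max_channels) * width and rounded up to a multiple of 8, which is my understanding of how Ultralytics parses the yaml; the helper names are my own.

```python
import math


def make_divisible(x, divisor=8):
    """Round x up to the nearest multiple of divisor."""
    return math.ceil(x / divisor) * divisor


def scaled_channels(c, width, max_channels):
    """Channel count after width scaling (assumed parser behaviour)."""
    return make_divisible(min(c, max_channels) * width, 8)


depth, width, max_channels = 0.50, 0.25, 1024  # scale 'n' from the yaml above
head_inputs = [scaled_channels(256, width, max_channels) for _ in (16, 19, 22)]
print(head_inputs)  # [64, 64, 64]
```

Under that assumption, every level feeds 64 channels into the head, matching the channel = (64, 64, 64) setting in the test at the end of the code. 64 is also divisible by the 16 groups of the GroupNorm in Conv3x3Norm, and with group=4 in DCNv3 it gives 16 channels per group, a power of 2.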

6. Successful Run Record

Finally, here is a screenshot of a successful run.

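7. Summary

That concludes this article. I recommend my column on effective YOLOv11 improvements. It is newly launched, with an average quality score of 98. I will keep reproducing papers from the latest top conferences and will also supplement some older improvement mechanisms. If this article helped you, subscribe to the column and follow along for further updates.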