一、本文介绍

本文给大家来的改进机制是 RepViT ,用其替换我们整个主干网络,其是今年最新推出的主干网络,其主要思想是将轻量级视觉 变换器 (ViT)的设计原则应用于传统的轻量级 卷积神经网络 (CNN)。我将其替换整个YOLOv11的Backbone,实现了大幅度涨点。我对修改后的网络(我用的最轻量的版本),在一个包含1000张图片包含大中小的检测目标的数据集上(共有20+类别),进行训练测试, 发现所有的目标上均有一定程度的涨点效果 ,下面我会附上基础版本和修改版本的训练对比图。

(本文内容可根据yolov11的N、S、M、L、X进行二次缩放,轻量化更上一层)。

二、RepViT基本原理

官方论文地址: 官方论文地址点击即可跳转

官方代码地址: 官方代码地址点击即可跳转

RepViT: Revisiting Mobile CNN From ViT Perspective 这篇论文探讨了如何改进轻量级卷积 神经网络 (CNN)以提高其在移动设备上的性能和效率。作者们发现,虽然轻量级视觉变换器( ViT )因其能够学习全局表示而表现出色,但轻量级CNN和轻量级ViT之间的架构差异尚未得到充分研究。因此,他们通过整合轻量级ViT的高效架构设计,逐步改进标准轻量级CNN(特别是MobileNetV3),从而创造了一系列全新的纯CNN模型,称为RepViT。这些模型在各种视觉任务上表现出色,比现有的轻量级ViT更高效。

其主要的改进机制包括:

-

结构性重组 :通过结构性重组(Structural Re-parameterization, SR),引入多分支拓扑结构,以提高训练时的性能。

-

扩展比率调整 :调整卷积层中的扩展比率,以减少参数冗余和延迟,同时提高网络宽度以增强 模型 性能。

-

宏观设计优化 :对网络的宏观架构进行优化,包括早期卷积层的设计、更深的下采样层、简化的分类器,以及整体阶段比例的调整。

-

微观设计调整 :在微观架构层面进行优化,包括卷积核大小的选择和压缩激励(SE)层的最佳放置。

这些创新机制共同推动了轻量级CNN的性能和效率,使其更适合在移动设备上使用,下面的是官方论文中的结构图,我们对其进行简单的分析。

这张图片是论文中的图3,展示了RepViT架构的总览。RepViT有四个阶段,输入图像的分辨率依次为

每个阶段的通道维度用 Ci 表示,批处理大小用 B 表示。

- Stem :用于预处理输入图像的模块。

- Stage1-4 :每个阶段由多个RepViTBlock组成,以及一个可选的RepViTSEBlock,包含深度可分离卷积(3x3DW),1x1卷积,压缩激励模块(SE)和前馈网络(FFN)。每个阶段通过下采样减少空间维度。

- Pooling :全局平均池化层,用于减少特征图的空间维度。

- FC :全连接层,用于最终的类别预测。

总结: 大家可以将RepViT看成是MobileNet系列的改进版本

三、RepViT的核心代码

下面的代码是整个RepViT的核心代码,其中有个版本,对应的GFLOPs也不相同,使用方式看章节四。

- from symbol import factor

- import torch.nn as nn

- from timm.models.layers import SqueezeExcite

- import torch

- __all__ = ['repvit_m0_6','repvit_m0_9', 'repvit_m1_0', 'repvit_m1_1', 'repvit_m1_5', 'repvit_m2_3']

- def _make_divisible(v, divisor, min_value=None):

- """

- This function is taken from the original tf repo.

- It ensures that all layers have a channel number that is divisible by 8

- It can be seen here:

- https://github.com/tensorflow/models/blob/master/research/slim/nets/mobilenet/mobilenet.py

- :param v:

- :param divisor:

- :param min_value:

- :return:

- """

- if min_value is None:

- min_value = divisor

- new_v = max(min_value, int(v + divisor / 2) // divisor * divisor)

- # Make sure that round down does not go down by more than 10%.

- if new_v < 0.9 * v:

- new_v += divisor

- return new_v

- class Conv2d_BN(torch.nn.Sequential):

- def __init__(self, a, b, ks=1, stride=1, pad=0, dilation=1,

- groups=1, bn_weight_init=1, resolution=-10000):

- super().__init__()

- self.add_module('c', torch.nn.Conv2d(

- a, b, ks, stride, pad, dilation, groups, bias=False))

- self.add_module('bn', torch.nn.BatchNorm2d(b))

- torch.nn.init.constant_(self.bn.weight, bn_weight_init)

- torch.nn.init.constant_(self.bn.bias, 0)

- @torch.no_grad()

- def fuse_self(self):

- c, bn = self._modules.values()

- w = bn.weight / (bn.running_var + bn.eps) ** 0.5

- w = c.weight * w[:, None, None, None]

- b = bn.bias - bn.running_mean * bn.weight / \

- (bn.running_var + bn.eps) ** 0.5

- m = torch.nn.Conv2d(w.size(1) * self.c.groups, w.size(

- 0), w.shape[2:], stride=self.c.stride, padding=self.c.padding, dilation=self.c.dilation,

- groups=self.c.groups,

- device=c.weight.device)

- m.weight.data.copy_(w)

- m.bias.data.copy_(b)

- return m

- class Residual(torch.nn.Module):

- def __init__(self, m, drop=0.):

- super().__init__()

- self.m = m

- self.drop = drop

- def forward(self, x):

- if self.training and self.drop > 0:

- return x + self.m(x) * torch.rand(x.size(0), 1, 1, 1,

- device=x.device).ge_(self.drop).div(1 - self.drop).detach()

- else:

- return x + self.m(x)

- @torch.no_grad()

- def fuse_self(self):

- if isinstance(self.m, Conv2d_BN):

- m = self.m.fuse_self()

- assert (m.groups == m.in_channels)

- identity = torch.ones(m.weight.shape[0], m.weight.shape[1], 1, 1)

- identity = torch.nn.functional.pad(identity, [1, 1, 1, 1])

- m.weight += identity.to(m.weight.device)

- return m

- elif isinstance(self.m, torch.nn.Conv2d):

- m = self.m

- assert (m.groups != m.in_channels)

- identity = torch.ones(m.weight.shape[0], m.weight.shape[1], 1, 1)

- identity = torch.nn.functional.pad(identity, [1, 1, 1, 1])

- m.weight += identity.to(m.weight.device)

- return m

- else:

- return self

- class RepVGGDW(torch.nn.Module):

- def __init__(self, ed) -> None:

- super().__init__()

- self.conv = Conv2d_BN(ed, ed, 3, 1, 1, groups=ed)

- self.conv1 = torch.nn.Conv2d(ed, ed, 1, 1, 0, groups=ed)

- self.dim = ed

- self.bn = torch.nn.BatchNorm2d(ed)

- def forward(self, x):

- return self.bn((self.conv(x) + self.conv1(x)) + x)

- @torch.no_grad()

- def fuse_self(self):

- conv = self.conv.fuse_self()

- conv1 = self.conv1

- conv_w = conv.weight

- conv_b = conv.bias

- conv1_w = conv1.weight

- conv1_b = conv1.bias

- conv1_w = torch.nn.functional.pad(conv1_w, [1, 1, 1, 1])

- identity = torch.nn.functional.pad(torch.ones(conv1_w.shape[0], conv1_w.shape[1], 1, 1, device=conv1_w.device),

- [1, 1, 1, 1])

- final_conv_w = conv_w + conv1_w + identity

- final_conv_b = conv_b + conv1_b

- conv.weight.data.copy_(final_conv_w)

- conv.bias.data.copy_(final_conv_b)

- bn = self.bn

- w = bn.weight / (bn.running_var + bn.eps) ** 0.5

- w = conv.weight * w[:, None, None, None]

- b = bn.bias + (conv.bias - bn.running_mean) * bn.weight / \

- (bn.running_var + bn.eps) ** 0.5

- conv.weight.data.copy_(w)

- conv.bias.data.copy_(b)

- return conv

- class RepViTBlock(nn.Module):

- def __init__(self, inp, hidden_dim, oup, kernel_size, stride, use_se, use_hs):

- super(RepViTBlock, self).__init__()

- assert stride in [1, 2]

- self.identity = stride == 1 and inp == oup

- assert (hidden_dim == 2 * inp)

- if stride == 2:

- self.token_mixer = nn.Sequential(

- Conv2d_BN(inp, inp, kernel_size, stride, (kernel_size - 1) // 2, groups=inp),

- SqueezeExcite(inp, 0.25) if use_se else nn.Identity(),

- Conv2d_BN(inp, oup, ks=1, stride=1, pad=0)

- )

- self.channel_mixer = Residual(nn.Sequential(

- # pw

- Conv2d_BN(oup, 2 * oup, 1, 1, 0),

- nn.GELU() if use_hs else nn.GELU(),

- # pw-linear

- Conv2d_BN(2 * oup, oup, 1, 1, 0, bn_weight_init=0),

- ))

- else:

- self.token_mixer = nn.Sequential(

- RepVGGDW(inp),

- SqueezeExcite(inp, 0.25) if use_se else nn.Identity(),

- )

- self.channel_mixer = Residual(nn.Sequential(

- # pw

- Conv2d_BN(inp, hidden_dim, 1, 1, 0),

- nn.GELU() if use_hs else nn.GELU(),

- # pw-linear

- Conv2d_BN(hidden_dim, oup, 1, 1, 0, bn_weight_init=0),

- ))

- def forward(self, x):

- return self.channel_mixer(self.token_mixer(x))

- class RepViT(nn.Module):

- def __init__(self, cfgs, factor):

- super(RepViT, self).__init__()

- # setting of inverted residual blocks

- cfgs = [sublist[:2] + [_make_divisible(int(sublist[2] * factor) , 8)] + sublist[3:] for sublist in cfgs]

- self.cfgs = cfgs

- # building first layer

- input_channel = self.cfgs[0][2]

- patch_embed = torch.nn.Sequential(Conv2d_BN(3, input_channel // 2, 3, 2, 1), torch.nn.GELU(),

- Conv2d_BN(input_channel // 2, input_channel, 3, 2, 1))

- layers = [patch_embed]

- # building inverted residual blocks

- block = RepViTBlock

- for k, t, c, use_se, use_hs, s in self.cfgs:

- output_channel = _make_divisible(c , 8)

- exp_size = _make_divisible(input_channel * t, 8)

- layers.append(block(input_channel, exp_size, output_channel, k, s, use_se, use_hs))

- input_channel = output_channel

- self.features = nn.ModuleList(layers)

- self.width_list = [i.size(1) for i in self.forward(torch.randn(1, 3, 640, 640))]

- def forward(self, x):

- # x = self.features(x

- results = [None, None, None, None]

- temp = None

- i = None

- for index, f in enumerate(self.features):

- x = f(x)

- if index == 0:

- temp = x.size(1)

- i = 0

- elif x.size(1) == temp:

- results[i] = x

- else:

- temp = x.size(1)

- i = i + 1

- return results

- def repvit_m0_6(factor):

- """

- Constructs a MobileNetV3-Large model

- """

- cfgs = [

- [3, 2, 40, 1, 0, 1],

- [3, 2, 40, 0, 0, 1],

- [3, 2, 80, 0, 0, 2],

- [3, 2, 80, 1, 0, 1],

- [3, 2, 80, 0, 0, 1],

- [3, 2, 160, 0, 1, 2],

- [3, 2, 160, 1, 1, 1],

- [3, 2, 160, 0, 1, 1],

- [3, 2, 160, 1, 1, 1],

- [3, 2, 160, 0, 1, 1],

- [3, 2, 160, 1, 1, 1],

- [3, 2, 160, 0, 1, 1],

- [3, 2, 160, 1, 1, 1],

- [3, 2, 160, 0, 1, 1],

- [3, 2, 160, 0, 1, 1],

- [3, 2, 320, 0, 1, 2],

- [3, 2, 320, 1, 1, 1],

- ]

- model = RepViT(cfgs, factor)

- return model

- def repvit_m0_9(factor):

- """

- Constructs a MobileNetV3-Large model

- """

- cfgs = [

- # k, t, c, SE, HS, s

- [3, 2, 48, 1, 0, 1],

- [3, 2, 48, 0, 0, 1],

- [3, 2, 48, 0, 0, 1],

- [3, 2, 96, 0, 0, 2],

- [3, 2, 96, 1, 0, 1],

- [3, 2, 96, 0, 0, 1],

- [3, 2, 96, 0, 0, 1],

- [3, 2, 192, 0, 1, 2],

- [3, 2, 192, 1, 1, 1],

- [3, 2, 192, 0, 1, 1],

- [3, 2, 192, 1, 1, 1],

- [3, 2, 192, 0, 1, 1],

- [3, 2, 192, 1, 1, 1],

- [3, 2, 192, 0, 1, 1],

- [3, 2, 192, 1, 1, 1],

- [3, 2, 192, 0, 1, 1],

- [3, 2, 192, 1, 1, 1],

- [3, 2, 192, 0, 1, 1],

- [3, 2, 192, 1, 1, 1],

- [3, 2, 192, 0, 1, 1],

- [3, 2, 192, 1, 1, 1],

- [3, 2, 192, 0, 1, 1],

- [3, 2, 192, 0, 1, 1],

- [3, 2, 384, 0, 1, 2],

- [3, 2, 384, 1, 1, 1],

- [3, 2, 384, 0, 1, 1]

- ]

- model = RepViT(cfgs, factor)

- return model

- def repvit_m1_0(factor):

- """

- Constructs a MobileNetV3-Large model

- """

- cfgs = [

- # k, t, c, SE, HS, s

- [3, 2, 56, 1, 0, 1],

- [3, 2, 56, 0, 0, 1],

- [3, 2, 56, 0, 0, 1],

- [3, 2, 112, 0, 0, 2],

- [3, 2, 112, 1, 0, 1],

- [3, 2, 112, 0, 0, 1],

- [3, 2, 112, 0, 0, 1],

- [3, 2, 224, 0, 1, 2],

- [3, 2, 224, 1, 1, 1],

- [3, 2, 224, 0, 1, 1],

- [3, 2, 224, 1, 1, 1],

- [3, 2, 224, 0, 1, 1],

- [3, 2, 224, 1, 1, 1],

- [3, 2, 224, 0, 1, 1],

- [3, 2, 224, 1, 1, 1],

- [3, 2, 224, 0, 1, 1],

- [3, 2, 224, 1, 1, 1],

- [3, 2, 224, 0, 1, 1],

- [3, 2, 224, 1, 1, 1],

- [3, 2, 224, 0, 1, 1],

- [3, 2, 224, 1, 1, 1],

- [3, 2, 224, 0, 1, 1],

- [3, 2, 224, 0, 1, 1],

- [3, 2, 448, 0, 1, 2],

- [3, 2, 448, 1, 1, 1],

- [3, 2, 448, 0, 1, 1]

- ]

- model = RepViT(cfgs,factor=factor)

- return model

- def repvit_m1_1(factor):

- """

- Constructs a MobileNetV3-Large model

- """

- cfgs = [

- # k, t, c, SE, HS, s

- [3, 2, 64, 1, 0, 1],

- [3, 2, 64, 0, 0, 1],

- [3, 2, 64, 0, 0, 1],

- [3, 2, 128, 0, 0, 2],

- [3, 2, 128, 1, 0, 1],

- [3, 2, 128, 0, 0, 1],

- [3, 2, 128, 0, 0, 1],

- [3, 2, 256, 0, 1, 2],

- [3, 2, 256, 1, 1, 1],

- [3, 2, 256, 0, 1, 1],

- [3, 2, 256, 1, 1, 1],

- [3, 2, 256, 0, 1, 1],

- [3, 2, 256, 1, 1, 1],

- [3, 2, 256, 0, 1, 1],

- [3, 2, 256, 1, 1, 1],

- [3, 2, 256, 0, 1, 1],

- [3, 2, 256, 1, 1, 1],

- [3, 2, 256, 0, 1, 1],

- [3, 2, 256, 1, 1, 1],

- [3, 2, 256, 0, 1, 1],

- [3, 2, 256, 0, 1, 1],

- [3, 2, 512, 0, 1, 2],

- [3, 2, 512, 1, 1, 1],

- [3, 2, 512, 0, 1, 1]

- ]

- model = RepViT(cfgs,factor=factor)

- return model

- def repvit_m1_5(factor):

- """

- Constructs a MobileNetV3-Large model

- """

- cfgs = [

- # k, t, c, SE, HS, s

- [3, 2, 64, 1, 0, 1],

- [3, 2, 64, 0, 0, 1],

- [3, 2, 64, 1, 0, 1],

- [3, 2, 64, 0, 0, 1],

- [3, 2, 64, 0, 0, 1],

- [3, 2, 128, 0, 0, 2],

- [3, 2, 128, 1, 0, 1],

- [3, 2, 128, 0, 0, 1],

- [3, 2, 128, 1, 0, 1],

- [3, 2, 128, 0, 0, 1],

- [3, 2, 128, 0, 0, 1],

- [3, 2, 256, 0, 1, 2],

- [3, 2, 256, 1, 1, 1],

- [3, 2, 256, 0, 1, 1],

- [3, 2, 256, 1, 1, 1],

- [3, 2, 256, 0, 1, 1],

- [3, 2, 256, 1, 1, 1],

- [3, 2, 256, 0, 1, 1],

- [3, 2, 256, 1, 1, 1],

- [3, 2, 256, 0, 1, 1],

- [3, 2, 256, 1, 1, 1],

- [3, 2, 256, 0, 1, 1],

- [3, 2, 256, 1, 1, 1],

- [3, 2, 256, 0, 1, 1],

- [3, 2, 256, 1, 1, 1],

- [3, 2, 256, 0, 1, 1],

- [3, 2, 256, 1, 1, 1],

- [3, 2, 256, 0, 1, 1],

- [3, 2, 256, 1, 1, 1],

- [3, 2, 256, 0, 1, 1],

- [3, 2, 256, 1, 1, 1],

- [3, 2, 256, 0, 1, 1],

- [3, 2, 256, 1, 1, 1],

- [3, 2, 256, 0, 1, 1],

- [3, 2, 256, 1, 1, 1],

- [3, 2, 256, 0, 1, 1],

- [3, 2, 256, 0, 1, 1],

- [3, 2, 512, 0, 1, 2],

- [3, 2, 512, 1, 1, 1],

- [3, 2, 512, 0, 1, 1],

- [3, 2, 512, 1, 1, 1],

- [3, 2, 512, 0, 1, 1]

- ]

- model = RepViT(cfgs,factor=factor)

- return model

- def repvit_m2_3(factor):

- """

- Constructs a MobileNetV3-Large model

- """

- cfgs = [

- # k, t, c, SE, HS, s

- [3, 2, 80, 1, 0, 1],

- [3, 2, 80, 0, 0, 1],

- [3, 2, 80, 1, 0, 1],

- [3, 2, 80, 0, 0, 1],

- [3, 2, 80, 1, 0, 1],

- [3, 2, 80, 0, 0, 1],

- [3, 2, 80, 0, 0, 1],

- [3, 2, 160, 0, 0, 2],

- [3, 2, 160, 1, 0, 1],

- [3, 2, 160, 0, 0, 1],

- [3, 2, 160, 1, 0, 1],

- [3, 2, 160, 0, 0, 1],

- [3, 2, 160, 1, 0, 1],

- [3, 2, 160, 0, 0, 1],

- [3, 2, 160, 0, 0, 1],

- [3, 2, 320, 0, 1, 2],

- [3, 2, 320, 1, 1, 1],

- [3, 2, 320, 0, 1, 1],

- [3, 2, 320, 1, 1, 1],

- [3, 2, 320, 0, 1, 1],

- [3, 2, 320, 1, 1, 1],

- [3, 2, 320, 0, 1, 1],

- [3, 2, 320, 1, 1, 1],

- [3, 2, 320, 0, 1, 1],

- [3, 2, 320, 1, 1, 1],

- [3, 2, 320, 0, 1, 1],

- [3, 2, 320, 1, 1, 1],

- [3, 2, 320, 0, 1, 1],

- [3, 2, 320, 1, 1, 1],

- [3, 2, 320, 0, 1, 1],

- [3, 2, 320, 1, 1, 1],

- [3, 2, 320, 0, 1, 1],

- [3, 2, 320, 1, 1, 1],

- [3, 2, 320, 0, 1, 1],

- [3, 2, 320, 1, 1, 1],

- [3, 2, 320, 0, 1, 1],

- [3, 2, 320, 1, 1, 1],

- [3, 2, 320, 0, 1, 1],

- [3, 2, 320, 1, 1, 1],

- [3, 2, 320, 0, 1, 1],

- [3, 2, 320, 1, 1, 1],

- [3, 2, 320, 0, 1, 1],

- [3, 2, 320, 1, 1, 1],

- [3, 2, 320, 0, 1, 1],

- [3, 2, 320, 1, 1, 1],

- [3, 2, 320, 0, 1, 1],

- [3, 2, 320, 1, 1, 1],

- [3, 2, 320, 0, 1, 1],

- [3, 2, 320, 1, 1, 1],

- [3, 2, 320, 0, 1, 1],

- # [3, 2, 320, 1, 1, 1],

- # [3, 2, 320, 0, 1, 1],

- [3, 2, 320, 0, 1, 1],

- [3, 2, 640, 0, 1, 2],

- [3, 2, 640, 1, 1, 1],

- [3, 2, 640, 0, 1, 1],

- # [3, 2, 640, 1, 1, 1],

- # [3, 2, 640, 0, 1, 1]

- ]

- model = RepViT(cfgs,factor=factor)

- return model

- if __name__ == '__main__':

- model = repvit_m0_6(factor=0.25)

- inputs = torch.randn((1, 3, 640, 640))

- for i in model(inputs):

- print(i.size())

四、手把手教你添加RepViT网络结构

4.1 修改一

第一步还是建立文件,我们找到如下ultralytics/nn文件夹下建立一个目录名字呢就是'Addmodules'文件夹( 用群内的文件的话已经有了无需新建) !然后在其内部建立一个新的py文件将核心代码复制粘贴进去即可

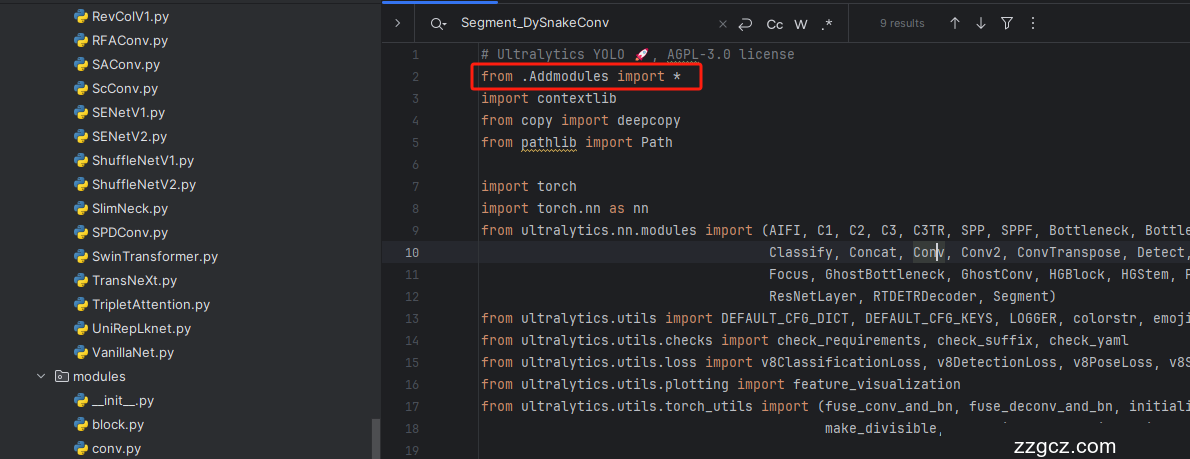

4.2 修改二

第二步我们在该目录下创建一个新的py文件名字为'__init__.py'( 用群内的文件的话已经有了无需新建) ,然后在其内部导入我们的检测头如下图所示。

4.3 修改三

第三步我门中到如下文件'ultralytics/nn/tasks.py'进行导入和注册我们的模块( 用群内的文件的话已经有了无需重新导入直接开始第四步即可) !

从今天开始以后的教程就都统一成这个样子了,因为我默认大家用了我群内的文件来进行修改!!

4.4 修改四

添加如下两行代码!!!

4.5 修改五

找到七百多行大概把具体看图片,按照图片来修改就行,添加红框内的部分,注意没有()只是 函数 名。

- elif m in {自行添加对应的模型即可,下面都是一样的}:

- m = m(*args)

- c2 = m.width_list # 返回通道列表

- backbone = True

4.6 修改六

下面的两个红框内都是需要改动的。

- if isinstance(c2, list):

- m_ = m

- m_.backbone = True

- else:

- m_ = nn.Sequential(*(m(*args) for _ in range(n))) if n > 1 else m(*args) # module

- t = str(m)[8:-2].replace('__main__.', '') # module type

- m.np = sum(x.numel() for x in m_.parameters()) # number params

- m_.i, m_.f, m_.type = i + 4 if backbone else i, f, t # attach index, 'from' index, type

4.7 修改七

如下的也需要修改,全部按照我的来。

代码如下把原先的代码替换了即可。

- if verbose:

- LOGGER.info(f'{i:>3}{str(f):>20}{n_:>3}{m.np:10.0f} {t:<45}{str(args):<30}') # print

- save.extend(x % (i + 4 if backbone else i) for x in ([f] if isinstance(f, int) else f) if x != -1) # append to savelist

- layers.append(m_)

- if i == 0:

- ch = []

- if isinstance(c2, list):

- ch.extend(c2)

- if len(c2) != 5:

- ch.insert(0, 0)

- else:

- ch.append(c2)

4.8 修改八

修改八和前面的都不太一样,需要修改前向传播中的一个部分, 已经离开了parse_model方法了。

可以在图片中开代码行数,没有离开task.py文件都是同一个文件。 同时这个部分有好几个前向传播都很相似,大家不要看错了, 是70多行左右的!!!,同时我后面提供了代码,大家直接复制粘贴即可,有时间我针对这里会出一个视频。

代码如下->

- def _predict_once(self, x, profile=False, visualize=False, embed=None):

- """

- Perform a forward pass through the network.

- Args:

- x (torch.Tensor): The input tensor to the model.

- profile (bool): Print the computation time of each layer if True, defaults to False.

- visualize (bool): Save the feature maps of the model if True, defaults to False.

- embed (list, optional): A list of feature vectors/embeddings to return.

- Returns:

- (torch.Tensor): The last output of the model.

- """

- y, dt, embeddings = [], [], [] # outputs

- for m in self.model:

- if m.f != -1: # if not from previous layer

- x = y[m.f] if isinstance(m.f, int) else [x if j == -1 else y[j] for j in m.f] # from earlier layers

- if profile:

- self._profile_one_layer(m, x, dt)

- if hasattr(m, 'backbone'):

- x = m(x)

- if len(x) != 5: # 0 - 5

- x.insert(0, None)

- for index, i in enumerate(x):

- if index in self.save:

- y.append(i)

- else:

- y.append(None)

- x = x[-1] # 最后一个输出传给下一层

- else:

- x = m(x) # run

- y.append(x if m.i in self.save else None) # save output

- if visualize:

- feature_visualization(x, m.type, m.i, save_dir=visualize)

- if embed and m.i in embed:

- embeddings.append(nn.functional.adaptive_avg_pool2d(x, (1, 1)).squeeze(-1).squeeze(-1)) # flatten

- if m.i == max(embed):

- return torch.unbind(torch.cat(embeddings, 1), dim=0)

- return x

到这里就完成了修改部分,但是这里面细节很多,大家千万要注意不要替换多余的代码,导致报错,也不要拉下任何一部,都会导致运行失败,而且报错很难排查!!!很难排查!!!

注意!!! 额外的修改!

关注我的其实都知道,我大部分的修改都是一样的,这个网络需要额外的修改一步,就是s一个参数,将下面的s改为640!!!即可完美运行!!

打印计算量问题解决方案

我们找到如下文件'ultralytics/utils/torch_utils.py'按照如下的图片进行修改,否则容易打印不出来计算量。

注意事项!!!

如果大家在验证的时候报错形状不匹配的错误可以固定 验证集 的图片尺寸,方法如下 ->

找到下面这个文件ultralytics/ models /yolo/detect/train.py然后其中有一个类是DetectionTrainer class中的build_dataset函数中的一个参数rect=mode == 'val'改为rect=False

五、RepViT的yaml文件

复制如下yaml文件进行运行!!!

5.1 RepViT 的yaml文件版本1

此版本训练信息:YOLO11-RepViT summary: 559 layers, 2,118,115 parameters, 2,118,099 gradients, 5.4 GFLOPs

使用说明:# 下面 [-1, 1, LSKNet, [0.25]] 参数位置的0.25是通道放缩的系数, YOLOv11N是0.25 YOLOv11S是0.5 YOLOv11M是1. YOLOv11l是1 YOLOv11是1.5大家根据自己训练的YOLO版本设定即可.

# 本文支持版本有 __all__ = ['repvit_m0_6','repvit_m0_9', 'repvit_m1_0', 'repvit_m1_1', 'repvit_m1_5', 'repvit_m2_3']

- # Ultralytics YOLO 🚀, AGPL-3.0 license

- # YOLO11 object detection model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/detect

- # Parameters

- nc: 80 # number of classes

- scales: # model compound scaling constants, i.e. 'model=yolo11n.yaml' will call yolo11.yaml with scale 'n'

- # [depth, width, max_channels]

- n: [0.50, 0.25, 1024] # summary: 319 layers, 2624080 parameters, 2624064 gradients, 6.6 GFLOPs

- s: [0.50, 0.50, 1024] # summary: 319 layers, 9458752 parameters, 9458736 gradients, 21.7 GFLOPs

- m: [0.50, 1.00, 512] # summary: 409 layers, 20114688 parameters, 20114672 gradients, 68.5 GFLOPs

- l: [1.00, 1.00, 512] # summary: 631 layers, 25372160 parameters, 25372144 gradients, 87.6 GFLOPs

- x: [1.00, 1.50, 512] # summary: 631 layers, 56966176 parameters, 56966160 gradients, 196.0 GFLOPs

- # 下面 [-1, 1, repvit_m0_6, [0.25]] 参数位置的0.25是通道放缩的系数, YOLOv11N是0.25 YOLOv11S是0.5 YOLOv11M是1. YOLOv11l是1 YOLOv11是1.5大家根据自己训练的YOLO版本设定即可.

- # 本文支持版本有 __all__ = ['repvit_m0_6','repvit_m0_9', 'repvit_m1_0', 'repvit_m1_1', 'repvit_m1_5', 'repvit_m2_3']

- # YOLO11n backbone

- backbone:

- # [from, repeats, module, args]

- - [-1, 1, repvit_m0_6, [0.5]] # 0-4 P1/2 这里是四层大家不要被yaml文件限制住了思维,不会画图进群看视频.

- - [-1, 1, SPPF, [1024, 5]] # 5

- - [-1, 2, C2PSA, [1024]] # 6

- # YOLO11n head

- head:

- - [-1, 1, nn.Upsample, [None, 2, "nearest"]]

- - [[-1, 3], 1, Concat, [1]] # cat backbone P4

- - [-1, 2, C3k2, [512, False]] # 9

- - [-1, 1, nn.Upsample, [None, 2, "nearest"]]

- - [[-1, 2], 1, Concat, [1]] # cat backbone P3

- - [-1, 2, C3k2, [256, False]] # 12 (P3/8-small)

- - [-1, 1, Conv, [256, 3, 2]]

- - [[-1, 9], 1, Concat, [1]] # cat head P4

- - [-1, 2, C3k2, [512, False]] # 15 (P4/16-medium)

- - [-1, 1, Conv, [512, 3, 2]]

- - [[-1, 6], 1, Concat, [1]] # cat head P5

- - [-1, 2, C3k2, [1024, True]] # 18 (P5/32-large)

- - [[12, 15, 18], 1, Detect, [nc]] # Detect(P3, P4, P5)

5.2 训练文件

- import warnings

- warnings.filterwarnings('ignore')

- from ultralytics import YOLO

- if __name__ == '__main__':

- model = YOLO('ultralytics/cfg/models/v8/yolov8-C2f-FasterBlock.yaml')

- # model.load('yolov8n.pt') # loading pretrain weights

- model.train(data=r'替换数据集yaml文件地址',

- # 如果大家任务是其它的'ultralytics/cfg/default.yaml'找到这里修改task可以改成detect, segment, classify, pose

- cache=False,

- imgsz=640,

- epochs=150,

- single_cls=False, # 是否是单类别检测

- batch=4,

- close_mosaic=10,

- workers=0,

- device='0',

- optimizer='SGD', # using SGD

- # resume='', # 如过想续训就设置last.pt的地址

- amp=False, # 如果出现训练损失为Nan可以关闭amp

- project='runs/train',

- name='exp',

- )

六、成功运行记录

下面是成功运行的截图,已经完成了有1个epochs的训练,图片太大截不全第2个epochs,这里改完之后打印出了点问题,但是不影响任何功能,后期我找时间修复一下这个问题。

七、本文总结

到此本文的正式分享内容就结束了,在这里给大家推荐我的YOLOv11改进有效涨点专栏,本专栏目前为新开的平均质量分98分,后期我会根据各种最新的前沿顶会进行论文复现,也会对一些老的改进机制进行补充, 目前本专栏免费阅读(暂时,大家尽早关注不迷路~) ,如果大家觉得本文帮助到你了,订阅本专栏,关注后续更多的更新~