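1. Introduction

This article introduces an improvement that replaces the YOLOv11 neck with the neck from Gold-YOLO. Gold-YOLO proposes a Gather-and-Distribute (GD) mechanism: features from different levels are fused globally, and the fused global information is then injected back into every level, which gives more efficient information exchange and fusion. This strengthens the fusion capability of the model's neck (the resulting neck is quite long, a bit like a giraffe's neck) and improves detection of objects of different sizes without a significant increase in latency. You are also welcome to subscribe to this column: it is updated with 3-5 new mechanisms every week, and subscribers get the files containing all of my modifications plus access to the discussion group.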


2. How Gold-YOLO Works

Paper: official paper link

Code: official code link

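2.1 Basic principles of Gold-YOLO

Gold-YOLO is an object detection model that improves information fusion through a Gather-and-Distribute (GD) mechanism. The mechanism uses convolution and self-attention operations to process information coming from different layers of the network. This lets Gold-YOLO fuse multi-scale features more effectively and strike a good balance between low latency and high accuracy. According to its authors, Gold-YOLO is also the first model in the YOLO series to adopt MAE-style pretraining, which improves learning efficiency and accuracy.

The basic principles of Gold-YOLO can be summarized as follows:

1. Gather-and-Distribute mechanism (GD): implemented with convolution and self-attention operations, it fuses information from different layers of the network.

2. Multi-scale feature fusion: the GD mechanism improves the fusion of multi-scale features, which raises detection accuracy.

3. MAE-style pretraining: adopted for the first time in the YOLO series, it improves learning efficiency and accuracy.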

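The figure below shows the Gold-YOLO architecture:

It consists of the following parts:

1. Backbone:

Performs initial processing of the input image and extracts features.

2. Low-stage gather-and-distribute (Low-GD) branch:

Aligns (Low-FAM) and fuses (Low-IFM) the larger feature maps.

3. High-stage gather-and-distribute (High-GD) branch:

Aligns (High-FAM) and fuses (High-IFM) the smaller feature maps.

4. Injection module (Inject):

Merges the fused information into each level and passes it on to the detection head.

5. Head:

Performs object detection on the fused features.

Summary: in this figure, Gold-YOLO's multi-scale feature fusion is realized by the low-stage (Low-GD) and high-stage (High-GD) gather-and-distribute branches. Both branches process feature maps of different sizes with a feature alignment module (FAM) and an information fusion module (IFM). This structure lets Gold-YOLO fuse information from different depths of the network, which is essential for accurately detecting objects of different sizes.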

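2.2 Gather-and-Distribute mechanism (GD)

The Gather-and-Distribute (GD) mechanism is the core of Gold-YOLO, and its purpose is to solve the information fusion problem. A feature alignment module (FAM) and an information fusion module (IFM) gather features from different levels, and an information injection module (Inject) distributes the fused information back to each level of the network. The model can therefore make better use of multi-scale features and improve detection accuracy while keeping latency low.

The figure below shows two key modules of the Gold-YOLO architecture:

(a) Information injection module (Inject): combines local and global features using convolutions and a Sigmoid-type activation, so that the feature map is enriched with global context, which is essential for accurate detection.

(b) Lightweight adjacent-layer fusion (LAF) module: improves the fusion of feature maps from adjacent layers. It uses average pooling and bilinear up/down-sampling to align and merge the feature maps, so that the local features of each layer also carry information from their direct neighbors.

Summary: the Inject module and the LAF module shown in the figure are the key components for efficient information fusion. By combining local and global features from different layers, they improve the model's detection performance.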
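To make the gating in Inject concrete, here is a minimal scalar sketch. It mirrors the interpolation branch of `Inject.forward` in the core code of Section 3, where the output is `local_feat * sig_act + global_feat` and the gate comes from the hard sigmoid `h_sigmoid`. The helper name `inject_scalar` is hypothetical and the numbers are made up; real inputs are feature maps, not single floats.

```python
def h_sigmoid(x: float) -> float:
    """Hard sigmoid: ReLU6(x + 3) / 6, the same formula as the h_sigmoid module."""
    return min(max(x + 3.0, 0.0), 6.0) / 6.0


def inject_scalar(local_feat: float, global_act: float, global_feat: float) -> float:
    """Scalar version of `local_feat * gate + global_feat` (hypothetical helper)."""
    gate = h_sigmoid(global_act)
    return local_feat * gate + global_feat


print(inject_scalar(2.0, 3.0, 0.5))   # gate saturates at 1.0
print(inject_scalar(2.0, -3.0, 0.5))  # gate is 0.0, only the global term remains
print(inject_scalar(2.0, 0.0, 0.5))   # gate is 0.5
```

The global branch therefore acts twice: as a multiplicative gate on the local feature and as an additive term.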

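2.3 Multi-scale feature fusion

Multi-scale feature fusion is a common technique in object detection models for improving detection of objects of different sizes. By combining features from different levels of the network, it captures information at scales ranging from coarse to fine. Low-level features usually contain more detail about small objects, while high-level features capture the semantics of large objects. Aggregating the features of these levels strengthens the model's representation, so that it can recognize and localize objects of all sizes more accurately.

The figure below shows the gather-and-distribute structure in Gold-YOLO.

The low-stage gather-and-distribute (Low-GD) branch in panel (a) consists of the low-stage feature alignment module (Low-FAM) and the low-stage information fusion module (Low-IFM). The high-stage gather-and-distribute (High-GD) branch in panel (b) consists of the high-stage feature alignment module (High-FAM) and the high-stage information fusion module (High-IFM).

Summary: these two branches are the parts of Gold-YOLO that handle feature maps of different sizes. Each branch first aligns the feature maps to a common size (FAM) and then fuses them (IFM), which improves detection performance across scales.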
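The alignment step can be followed with plain shape bookkeeping. The sketch below assumes a 640x640 input and the YOLO11n channel widths implied by the YAML in Section 5 (width multiplier 0.25); those concrete shapes are assumptions for illustration. The two helpers are hypothetical and only reproduce the size logic of `Low_FAM.forward` and `High_FAM.forward`, not the pooling itself.

```python
from typing import List, Tuple

Shape = Tuple[int, int, int]  # (channels, height, width)


def low_fam_shape(feats: List[Shape]) -> Shape:
    """Low_FAM resizes all four inputs to the third one's size and concatenates channels."""
    _, h, w = feats[2]
    return (sum(c for c, _, _ in feats), h, w)


def high_fam_shape(feats: List[Shape], stride: int = 1) -> Shape:
    """High_FAM pools every input to the last one's size, reduced by `stride`."""
    _, h, w = feats[-1]
    h = (h - 1) // stride + 1
    w = (w - 1) // stride + 1
    return (sum(c for c, _, _ in feats), h, w)


# Assumed backbone outputs of layers 2, 4, 6 and 10 for a 640x640 image.
b2, b3, b4, b5 = (64, 160, 160), (128, 80, 80), (128, 40, 40), (256, 20, 20)
print(low_fam_shape([b2, b3, b4, b5]))

# Assumed neck outputs p3 and p4, gathered together with b5 by High_FAM.
p3, p4 = (64, 80, 80), (128, 40, 40)
print(high_fam_shape([p3, p4, b5], stride=1))
```

Under these assumptions Low-FAM gathers everything at the 40x40 resolution and High-FAM at 20x20; the 448 channels of the second result match the `1792` embedding dimension of `High_IFM` in the YAML after scaling by 0.25.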

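2.4 MAE-style pretraining

MAE-style pretraining (MAE stands for Masked Autoencoder) is a self-supervised learning method used to improve learning efficiency and accuracy on large datasets. The model is trained to reconstruct randomly masked parts of its input, and in doing so it learns an internal representation of the data. Because this training does not depend on labels, the model can learn rich representations from unlabeled data. MAE-style pretraining is particularly effective in computer vision: it pushes the model to capture the structure and content of images, so that later supervised tasks such as object detection or image classification converge faster and perform better. In Gold-YOLO, MAE pretraining further improves the model's understanding of image features and thereby its detection accuracy.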
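The masking step described above can be sketched in a few lines. This is a minimal illustration, not Gold-YOLO's pretraining code: the function name is hypothetical, the 75% mask ratio is the value used in the original MAE paper, and the article does not state which settings Gold-YOLO uses.

```python
import random
from typing import List, Tuple


def random_patch_mask(num_patches: int, mask_ratio: float, seed: int = 0) -> Tuple[List[int], List[int]]:
    """Return (visible, masked) patch indices for one image."""
    rng = random.Random(seed)
    order = list(range(num_patches))
    rng.shuffle(order)
    num_masked = int(num_patches * mask_ratio)
    masked = sorted(order[:num_masked])
    visible = sorted(order[num_masked:])
    return visible, masked


# A 224x224 image cut into 16x16 patches gives 14 * 14 = 196 patches.
visible, masked = random_patch_mask(num_patches=196, mask_ratio=0.75)
print(len(visible), len(masked))
```

Only the visible patches are fed to the encoder; the training target is to reconstruct the masked ones.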

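3. Gold-YOLO Core Code

See Section 4 for how to use it. The code depends on the mmcv library, which is very sensitive to version matching. If installing it through pip fails, you can download a prebuilt package from the link below and install it locally with pip. Most of the detection heads I publish later will also need mmcv.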

    import torch
    from torch import nn
    import torch.nn.functional as F
    import numpy as np
    from mmcv.cnn import ConvModule, build_norm_layer

    __all__ = ('Low_FAM', 'Low_IFM', 'Split', 'SimConv', 'Low_LAF', 'Inject', 'RepBlock', 'High_FAM', 'High_IFM', 'High_LAF')


    class High_LAF(nn.Module):
        def forward(self, x1, x2):
            if torch.onnx.is_in_onnx_export():
                self.pool = onnx_AdaptiveAvgPool2d
            else:
                self.pool = nn.functional.adaptive_avg_pool2d
            N, C, H, W = x2.shape
            # output_size = np.array([H, W])
            output_size = [H, W]
            x1 = self.pool(x1, output_size)
            return torch.cat([x1, x2], 1)

    class High_IFM(nn.Module):
        def __init__(self, block_num, embedding_dim, key_dim, num_heads,
                     mlp_ratio=4., attn_ratio=2., drop=0., attn_drop=0., drop_path=0.,
                     norm_cfg=dict(type='BN', requires_grad=True),
                     act_layer=nn.ReLU6):
            super().__init__()
            self.block_num = block_num
            # The YAML passes drop_path as [max_rate, num_steps], e.g. [0.1, 2], which is
            # expanded into one rate per block. A plain float (the default) is used as-is;
            # indexing it, as the original line did unconditionally, would raise a TypeError.
            if isinstance(drop_path, (list, tuple)):
                drop_path = [x.item() for x in torch.linspace(0, drop_path[0], drop_path[1])]  # 0.1, 2
            self.transformer_blocks = nn.ModuleList()
            for i in range(self.block_num):
                self.transformer_blocks.append(top_Block(
                    embedding_dim, key_dim=key_dim, num_heads=num_heads,
                    mlp_ratio=mlp_ratio, attn_ratio=attn_ratio,
                    drop=drop, drop_path=drop_path[i] if isinstance(drop_path, list) else drop_path,
                    norm_cfg=norm_cfg, act_layer=act_layer))

        def forward(self, x):
            # token * N
            for i in range(self.block_num):
                x = self.transformer_blocks[i](x)
            return x

    class Mlp(nn.Module):
        def __init__(self, in_features, hidden_features=None, out_features=None, act_layer=nn.ReLU, drop=0.,
                     norm_cfg=dict(type='BN', requires_grad=True)):
            super().__init__()
            out_features = out_features or in_features
            hidden_features = hidden_features or in_features
            self.fc1 = Conv2d_BN(in_features, hidden_features, norm_cfg=norm_cfg)
            self.dwconv = nn.Conv2d(hidden_features, hidden_features, 3, 1, 1, bias=True, groups=hidden_features)
            self.act = act_layer()
            self.fc2 = Conv2d_BN(hidden_features, out_features, norm_cfg=norm_cfg)
            self.drop = nn.Dropout(drop)

        def forward(self, x):
            x = self.fc1(x)
            x = self.dwconv(x)
            x = self.act(x)
            x = self.drop(x)
            x = self.fc2(x)
            x = self.drop(x)
            return x

    class top_Block(nn.Module):
        def __init__(self, dim, key_dim, num_heads, mlp_ratio=4., attn_ratio=2., drop=0.,
                     drop_path=0., act_layer=nn.ReLU, norm_cfg=dict(type='BN2d', requires_grad=True)):
            super().__init__()
            self.dim = dim
            self.num_heads = num_heads
            self.mlp_ratio = mlp_ratio
            self.attn = Attention(dim, key_dim=key_dim, num_heads=num_heads, attn_ratio=attn_ratio, activation=act_layer,
                                  norm_cfg=norm_cfg)
            # NOTE: drop path for stochastic depth, we shall see if this is better than dropout here
            self.drop_path = DropPath(drop_path) if drop_path > 0. else nn.Identity()
            mlp_hidden_dim = int(dim * mlp_ratio)
            self.mlp = Mlp(in_features=dim, hidden_features=mlp_hidden_dim, act_layer=act_layer, drop=drop,
                           norm_cfg=norm_cfg)

        def forward(self, x1):
            x1 = x1 + self.drop_path(self.attn(x1))
            x1 = x1 + self.drop_path(self.mlp(x1))
            return x1

    def drop_path(x, drop_prob: float = 0., training: bool = False):
        """Drop paths (Stochastic Depth) per sample (when applied in main path of residual blocks).
        This is the same as the DropConnect impl I created for EfficientNet, etc networks, however,
        the original name is misleading as 'Drop Connect' is a different form of dropout in a separate paper...
        See discussion: https://github.com/tensorflow/tpu/issues/494#issuecomment-532968956 ... I've opted for
        changing the layer and argument names to 'drop path' rather than mix DropConnect as a layer name and use
        'survival rate' as the argument.
        """
        if drop_prob == 0. or not training:
            return x
        keep_prob = 1 - drop_prob
        shape = (x.shape[0],) + (1,) * (x.ndim - 1)  # work with diff dim tensors, not just 2D ConvNets
        random_tensor = keep_prob + torch.rand(shape, dtype=x.dtype, device=x.device)
        random_tensor.floor_()  # binarize
        output = x.div(keep_prob) * random_tensor
        return output


    class DropPath(nn.Module):
        """Drop paths (Stochastic Depth) per sample (when applied in main path of residual blocks).
        """

        def __init__(self, drop_prob=None):
            super(DropPath, self).__init__()
            self.drop_prob = drop_prob

        def forward(self, x):
            return drop_path(x, self.drop_prob, self.training)

    class Attention(torch.nn.Module):
        def __init__(self, dim, key_dim, num_heads,
                     attn_ratio=4,
                     activation=None,
                     norm_cfg=dict(type='BN', requires_grad=True), ):
            super().__init__()
            self.num_heads = num_heads
            self.scale = key_dim ** -0.5
            self.key_dim = key_dim
            self.nh_kd = nh_kd = key_dim * num_heads  # num_head key_dim
            self.d = int(attn_ratio * key_dim)
            self.dh = int(attn_ratio * key_dim) * num_heads
            self.attn_ratio = attn_ratio
            self.to_q = Conv2d_BN(dim, nh_kd, 1, norm_cfg=norm_cfg)
            self.to_k = Conv2d_BN(dim, nh_kd, 1, norm_cfg=norm_cfg)
            self.to_v = Conv2d_BN(dim, self.dh, 1, norm_cfg=norm_cfg)
            self.proj = torch.nn.Sequential(activation(), Conv2d_BN(
                self.dh, dim, bn_weight_init=0, norm_cfg=norm_cfg))

        def forward(self, x):  # x: (B, C, H, W)
            B, C, H, W = get_shape(x)
            # q: (B, heads, H*W, key_dim), k: (B, heads, key_dim, H*W), v: (B, heads, H*W, d)
            qq = self.to_q(x).reshape(B, self.num_heads, self.key_dim, H * W).permute(0, 1, 3, 2)
            kk = self.to_k(x).reshape(B, self.num_heads, self.key_dim, H * W)
            vv = self.to_v(x).reshape(B, self.num_heads, self.d, H * W).permute(0, 1, 3, 2)
            attn = torch.matmul(qq, kk)
            attn = attn.softmax(dim=-1)  # dim = k
            xx = torch.matmul(attn, vv)
            xx = xx.permute(0, 1, 3, 2).reshape(B, self.dh, H, W)
            xx = self.proj(xx)
            return xx


    def get_shape(tensor):
        shape = tensor.shape
        if torch.onnx.is_in_onnx_export():
            shape = [i.cpu().numpy() for i in shape]
        return shape

    class Conv2d_BN(nn.Sequential):
        def __init__(self, a, b, ks=1, stride=1, pad=0, dilation=1,
                     groups=1, bn_weight_init=1,
                     norm_cfg=dict(type='BN', requires_grad=True)):
            super().__init__()
            self.inp_channel = a
            self.out_channel = b
            self.ks = ks
            self.pad = pad
            self.stride = stride
            self.dilation = dilation
            self.groups = groups
            self.add_module('c', nn.Conv2d(
                a, b, ks, stride, pad, dilation, groups, bias=False))
            bn = build_norm_layer(norm_cfg, b)[1]
            nn.init.constant_(bn.weight, bn_weight_init)
            nn.init.constant_(bn.bias, 0)
            self.add_module('bn', bn)

    class High_FAM(nn.Module):
        def __init__(self, stride, pool_mode='onnx'):
            super().__init__()
            self.stride = stride
            if pool_mode == 'torch':
                self.pool = nn.functional.adaptive_avg_pool2d
            elif pool_mode == 'onnx':
                self.pool = onnx_AdaptiveAvgPool2d

        def forward(self, inputs):
            B, C, H, W = get_shape(inputs[-1])
            H = (H - 1) // self.stride + 1
            W = (W - 1) // self.stride + 1
            # output_size = np.array([H, W])
            output_size = [H, W]
            if not hasattr(self, 'pool'):
                self.pool = nn.functional.adaptive_avg_pool2d
            if torch.onnx.is_in_onnx_export():
                self.pool = onnx_AdaptiveAvgPool2d
            out = [self.pool(inp, output_size) for inp in inputs]
            return torch.cat(out, dim=1)

    class RepVGGBlock(nn.Module):
        '''RepVGGBlock is a basic rep-style block, including training and deploy status
        This code is based on https://github.com/DingXiaoH/RepVGG/blob/main/repvgg.py
        '''

        def __init__(self, in_channels, out_channels, kernel_size=3,
                     stride=1, padding=1, dilation=1, groups=1, padding_mode='zeros', deploy=False, use_se=False):
            """ Initialization of the class.
            Args:
                in_channels (int): Number of channels in the input image
                out_channels (int): Number of channels produced by the convolution
                kernel_size (int or tuple): Size of the convolving kernel
                stride (int or tuple, optional): Stride of the convolution. Default: 1
                padding (int or tuple, optional): Zero-padding added to both sides of
                    the input. Default: 1
                dilation (int or tuple, optional): Spacing between kernel elements. Default: 1
                groups (int, optional): Number of blocked connections from input
                    channels to output channels. Default: 1
                padding_mode (string, optional): Default: 'zeros'
                deploy: Whether to be deploy status or training status. Default: False
                use_se: Whether to use se. Default: False
            """
            super(RepVGGBlock, self).__init__()
            self.deploy = deploy
            self.groups = groups
            self.in_channels = in_channels
            self.out_channels = out_channels
            assert kernel_size == 3
            assert padding == 1
            padding_11 = padding - kernel_size // 2
            self.nonlinearity = nn.ReLU()
            if use_se:
                raise NotImplementedError("se block not supported yet")
            else:
                self.se = nn.Identity()
            if deploy:
                self.rbr_reparam = nn.Conv2d(in_channels=in_channels, out_channels=out_channels, kernel_size=kernel_size,
                                             stride=stride,
                                             padding=padding, dilation=dilation, groups=groups, bias=True,
                                             padding_mode=padding_mode)
            else:
                self.rbr_identity = nn.BatchNorm2d(
                    num_features=in_channels) if out_channels == in_channels and stride == 1 else None
                self.rbr_dense = conv_bn(in_channels=in_channels, out_channels=out_channels, kernel_size=kernel_size,
                                         stride=stride, padding=padding, groups=groups)
                self.rbr_1x1 = conv_bn(in_channels=in_channels, out_channels=out_channels, kernel_size=1, stride=stride,
                                       padding=padding_11, groups=groups)

        def forward(self, inputs):
            '''Forward process'''
            if hasattr(self, 'rbr_reparam'):
                return self.nonlinearity(self.se(self.rbr_reparam(inputs)))
            if self.rbr_identity is None:
                id_out = 0
            else:
                id_out = self.rbr_identity(inputs)
            return self.nonlinearity(self.se(self.rbr_dense(inputs) + self.rbr_1x1(inputs) + id_out))

        def get_equivalent_kernel_bias(self):
            kernel3x3, bias3x3 = self._fuse_bn_tensor(self.rbr_dense)
            kernel1x1, bias1x1 = self._fuse_bn_tensor(self.rbr_1x1)
            kernelid, biasid = self._fuse_bn_tensor(self.rbr_identity)
            return kernel3x3 + self._pad_1x1_to_3x3_tensor(kernel1x1) + kernelid, bias3x3 + bias1x1 + biasid

        def _pad_1x1_to_3x3_tensor(self, kernel1x1):
            if kernel1x1 is None:
                return 0
            else:
                return torch.nn.functional.pad(kernel1x1, [1, 1, 1, 1])

        def _fuse_bn_tensor(self, branch):
            if branch is None:
                return 0, 0
            if isinstance(branch, nn.Sequential):
                kernel = branch.conv.weight
                running_mean = branch.bn.running_mean
                running_var = branch.bn.running_var
                gamma = branch.bn.weight
                beta = branch.bn.bias
                eps = branch.bn.eps
            else:
                assert isinstance(branch, nn.BatchNorm2d)
                if not hasattr(self, 'id_tensor'):
                    input_dim = self.in_channels // self.groups
                    kernel_value = np.zeros((self.in_channels, input_dim, 3, 3), dtype=np.float32)
                    for i in range(self.in_channels):
                        kernel_value[i, i % input_dim, 1, 1] = 1
                    self.id_tensor = torch.from_numpy(kernel_value).to(branch.weight.device)
                kernel = self.id_tensor
                running_mean = branch.running_mean
                running_var = branch.running_var
                gamma = branch.weight
                beta = branch.bias
                eps = branch.eps
            std = (running_var + eps).sqrt()
            t = (gamma / std).reshape(-1, 1, 1, 1)
            return kernel * t, beta - running_mean * gamma / std

        def switch_to_deploy(self):
            if hasattr(self, 'rbr_reparam'):
                return
            kernel, bias = self.get_equivalent_kernel_bias()
            self.rbr_reparam = nn.Conv2d(in_channels=self.rbr_dense.conv.in_channels,
                                         out_channels=self.rbr_dense.conv.out_channels,
                                         kernel_size=self.rbr_dense.conv.kernel_size, stride=self.rbr_dense.conv.stride,
                                         padding=self.rbr_dense.conv.padding, dilation=self.rbr_dense.conv.dilation,
                                         groups=self.rbr_dense.conv.groups, bias=True)
            self.rbr_reparam.weight.data = kernel
            self.rbr_reparam.bias.data = bias
            for para in self.parameters():
                para.detach_()
            self.__delattr__('rbr_dense')
            self.__delattr__('rbr_1x1')
            if hasattr(self, 'rbr_identity'):
                self.__delattr__('rbr_identity')
            if hasattr(self, 'id_tensor'):
                self.__delattr__('id_tensor')
            self.deploy = True

    class RepBlock(nn.Module):
        '''
        RepBlock is a stage block with rep-style basic block
        '''

        def __init__(self, in_channels, out_channels, n=1, block=RepVGGBlock, basic_block=RepVGGBlock):
            super().__init__()
            self.conv1 = block(in_channels, out_channels)
            self.block = nn.Sequential(*(block(out_channels, out_channels) for _ in range(n - 1))) if n > 1 else None
            '''
            if block == BottleRep:
                self.conv1 = BottleRep(in_channels, out_channels, basic_block=basic_block, weight=True)
                n = n // 2
                self.block = nn.Sequential(
                    *(BottleRep(out_channels, out_channels, basic_block=basic_block, weight=True) for _ in
                      range(n - 1))) if n > 1 else None
            '''

        def forward(self, x):
            x = self.conv1(x)
            if self.block is not None:
                x = self.block(x)
            return x

    class Inject(nn.Module):
        def __init__(
                self,
                inp: int,
                oup: int,
                global_index: int,
                norm_cfg=dict(type='BN', requires_grad=True),
                activations=nn.ReLU6,
                global_inp=None,
        ) -> None:
            super().__init__()
            self.global_index = global_index
            self.norm_cfg = norm_cfg
            if not global_inp:
                global_inp = inp
            self.local_embedding = ConvModule(inp, oup, kernel_size=1, norm_cfg=self.norm_cfg, act_cfg=None)
            self.global_embedding = ConvModule(global_inp, oup, kernel_size=1, norm_cfg=self.norm_cfg, act_cfg=None)
            self.global_act = ConvModule(global_inp, oup, kernel_size=1, norm_cfg=self.norm_cfg, act_cfg=None)
            self.act = h_sigmoid()

        def forward(self, x_l, x_g):
            '''
            x_g: global features
            x_l: local features
            '''
            x_g = x_g[self.global_index]
            B, C, H, W = x_l.shape
            g_B, g_C, g_H, g_W = x_g.shape
            use_pool = H < g_H
            local_feat = self.local_embedding(x_l)
            global_act = self.global_act(x_g)
            global_feat = self.global_embedding(x_g)
            if use_pool:
                avg_pool = get_avg_pool()
                # output_size = np.array([H, W])
                output_size = [H, W]
                sig_act = avg_pool(global_act, output_size)
                global_feat = avg_pool(global_feat, output_size)
            else:
                sig_act = F.interpolate(self.act(global_act), size=(H, W), mode='bilinear', align_corners=False)
                global_feat = F.interpolate(global_feat, size=(H, W), mode='bilinear', align_corners=False)
            out = local_feat * sig_act + global_feat
            return out


    class h_sigmoid(nn.Module):
        def __init__(self, inplace=True):
            super(h_sigmoid, self).__init__()
            self.relu = nn.ReLU6(inplace=inplace)

        def forward(self, x):
            return self.relu(x + 3) / 6


    def get_avg_pool():
        if torch.onnx.is_in_onnx_export():
            avg_pool = onnx_AdaptiveAvgPool2d
        else:
            avg_pool = nn.functional.adaptive_avg_pool2d
        return avg_pool

    class Low_LAF(nn.Module):
        def __init__(self, in_channels, out_channels):
            super().__init__()
            self.cv1 = SimConv(in_channels, out_channels, 1, 1)
            self.cv_fuse = SimConv(round(out_channels * 2.5), out_channels, 1, 1)
            self.downsample = nn.functional.adaptive_avg_pool2d

        def forward(self, x):
            N, C, H, W = x[1].shape
            # output_size = np.array([H, W])
            output_size = [H, W]
            if torch.onnx.is_in_onnx_export():
                self.downsample = onnx_AdaptiveAvgPool2d
                output_size = np.array([H, W])
            x0 = self.downsample(x[0], output_size)
            x1 = self.cv1(x[1])
            x2 = F.interpolate(x[2], size=(H, W), mode='bilinear', align_corners=False)
            return self.cv_fuse(torch.cat((x0, x1, x2), dim=1))


    class SimConv(nn.Module):
        '''Normal Conv with ReLU VAN_activation'''

        def __init__(self, in_channels, out_channels, kernel_size, stride, groups=1, bias=False, padding=None):
            super().__init__()
            if padding is None:
                padding = kernel_size // 2
            self.conv = nn.Conv2d(
                in_channels,
                out_channels,
                kernel_size=kernel_size,
                stride=stride,
                padding=padding,
                groups=groups,
                bias=bias,
            )
            self.bn = nn.BatchNorm2d(out_channels)
            self.act = nn.ReLU()

        def forward(self, x):
            return self.act(self.bn(self.conv(x)))

        def forward_fuse(self, x):
            return self.act(self.conv(x))


    class Split(nn.Module):
        def __init__(self, trans_channels):
            super().__init__()
            self.trans_channels = trans_channels

        def forward(self, x):
            return x.split(self.trans_channels, dim=1)

    class Low_IFM(nn.Module):
        def __init__(self, in_channels, embed_dims, fuse_block_num, out_channels):
            super().__init__()
            self.conv1 = Conv(in_channels, embed_dims, kernel_size=1, stride=1, padding=0)
            # An empty ModuleList makes the loop in forward a no-op when fuse_block_num == 0.
            # The original fell back to the nn.Identity class itself, which cannot be iterated.
            self.block = nn.ModuleList([RepVGGBlock(embed_dims, embed_dims) for _ in range(fuse_block_num)])
            self.conv2 = Conv(embed_dims, out_channels, kernel_size=1, stride=1, padding=0)

        def forward(self, x):
            x = self.conv1(x)
            for b in self.block:
                x = b(x)
            out = self.conv2(x)
            return out


    class Low_FAM(nn.Module):
        def __init__(self):
            super().__init__()
            self.avg_pool = nn.functional.adaptive_avg_pool2d

        def forward(self, x):
            x_l, x_m, x_s, x_n = x
            B, C, H, W = x_s.shape
            # output_size = np.array([H, W])
            output_size = [H, W]
            if torch.onnx.is_in_onnx_export():
                self.avg_pool = onnx_AdaptiveAvgPool2d
            # Pool the two larger maps down and upsample the smallest one to x_s's size.
            x_l = self.avg_pool(x_l, output_size)
            x_m = self.avg_pool(x_m, output_size)
            x_n = F.interpolate(x_n, size=(H, W), mode='bilinear', align_corners=False)
            out = torch.cat([x_l, x_m, x_s, x_n], 1)
            return out

    def conv_bn(in_channels, out_channels, kernel_size, stride, padding, groups=1, bias=False):
        '''Basic cell for rep-style block, including conv and bn'''
        result = nn.Sequential()
        result.add_module('conv', nn.Conv2d(in_channels=in_channels, out_channels=out_channels,
                                            kernel_size=kernel_size, stride=stride, padding=padding, groups=groups,
                                            bias=bias))
        result.add_module('bn', nn.BatchNorm2d(num_features=out_channels))
        return result


    class Conv(nn.Module):
        '''Normal Conv with SiLU VAN_activation'''

        def __init__(self, in_channels, out_channels, kernel_size, stride, groups=1, bias=False, padding=None):
            super().__init__()
            if padding is None:
                padding = kernel_size // 2
            self.conv = nn.Conv2d(
                in_channels,
                out_channels,
                kernel_size=kernel_size,
                stride=stride,
                padding=padding,
                groups=groups,
                bias=bias,
            )
            self.bn = nn.BatchNorm2d(out_channels)
            self.act = nn.SiLU()

        def forward(self, x):
            return self.act(self.bn(self.conv(x)))


    def onnx_AdaptiveAvgPool2d(x, output_size):
        # Callers pass both plain lists ([H, W]) and np.array([H, W]); convert first so that
        # the element-wise `output_size - 1` below also works for lists.
        output_size = np.asarray(output_size)
        stride_size = np.floor(np.array(x.shape[-2:]) / output_size).astype(np.int32)
        kernel_size = np.array(x.shape[-2:]) - (output_size - 1) * stride_size
        avg = nn.AvgPool2d(kernel_size=list(kernel_size), stride=list(stride_size))
        x = avg(x)
        return x
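The arithmetic inside `onnx_AdaptiveAvgPool2d` is easy to check by hand. The sketch below reproduces it for a single axis in plain Python; the helper names `pool_params` and `pooled_length` are hypothetical and the sizes are illustrative. It shows that a fixed-window average pool with the derived stride and kernel always yields the requested output length, which is why it can stand in for adaptive pooling during ONNX export.

```python
from typing import Tuple


def pool_params(in_size: int, out_size: int) -> Tuple[int, int]:
    """Stride and kernel that onnx_AdaptiveAvgPool2d derives along one axis."""
    stride = in_size // out_size
    kernel = in_size - (out_size - 1) * stride
    return stride, kernel


def pooled_length(in_size: int, stride: int, kernel: int) -> int:
    """Output length of an unpadded pooling window sliding over in_size elements."""
    return (in_size - kernel) // stride + 1


for in_size, out_size in [(80, 40), (160, 40), (7, 3)]:
    stride, kernel = pool_params(in_size, out_size)
    print(in_size, out_size, stride, kernel, pooled_length(in_size, stride, kernel))
```

When the input size is not a multiple of the output size (the 7 to 3 case), the windows differ from those of true adaptive pooling, so small numerical differences between the two paths are to be expected.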

4. How to Use Gold-YOLO

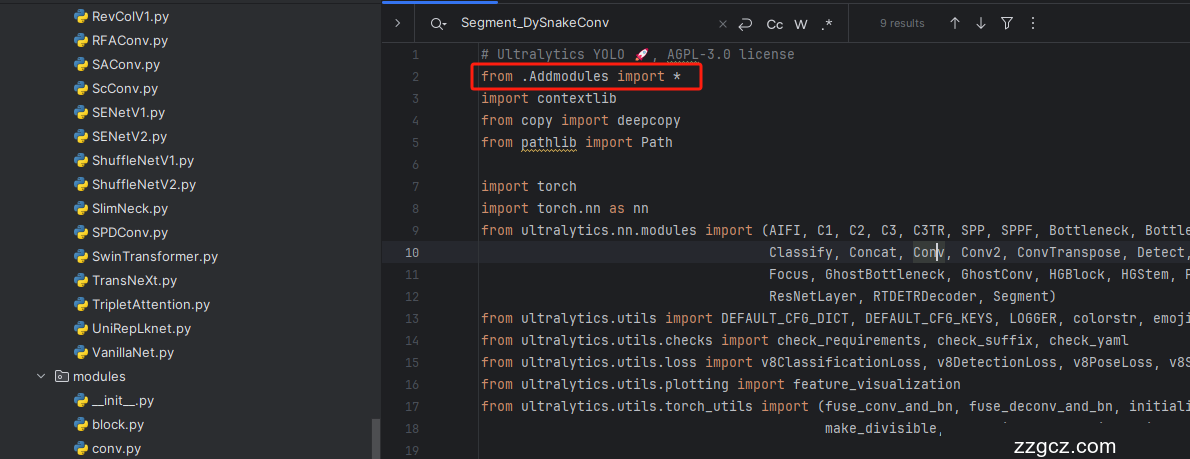

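4.1 Modification 1

First, create the file. Under the 'ultralytics/nn' folder, create a directory named 'Addmodules' (if you are using the files from the group, it already exists and you do not need to create it). Then create a new .py file inside it and paste the core code into it.

4.2 Modification 2

Second, create a new file named '__init__.py' in that directory (again, it already exists if you are using the group files) and import our modules in it, as shown in the figure below.

4.3 Modification 3

Third, open 'ultralytics/nn/tasks.py' and import and register our modules there (if you are using the group files, the import is already in place and you can go straight to step 4).

From now on all tutorials will follow this format, because I assume you are modifying the files from my group.

4.4 Modification 4

Modify the code as shown in the figure.

4.5 Modification 5

Add the code below as shown in the figure. Watch the indentation: an indentation error introduced while copying will make the code fail.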

    # --------------GOLD-YOLO--------------
    elif m in (nn.Conv2d, SimConv):
        c1, c2 = ch[f], args[0]
        if c2 != nc:  # if c2 not equal to number of classes (i.e. for Classify() output)
            c2 = make_divisible(c2 * width, 8)
        args = [c1, c2, *args[1:]]
    elif m in (High_IFM, ):
        c2 = args[1]
        if c2 != nc:  # if c2 not equal to number of classes (i.e. for Classify() output)
            c2 = make_divisible(c2 * width, 8)
        args = [args[0], c2, *args[2:]]
    elif m in (Low_FAM, High_FAM, High_LAF):
        c2 = sum(ch[x] for x in f)
    elif m is Low_IFM:
        c1, c2 = ch[f], args[2]
        if c2 != nc:  # if c2 not equal to number of classes (i.e. for Classify() output)
            c2 = make_divisible(min(c2, max_channels) * width, 8)
        args = [c1, *args[:-1], c2]
    elif m is Low_LAF:
        c1, c2 = ch[f[1]], args[0]
        if c2 != nc:  # if c2 not equal to number of classes (i.e. for Classify() output)
            c2 = make_divisible(min(c2, max_channels) * width, 8)
        args = [c1, c2, *args[1:]]
    elif m is Inject:
        global_index = args[1]
        c1, c2 = ch[f[1]][global_index], args[0]
        if c2 != nc:  # if c2 not equal to number of classes (i.e. for Classify() output)
            c2 = make_divisible(min(c2, max_channels) * width, 8)
        args = [c1, c2, global_index]
    elif m is RepBlock:
        c1, c2 = ch[f], args[0]
        if c2 != nc:  # if c2 not equal to number of classes (i.e. for Classify() output)
            c2 = make_divisible(min(c2, max_channels) * width, 8)
        nums_repeat = max(round(args[1] * depth), 1) if args[1] > 1 else args[1]  # depth gain
        args = [c1, c2, nums_repeat]
    elif m is Split:
        goldyolo = True
        c2 = []
        for arg in args:
            if arg != nc:  # if c2 not equal to number of classes (i.e. for Classify() output)
                c2.append(make_divisible(min(arg, max_channels) * width, 8))
        args = [c2]
    # --------------GOLD-YOLO--------------
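To see what one of these branches does to a YAML entry, here is a standalone sketch of the `RepBlock` case. `make_divisible` is re-implemented locally as a stand-in for the Ultralytics helper (rounding up to a multiple of the divisor, which is an assumption about its behavior), and `rewrite_repblock_args` is a hypothetical wrapper around the three lines of that branch.

```python
import math
from typing import List


def make_divisible(x: float, divisor: int) -> int:
    """Round x up to the nearest multiple of divisor (local stand-in, see lead-in)."""
    return math.ceil(x / divisor) * divisor


def rewrite_repblock_args(c1: int, args: List[int], nc: int, width: float,
                          depth: float, max_channels: int) -> List[int]:
    """Mimic the RepBlock branch: scale channels by width and repeats by depth."""
    c2 = args[0]
    if c2 != nc:
        c2 = make_divisible(min(c2, max_channels) * width, 8)
    repeats = max(round(args[1] * depth), 1) if args[1] > 1 else args[1]
    return [c1, c2, repeats]


# YAML entry `RepBlock, [512, 12]` under scale n = [0.50, 0.25, 1024],
# fed by a 128-channel input (the scaled output of the preceding Inject).
print(rewrite_repblock_args(c1=128, args=[512, 12], nc=80, width=0.25,
                            depth=0.50, max_channels=1024))
```

So the `[512, 12]` written in the YAML becomes a 128-channel block with 6 repeats at scale n; the other branches rewrite their arguments in the same spirit.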

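4.6 Modification 6

This step is only needed if you have applied the changes from my backbone articles. If you have not, skip it.

4.7 Modification 7

As before, add the code as shown in the figure. This change is required.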

    if m in [Inject, High_LAF]:
        # input nums
        m_.input_nums = len(f)
    else:
        m_.input_nums = 1

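4.8 Modification 8

This step is only needed if you have applied the changes from my backbone articles. If you have not, skip it.

4.9 Modification 9

If you have not modified the backbone, see 4.9.1. If you have, see 4.9.2.

4.9.1 Backbone not modified

The previous modifications were made in order, from top to bottom of the file. This one is not: go back to near the beginning of 'ultralytics/nn/tasks.py' and make the change there.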

    try:
        if m.input_nums > 1:
            # input nums more than one
            x = m(*x)  # run
        else:
            x = m(x)
    except AttributeError:
        # AttributeError: 'Conv' object has no attribute 'input_nums'
        x = m(x)

After the change, the code looks like the figure below.
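The dispatch rule itself can be tried out without the model. The sketch below uses two hypothetical stand-in classes instead of real layers and reads `input_nums` with `getattr` and a default of 1. This is an alternative formulation for illustration, not the author's code: it gives the same results as the try/except version for layers that lack the attribute, but it does not swallow an `AttributeError` raised inside a layer's own forward pass.

```python
class TwoInput:
    """Stand-in for a module such as Inject or High_LAF (hypothetical)."""
    input_nums = 2

    def __call__(self, a, b):
        return a + b


class OneInput:
    """Stand-in for an ordinary layer that never received `input_nums`."""

    def __call__(self, x):
        return x * 2


def run_layer(m, x):
    """Unpack x into positional arguments only when the layer declares several inputs."""
    if getattr(m, "input_nums", 1) > 1:
        return m(*x)
    return m(x)


print(run_layer(TwoInput(), [1, 2]))
print(run_layer(OneInput(), 5))
```

Layers such as `Low_FAM` or `Low_LAF` keep `input_nums = 1` because their `forward` takes the whole list as a single argument.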

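4.9.2 Backbone modified

The previous modifications were made in order, from top to bottom of the file. This one is not: go back to near the beginning of 'ultralytics/nn/tasks.py' and make the change there.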

    def _predict_once(self, x, profile=False, visualize=False, embed=None):
        """
        Perform a forward pass through the network.

        Args:
            x (torch.Tensor): The input tensor to the model.
            profile (bool): Print the computation time of each layer if True, defaults to False.
            visualize (bool): Save the feature maps of the model if True, defaults to False.
            embed (list, optional): A list of feature vectors/embeddings to return.

        Returns:
            (torch.Tensor): The last output of the model.
        """
        y, dt, embeddings = [], [], []  # outputs
        for m in self.model:
            if m.f != -1:  # if not from previous layer
                x = y[m.f] if isinstance(m.f, int) else [x if j == -1 else y[j] for j in m.f]  # from earlier layers
            if profile:
                self._profile_one_layer(m, x, dt)
            if hasattr(m, 'backbone'):
                try:
                    if m.input_nums > 1:
                        # input nums more than one
                        x = m(*x)  # run
                    else:
                        x = m(x)
                except AttributeError:
                    # AttributeError: 'Conv' object has no attribute 'input_nums'
                    x = m(x)
                if len(x) != 5:  # 0 - 5
                    x.insert(0, None)
                for index, i in enumerate(x):
                    if index in self.save:
                        y.append(i)
                    else:
                        y.append(None)
                x = x[-1]  # pass the last output on to the next layer
            else:
                try:
                    if m.input_nums > 1:
                        # input nums more than one
                        x = m(*x)  # run
                    else:
                        x = m(x)
                except AttributeError:
                    # AttributeError: 'Conv' object has no attribute 'input_nums'
                    x = m(x)
                y.append(x if m.i in self.save else None)  # save output
            if visualize:
                feature_visualization(x, m.type, m.i, save_dir=visualize)
            if embed and m.i in embed:
                embeddings.append(nn.functional.adaptive_avg_pool2d(x, (1, 1)).squeeze(-1).squeeze(-1))  # flatten
                if m.i == max(embed):
                    return torch.unbind(torch.cat(embeddings, 1), dim=0)
        return x

5. Gold-YOLO YAML File

5.1 YAML file

Note that the code in this article only supports the YOLOv11 n and s scales. The other scales raise errors because of incompatibilities.

    # Ultralytics YOLO 🚀, AGPL-3.0 license
    # YOLO11 object detection model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/detect

    # Parameters
    nc: 80 # number of classes
    scales: # model compound scaling constants, i.e. 'model=yolo11n.yaml' will call yolo11.yaml with scale 'n'
      # [depth, width, max_channels]
      n: [0.50, 0.25, 1024] # summary: 319 layers, 2624080 parameters, 2624064 gradients, 6.6 GFLOPs
      s: [0.50, 0.50, 1024] # summary: 319 layers, 9458752 parameters, 9458736 gradients, 21.7 GFLOPs
      m: [0.50, 1.00, 512] # summary: 409 layers, 20114688 parameters, 20114672 gradients, 68.5 GFLOPs
      l: [1.00, 1.00, 512] # summary: 631 layers, 25372160 parameters, 25372144 gradients, 87.6 GFLOPs
      x: [1.00, 1.50, 512] # summary: 631 layers, 56966176 parameters, 56966160 gradients, 196.0 GFLOPs

    # YOLO11n backbone
    backbone:
      # [from, repeats, module, args]
      - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2
      - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4
      - [-1, 2, C3k2, [256, False, 0.25]]
      - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8
      - [-1, 2, C3k2, [512, False, 0.25]]
      - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16
      - [-1, 2, C3k2, [512, True]]
      - [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32
      - [-1, 2, C3k2, [1024, True]]
      - [-1, 1, SPPF, [1024, 5]] # 9
      - [-1, 2, C2PSA, [1024]] # 10

    # YOLO11n head
    head:
      - [[2, 4, 6, -1], 1, Low_FAM, []]
      - [-1, 1, Low_IFM, [96, 3, 768]]
      - [-1, 1, Split, [512, 256]] # 13-low_global_info

      - [10, 1, SimConv, [512, 1, 1]] # 14-c5_half
      - [[4, 6, -1], 1, Low_LAF, [512]]
      - [[-1, 13], 1, Inject, [512, 0]]
      - [-1, 1, RepBlock, [512, 12]] # 17-p4
      - [-1, 1, SimConv, [256, 1, 1]] # 18-p4_half

      - [[2, 4, -1], 1, Low_LAF, [256]]
      - [[-1, 13], 1, Inject, [256, 1]]
      - [-1, 1, RepBlock, [256, 12]] # 21-p3

      - [[-1, 17, 10], 1, High_FAM, [1, 'torch']]
      - [-1, 1, High_IFM, [2, 1792, 8, 4, 1, 2, 0, 0, [0.1, 2]]]
      - [-1, 1, nn.Conv2d, [1536, 1, 1, 0]]
      - [-1, 1, Split, [512, 1024]] # 25-high_global_info

      - [[21, 18], 1, High_LAF, []]
      - [[-1, 25], 1, Inject, [512, 0]]
      - [-1, 1, RepBlock, [512, 12]] # 28-n4

      - [[-1, 14], 1, High_LAF, []]
      - [[-1, 25], 1, Inject, [1024, 1]]
      - [-1, 1, RepBlock, [1024, 12]] # 31-n5

      - [[21, 28, 31], 1, Detect, [nc]] # Detect(P3, N4, N5)
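A quick consistency check may help explain why only the n and s scales run. `Split` calls `x.split(sizes, dim=1)`, which requires the sizes to add up to the channel count of its input. The sketch below recomputes those numbers from the YAML with the scaling rules of the `parse_model` branches in Section 4.5. It is an illustration under assumptions: `make_divisible` is a local stand-in that rounds up to a multiple of 8, and the mismatch it reports is only one plausible cause of the incompatibility the article mentions.

```python
import math

# [depth, width, max_channels] per scale, copied from the YAML above.
SCALES = {
    "n": (0.50, 0.25, 1024),
    "s": (0.50, 0.50, 1024),
    "m": (0.50, 1.00, 512),
    "l": (1.00, 1.00, 512),
    "x": (1.00, 1.50, 512),
}


def make_divisible(x: float, divisor: int) -> int:
    """Round x up to the nearest multiple of divisor (local stand-in)."""
    return math.ceil(x / divisor) * divisor


def scaled(c: int, width: float, max_channels: int) -> int:
    """Channel scaling with clamping, as in the Low_IFM and Split branches."""
    return make_divisible(min(c, max_channels) * width, 8)


def splits_match(scale: str) -> bool:
    """True when both Split layers receive exactly the channels they split off."""
    _, width, max_ch = SCALES[scale]
    low_in = scaled(768, width, max_ch)                      # Low_IFM output
    low_out = sum(scaled(c, width, max_ch) for c in (512, 256))
    high_in = make_divisible(1536 * width, 8)                # nn.Conv2d branch, no clamp
    high_out = sum(scaled(c, width, max_ch) for c in (512, 1024))
    return low_in == low_out and high_in == high_out


for name in SCALES:
    print(name, splits_match(name))
```

With `max_channels = 512`, the clamp is applied to some layers and not to others, so the split sizes stop adding up for m, l and x.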

5.2 Running the code

Create a run.py file in the root directory of the YOLOv11 project.

    import warnings
    warnings.filterwarnings('ignore')
    from ultralytics import YOLO

    if __name__ == '__main__':
        model = YOLO("replace with the path to your model yaml file")
        # The original listing loaded 'yolov8n.pt'; for a YOLOv11 n model the matching
        # pretrained file is 'yolo11n.pt'. Replace it with the weights for your own scale.
        model.load('yolo11n.pt')
        model.train(data=r'replace with the path to your dataset yaml file',
                    cache=False,
                    imgsz=640,
                    epochs=150,
                    batch=16,
                    close_mosaic=0,
                    workers=0,
                    device=0,
                    optimizer='SGD',  # using SGD
                    amp=False,  # close amp
                    )

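5.3 Screenshot of a successful run

6. Summary

That concludes this article. I recommend my column on effective YOLOv11 improvements: it is newly opened, with an average quality score of 98. I will keep reproducing papers from the latest top conferences and will also supplement some of the older improvement mechanisms. The column is currently free to read (for now, so follow early). If this article helped you, subscribe to the column and follow along for further updates.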