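1. Introduction

This post introduces an improved version of the ACmix self-attention mechanism. Its core idea is that most of the computation in both a traditional convolution and a self-attention module can be carried out by 1x1 convolutions. ACmix first projects the input feature map with 1x1 convolutions to produce a set of intermediate features, then reuses and aggregates those features under two paradigms: self-attention and convolution. ACmix can therefore draw on the global perception of self-attention while capturing local features through convolution, improving model performance at a low computational cost. This post also includes a second-stage innovation built on the C2PSA mechanism!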

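2. ACmix Framework and Principles

Official paper: link to the official paper

Official code: link to the official code

2.1 Basic Principles of ACmix

ACmix is a hybrid model that combines the strengths of self-attention and convolution. Most of the computation in both operations can be expressed as 1x1 convolutions, so ACmix projects the input feature map once with 1x1 convolutions and then reuses and aggregates the resulting intermediate features along a self-attention path and a convolution path. This gives the model the global perception of self-attention and the local feature extraction of convolution while keeping the computational cost low.

The main improvements in ACmix fall into two points:

1. Integration of self-attention and convolution: the two techniques are fused so that the model benefits from both.

2. Decomposition and reconstruction of operations: the operations inside self-attention and convolution are decomposed and rebuilt in 1×1 convolution form, which improves computational efficiency.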

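2.1.1 Integration of Self-Attention and Convolution

According to the paper, self-attention and convolution are integrated as follows:

Feature decomposition:

The query, key, and value of self-attention, as well as the convolution operation, are decomposed into features produced by 1x1 convolutions.

Shared computation:

Convolution and self-attention share the same 1x1 convolutions, which removes duplicated computation.

Feature fusion:

In ACmix, the features produced by the convolution path and the self-attention path are fused by summation, strengthening the model's feature extraction.

Modular design:

Thanks to its modular design, ACmix can be inserted flexibly into different network architectures to improve their representational power.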

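The overview figure in the paper illustrates the main concepts of ACmix by comparing the structure and computational complexity of convolution, self-attention, and ACmix:

(a) Convolution: a standard convolution, made up of k² 1x1 convolutions (k being the kernel size) followed by the convolution's aggregation step.

(b) Self-attention: three 1x1 convolutions, the linear projections that produce the query, key, and value, followed by the self-attention aggregation.

(c) ACmix (the paper's method): combines the convolution and self-attention aggregations while sharing the 1x1 convolutions between them, which reduces computational overhead and leaves only lightweight aggregation operations to add.

Overall, ACmix shares the expensive part of the computation (the 1x1 convolutions) and combines two different aggregation operations, reducing the computational complexity with respect to the feature channels.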
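To put rough numbers on the sharing argument, here is a back-of-the-envelope sketch in plain Python. It counts projection weights only, assumes equal input and output channel counts, and ignores biases and the aggregation stage; the function names are illustrative and do not come from the paper or the official code.

```python
def conv_projection_weights(channels: int, kernel_size: int) -> int:
    """Weights of a k x k convolution viewed as k*k separate 1x1 convolutions."""
    return kernel_size * kernel_size * channels * channels


def attention_projection_weights(channels: int) -> int:
    """Weights of the three 1x1 convolutions that produce query, key, and value."""
    return 3 * channels * channels


def separate_vs_shared(channels: int, kernel_size: int = 3) -> tuple[int, int]:
    """Projection weights when each branch keeps its own 1x1 convolutions,
    versus when both branches reuse the three q/k/v projections."""
    separate = conv_projection_weights(channels, kernel_size) + attention_projection_weights(channels)
    shared = attention_projection_weights(channels)
    return separate, shared


if __name__ == "__main__":
    separate, shared = separate_vs_shared(channels=64, kernel_size=3)
    print(separate, shared)  # 49152 12288
```

The shared figure leaves out the small extra layers the convolution path still needs (in the code later in this post, `fc` and `dep_conv`), so treat it as an illustration of the trend rather than an exact parameter count.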

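2.1.2 Decomposition and Reconstruction of Operations

In ACmix, decomposition and reconstruction means splitting the traditional convolution and self-attention operations apart and rebuilding them in a more efficient form. This is done in the following steps:

Decomposing convolution and self-attention:

A standard convolution kernel is decomposed into several 1×1 kernels, one per kernel position, and the generation of the query, key, and value in self-attention is likewise expressed as 1×1 convolutions.

Reconstructing as a hybrid module:

The decomposed convolution and self-attention operations are rebuilt into a single hybrid module that has both the spatial feature extraction of convolution and the global information aggregation of self-attention.

Improving efficiency:

Decomposing and reconstructing in this way removes redundant computation, improves efficiency, and lowers the complexity of the model.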

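The structure figure in the paper shows the hybrid module proposed by ACmix:

(a) Convolution: a 3x3 convolution is decomposed into 1x1 convolutions, and the figure shows how the feature map is transformed along the way.

(b) Self-attention: the input features are first turned into the query, key, and value by 1x1 convolutions, and the attention weights are then computed by similarity matching.

(c) ACmix: combines (a) and (b). In the first stage, three 1x1 convolutions project the input feature map; in the second stage, the features from the two paths are added to form the final output.

The right-hand side of the figure shows the flow of the ACmix module, emphasizing how the two mechanisms are fused and giving the computational complexity of each block.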
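The decomposition in (a) can be checked numerically. The sketch below, which assumes PyTorch, a stride of 1, zero padding, and an odd kernel size, rebuilds a 3x3 convolution as a sum of shifted 1x1 convolutions and compares it with `F.conv2d`. The function name `conv_via_shifted_1x1` is made up for this example; it is not part of ACmix or PyTorch.

```python
import torch
import torch.nn.functional as F


def conv_via_shifted_1x1(x: torch.Tensor, weight: torch.Tensor) -> torch.Tensor:
    """Stride-1, zero-padded k x k convolution computed as a sum of shifted 1x1 convolutions."""
    k = weight.shape[-1]
    pad = k // 2
    h, w = x.shape[-2:]
    x_pad = F.pad(x, (pad, pad, pad, pad))  # zero-pad width and height
    out = None
    for i in range(k):
        for j in range(k):
            # 1x1 kernel holding the weights of kernel position (i, j).
            w_1x1 = weight[:, :, i:i + 1, j:j + 1].contiguous()
            proj = F.conv2d(x_pad, w_1x1)            # stage 1: 1x1 projection
            shifted = proj[:, :, i:i + h, j:j + w]   # stage 2: shift by (i, j) ...
            out = shifted if out is None else out + shifted  # ... and sum
    return out


if __name__ == "__main__":
    torch.manual_seed(0)
    x = torch.randn(2, 4, 8, 8)        # (batch, C_in, H, W)
    weight = torch.randn(6, 4, 3, 3)   # (C_out, C_in, k, k)
    reference = F.conv2d(x, weight, padding=1)
    decomposed = conv_via_shifted_1x1(x, weight)
    print(torch.allclose(reference, decomposed, atol=1e-4))
```

The ACmix code in the next section does not slice tensors like this. It performs the shift-and-sum with a grouped convolution (`dep_conv`) whose weights are initialized to one-hot shift kernels in `reset_parameters`.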

3. Core Code of ACmix

See the next section for how to use it.

    import torch
    import torch.nn as nn

    __all__ = ['ACmix', 'C2PSA_ACmix']


    def position(H, W, type, is_cuda=True):
        """Build a (1, 2, H, W) grid of x/y coordinates normalized to [-1, 1]."""
        if is_cuda:
            loc_w = torch.linspace(-1.0, 1.0, W).cuda().unsqueeze(0).repeat(H, 1).to(type)
            loc_h = torch.linspace(-1.0, 1.0, H).cuda().unsqueeze(1).repeat(1, W).to(type)
        else:
            # Cast on CPU as well so the grid matches the input dtype.
            loc_w = torch.linspace(-1.0, 1.0, W).unsqueeze(0).repeat(H, 1).to(type)
            loc_h = torch.linspace(-1.0, 1.0, H).unsqueeze(1).repeat(1, W).to(type)
        loc = torch.cat([loc_w.unsqueeze(0), loc_h.unsqueeze(0)], 0).unsqueeze(0)
        return loc


    def stride(x, stride):
        """Subsample the spatial dimensions of x by the given stride."""
        b, c, h, w = x.shape
        return x[:, :, ::stride, ::stride]


    def init_rate_half(tensor):
        if tensor is not None:
            tensor.data.fill_(0.5)


    def init_rate_0(tensor):
        if tensor is not None:
            tensor.data.fill_(0.)


    class ACmix(nn.Module):
        def __init__(self, in_planes, kernel_att=7, head=4, kernel_conv=3, stride=1, dilation=1):
            super(ACmix, self).__init__()
            if head == 0:  # Guard against head == 0, which would otherwise raise an error.
                head = 1  # Fall back to a single head when head == 0.
            out_planes = in_planes
            self.in_planes = in_planes
            self.out_planes = out_planes
            self.head = head
            self.kernel_att = kernel_att
            self.kernel_conv = kernel_conv
            self.stride = stride
            self.dilation = dilation
            # Learnable weights of the attention branch and the convolution branch.
            self.rate1 = torch.nn.Parameter(torch.Tensor(1))
            self.rate2 = torch.nn.Parameter(torch.Tensor(1))
            self.head_dim = self.out_planes // self.head

            # Shared 1x1 projections (query, key, value) reused by both branches.
            self.conv1 = nn.Conv2d(in_planes, out_planes, kernel_size=1)
            self.conv2 = nn.Conv2d(in_planes, out_planes, kernel_size=1)
            self.conv3 = nn.Conv2d(in_planes, out_planes, kernel_size=1)
            self.conv_p = nn.Conv2d(2, self.head_dim, kernel_size=1)

            self.padding_att = (self.dilation * (self.kernel_att - 1) + 1) // 2
            self.pad_att = torch.nn.ReflectionPad2d(self.padding_att)
            self.unfold = nn.Unfold(kernel_size=self.kernel_att, padding=0, stride=self.stride)
            self.softmax = torch.nn.Softmax(dim=1)

            self.fc = nn.Conv2d(3 * self.head, self.kernel_conv * self.kernel_conv, kernel_size=1, bias=False)
            self.dep_conv = nn.Conv2d(self.kernel_conv * self.kernel_conv * self.head_dim, out_planes,
                                      kernel_size=self.kernel_conv, bias=True, groups=self.head_dim, padding=1,
                                      stride=stride)

            self.reset_parameters()

        def reset_parameters(self):
            init_rate_half(self.rate1)
            init_rate_half(self.rate2)
            # One-hot kernels: kernel i picks out position (i // k, i % k), i.e. a spatial shift.
            kernel = torch.zeros(self.kernel_conv * self.kernel_conv, self.kernel_conv, self.kernel_conv)
            for i in range(self.kernel_conv * self.kernel_conv):
                kernel[i, i // self.kernel_conv, i % self.kernel_conv] = 1.
            kernel = kernel.squeeze(0).repeat(self.out_planes, 1, 1, 1)
            self.dep_conv.weight = nn.Parameter(data=kernel, requires_grad=True)
            # Note: init_rate_0 returns None, so this assignment leaves dep_conv without a bias.
            self.dep_conv.bias = init_rate_0(self.dep_conv.bias)

        def forward(self, x):
            q, k, v = self.conv1(x), self.conv2(x), self.conv3(x)
            scaling = float(self.head_dim) ** -0.5
            b, c, h, w = q.shape
            h_out, w_out = h // self.stride, w // self.stride

            # ### att
            # ## positional encoding
            pe = self.conv_p(position(h, w, x.dtype, x.is_cuda))

            q_att = q.view(b * self.head, self.head_dim, h, w) * scaling
            k_att = k.view(b * self.head, self.head_dim, h, w)
            v_att = v.view(b * self.head, self.head_dim, h, w)

            if self.stride > 1:
                q_att = stride(q_att, self.stride)
                q_pe = stride(pe, self.stride)
            else:
                q_pe = pe

            unfold_k = self.unfold(self.pad_att(k_att)).view(b * self.head, self.head_dim,
                                                             self.kernel_att * self.kernel_att, h_out,
                                                             w_out)  # b*head, head_dim, k_att^2, h_out, w_out
            unfold_rpe = self.unfold(self.pad_att(pe)).view(1, self.head_dim, self.kernel_att * self.kernel_att, h_out,
                                                            w_out)  # 1, head_dim, k_att^2, h_out, w_out

            att = (q_att.unsqueeze(2) * (unfold_k + q_pe.unsqueeze(2) - unfold_rpe)).sum(
                1)  # (b*head, head_dim, 1, h_out, w_out) * (b*head, head_dim, k_att^2, h_out, w_out) -> (b*head, k_att^2, h_out, w_out)
            att = self.softmax(att)

            out_att = self.unfold(self.pad_att(v_att)).view(b * self.head, self.head_dim, self.kernel_att * self.kernel_att,
                                                            h_out, w_out)
            out_att = (att.unsqueeze(1) * out_att).sum(2).view(b, self.out_planes, h_out, w_out)

            ## conv
            f_all = self.fc(torch.cat(
                [q.view(b, self.head, self.head_dim, h * w), k.view(b, self.head, self.head_dim, h * w),
                 v.view(b, self.head, self.head_dim, h * w)], 1))
            f_conv = f_all.permute(0, 2, 1, 3).reshape(x.shape[0], -1, x.shape[-2], x.shape[-1])

            out_conv = self.dep_conv(f_conv)

            # Weighted sum of the attention branch and the convolution branch.
            return self.rate1 * out_att + self.rate2 * out_conv


    def autopad(k, p=None, d=1):  # kernel, padding, dilation
        """Pad to 'same' shape outputs."""
        if d > 1:
            k = d * (k - 1) + 1 if isinstance(k, int) else [d * (x - 1) + 1 for x in k]  # actual kernel-size
        if p is None:
            p = k // 2 if isinstance(k, int) else [x // 2 for x in k]  # auto-pad
        return p


    class Conv(nn.Module):
        """Standard convolution with args(ch_in, ch_out, kernel, stride, padding, groups, dilation, activation)."""

        default_act = nn.SiLU()  # default activation

        def __init__(self, c1, c2, k=1, s=1, p=None, g=1, d=1, act=True):
            """Initialize Conv layer with given arguments including activation."""
            super().__init__()
            self.conv = nn.Conv2d(c1, c2, k, s, autopad(k, p, d), groups=g, dilation=d, bias=False)
            self.bn = nn.BatchNorm2d(c2)
            self.act = self.default_act if act is True else act if isinstance(act, nn.Module) else nn.Identity()

        def forward(self, x):
            """Apply convolution, batch normalization and activation to input tensor."""
            return self.act(self.bn(self.conv(x)))

        def forward_fuse(self, x):
            """Apply convolution and activation, skipping batch normalization."""
            return self.act(self.conv(x))


    class PSABlock(nn.Module):
        """
        PSABlock class implementing a Position-Sensitive Attention block for neural networks.

        This class encapsulates the functionality for applying multi-head attention and feed-forward neural network layers
        with optional shortcut connections.

        Attributes:
            attn (ACmix): Attention module (ACmix replaces the default multi-head attention).
            ffn (nn.Sequential): Feed-forward neural network module.
            add (bool): Flag indicating whether to add shortcut connections.

        Methods:
            forward: Performs a forward pass through the PSABlock, applying attention and feed-forward layers.
        """

        def __init__(self, c, attn_ratio=0.5, num_heads=4, shortcut=True) -> None:
            """Initializes the PSABlock with attention and feed-forward layers for enhanced feature extraction."""
            super().__init__()
            # attn_ratio is kept for signature compatibility; ACmix does not use it.
            self.attn = ACmix(c, head=num_heads)
            self.ffn = nn.Sequential(Conv(c, c * 2, 1), Conv(c * 2, c, 1, act=False))
            self.add = shortcut

        def forward(self, x):
            """Executes a forward pass through PSABlock, applying attention and feed-forward layers to the input tensor."""
            x = x + self.attn(x) if self.add else self.attn(x)
            x = x + self.ffn(x) if self.add else self.ffn(x)
            return x


    class C2PSA_ACmix(nn.Module):
        """
        C2PSA module with attention mechanism for enhanced feature extraction and processing.

        This module implements a convolutional block with attention mechanisms to enhance feature extraction and processing
        capabilities. It includes a series of PSABlock modules for self-attention and feed-forward operations.

        Attributes:
            c (int): Number of hidden channels.
            cv1 (Conv): 1x1 convolution layer that maps the input channels to 2*c.
            cv2 (Conv): 1x1 convolution layer that maps the concatenated 2*c channels back to c1.
            m (nn.Sequential): Sequential container of PSABlock modules for attention and feed-forward operations.

        Methods:
            forward: Performs a forward pass through the C2PSA module, applying attention and feed-forward operations.

        Notes:
            This module essentially is the same as PSA module, but refactored to allow stacking more PSABlock modules.
        """

        def __init__(self, c1, c2, n=1, e=0.5):
            """Initializes the C2PSA module with specified input/output channels, number of layers, and expansion ratio."""
            super().__init__()
            assert c1 == c2
            self.c = int(c1 * e)
            self.cv1 = Conv(c1, 2 * self.c, 1, 1)
            self.cv2 = Conv(2 * self.c, c1, 1)

            # self.c // 64 is 0 when self.c < 64; ACmix then falls back to a single head.
            self.m = nn.Sequential(*(PSABlock(self.c, attn_ratio=0.5, num_heads=self.c // 64) for _ in range(n)))

        def forward(self, x):
            """Processes the input tensor 'x' through a series of PSA blocks and returns the transformed tensor."""
            a, b = self.cv1(x).split((self.c, self.c), dim=1)
            b = self.m(b)
            return self.cv2(torch.cat((a, b), 1))


    if __name__ == "__main__":
        # Sample input: batch of 1, 64 channels, 240x240
        image_size = (1, 64, 240, 240)
        image = torch.rand(*image_size)

        # Model
        model = C2PSA_ACmix(64, 64)

        out = model(image)
        print(out.size())
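The attention branch above pads the key and value maps by reflection and then unfolds them into `kernel_att` x `kernel_att` windows. The plain-Python sketch below reproduces only the size arithmetic, to show why the unfolded maps keep the spatial size and why the 240x240 smoke test is memory-hungry. It assumes the unfold runs with dilation 1 and no extra padding, as in the constructor above; the helper names are illustrative.

```python
def attention_padding(kernel_att: int, dilation: int = 1) -> int:
    """Same expression as self.padding_att in ACmix.__init__."""
    return (dilation * (kernel_att - 1) + 1) // 2


def unfolded_positions(size: int, kernel_att: int, stride: int = 1) -> int:
    """Sliding-window positions along one axis after reflection padding."""
    padded = size + 2 * attention_padding(kernel_att)
    return (padded - kernel_att) // stride + 1


def unfolded_elements(batch: int, channels: int, kernel_att: int, h_out: int, w_out: int) -> int:
    """Element count of one unfolded tensor (keys or values) across all heads."""
    return batch * channels * kernel_att * kernel_att * h_out * w_out


if __name__ == "__main__":
    print(attention_padding(7))                       # 3
    print(unfolded_positions(240, 7))                 # 240
    # C2PSA_ACmix(64, 64) runs ACmix on 32 hidden channels.
    elements = unfolded_elements(1, 32, 7, 240, 240)
    print(elements, elements * 4 / 1e6, "MB in float32")
```

With the default `kernel_att=7`, each unfolded tensor is 49 times the size of the feature map it came from, which is one reason to place ACmix on low-resolution feature maps first.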

4. Adding ACmix Step by Step

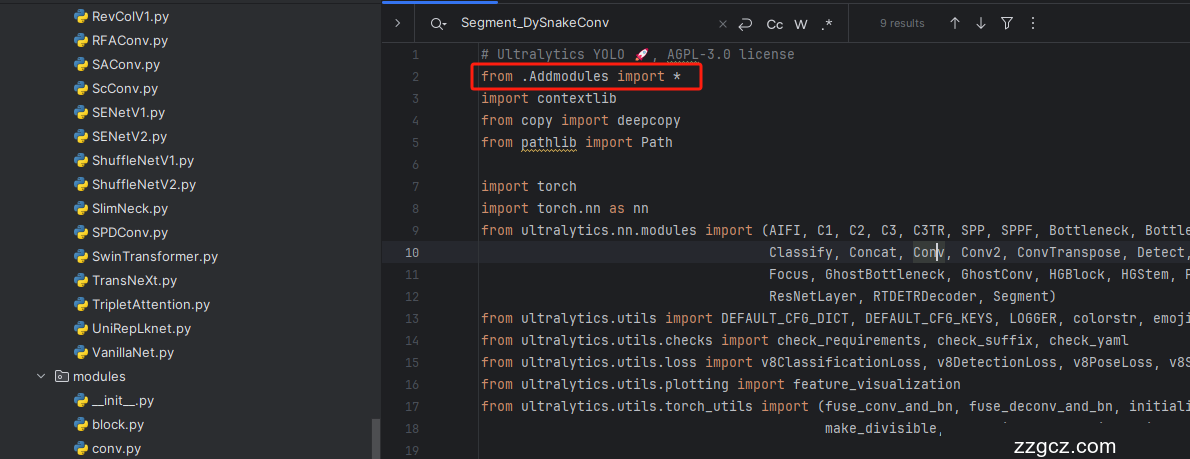

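4.1 Modification 1

First, create the file. Under the ultralytics/nn folder, create a directory named 'Addmodules' (if you are using the files from my group, it already exists and you do not need to create it). Then create a new .py file inside it and paste the core code into that file.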

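4.2 Modification 2

Second, create a new .py file named '__init__.py' in the same directory (if you are using the files from my group, it already exists and you do not need to create it), and import our module inside it (the original post shows this step in a screenshot).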
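As a sketch of what this step amounts to: a package's `__init__.py` can re-export whatever a module lists in `__all__` with a star import. The script below builds a throwaway package in a temporary directory to show the mechanism without touching a real Ultralytics checkout. The file name `acmix_module.py` and the stub classes are assumptions for illustration; use the file name you chose in step 4.1.

```python
import importlib
import sys
import tempfile
from pathlib import Path


def build_demo_package(root: Path) -> None:
    """Create Addmodules/ with a stub module and an __init__.py that re-exports it."""
    pkg = root / "Addmodules"
    pkg.mkdir()
    # Stand-in for the file that holds the core code (hypothetical file name).
    (pkg / "acmix_module.py").write_text(
        "__all__ = ['ACmix', 'C2PSA_ACmix']\n"
        "class ACmix: ...\n"
        "class C2PSA_ACmix: ...\n"
    )
    # The single line this step needs: re-export the names listed in __all__.
    (pkg / "__init__.py").write_text("from .acmix_module import *\n")


def exported_names(root: Path) -> list[str]:
    """Import the demo package and list the public names it exposes."""
    sys.path.insert(0, str(root))
    try:
        module = importlib.import_module("Addmodules")
        return sorted(name for name in vars(module) if not name.startswith("_"))
    finally:
        sys.path.remove(str(root))


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        build_demo_package(Path(tmp))
        print(exported_names(Path(tmp)))
```

Because the core code defines `__all__ = ['ACmix', 'C2PSA_ACmix']`, a star import exposes exactly those two classes and keeps helpers such as `position` or `autopad` out of the package namespace.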

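4.3 Modification 3

Third, open the file 'ultralytics/nn/tasks.py' and import and register our module there (if you are using the files from my group, the import is already in place, so go straight to step four)!

From now on, all tutorials will follow this format, because I assume everyone is using the files from my group to make these changes!!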

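4.4 Modification 4

Add the modules inside parse_model, following the way I added them.

That completes the modifications. You can now copy the yaml files below and run them.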
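The screenshots for this step are not reproduced here, so the following is only a simplified stand-in for the kind of branch that gets added to parse_model; it is not the Ultralytics source. It assumes `C2PSA_ACmix` is handled like the built-in channel-scaled blocks, receiving `(c1, c2, n)`, while `ACmix` receives only its input channel count, which matches the two constructor signatures in the core code. The set names and `resolve_args` are hypothetical.

```python
import math

CHANNEL_SCALED = {"C2PSA_ACmix"}  # constructor takes (c1, c2, n)
SAME_CHANNELS = {"ACmix"}         # constructor takes (in_planes, ...)


def make_divisible(x: float, divisor: int = 8) -> int:
    """Round x up to the nearest multiple of divisor."""
    return int(math.ceil(x / divisor) * divisor)


def resolve_args(module: str, c_in: int, args: list, repeats: int,
                 width: float, max_channels: int) -> tuple[list, int]:
    """Return (constructor_args, c_out) for one yaml row, in a simplified parse_model style."""
    if module in CHANNEL_SCALED:
        # Scale the yaml channel count by the model width, capped at max_channels.
        c_out = make_divisible(min(args[0], max_channels) * width, 8)
        return [c_in, c_out, repeats, *args[1:]], c_out
    if module in SAME_CHANNELS:
        # Output channels equal input channels, so only c_in is passed.
        return [c_in, *args], c_in
    raise ValueError(f"unregistered module: {module}")


if __name__ == "__main__":
    # Row "[-1, 2, C2PSA_ACmix, [1024]]" at scale n (width 0.25, repeats scaled to 1).
    print(resolve_args("C2PSA_ACmix", 256, [1024], 1, 0.25, 1024))  # ([256, 256, 1], 256)
    # Row "[-1, 1, ACmix, []]" placed after a 64-channel layer.
    print(resolve_args("ACmix", 64, [], 1, 0.25, 1024))             # ([64], 64)
```

If a module is used in a yaml file without being registered, the model builder has no way to work out its channel arguments, which is why this step cannot be skipped.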

5. ACmix yaml Files and Run Records

5.1 ACmix yaml Version 1 (Recommended)

Model summary for this version: YOLO11-C2PSA-ACmix summary: 317 layers, 2,599,639 parameters, 2,599,623 gradients, 6.5 GFLOPs

    # Ultralytics YOLO 🚀, AGPL-3.0 license
    # YOLO11 object detection model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/detect

    # Parameters
    nc: 80 # number of classes
    scales: # model compound scaling constants, i.e. 'model=yolo11n.yaml' will call yolo11.yaml with scale 'n'
      # [depth, width, max_channels]
      n: [0.50, 0.25, 1024] # summary: 319 layers, 2624080 parameters, 2624064 gradients, 6.6 GFLOPs
      s: [0.50, 0.50, 1024] # summary: 319 layers, 9458752 parameters, 9458736 gradients, 21.7 GFLOPs
      m: [0.50, 1.00, 512] # summary: 409 layers, 20114688 parameters, 20114672 gradients, 68.5 GFLOPs
      l: [1.00, 1.00, 512] # summary: 631 layers, 25372160 parameters, 25372144 gradients, 87.6 GFLOPs
      x: [1.00, 1.50, 512] # summary: 631 layers, 56966176 parameters, 56966160 gradients, 196.0 GFLOPs

    # YOLO11n backbone
    backbone:
      # [from, repeats, module, args]
      - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2
      - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4
      - [-1, 2, C3k2, [256, False, 0.25]]
      - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8
      - [-1, 2, C3k2, [512, False, 0.25]]
      - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16
      - [-1, 2, C3k2, [512, True]]
      - [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32
      - [-1, 2, C3k2, [1024, True]]
      - [-1, 1, SPPF, [1024, 5]] # 9
      - [-1, 2, C2PSA_ACmix, [1024]] # 10

    # YOLO11n head
    head:
      - [-1, 1, nn.Upsample, [None, 2, "nearest"]]
      - [[-1, 6], 1, Concat, [1]] # cat backbone P4
      - [-1, 2, C3k2, [512, False]] # 13

      - [-1, 1, nn.Upsample, [None, 2, "nearest"]]
      - [[-1, 4], 1, Concat, [1]] # cat backbone P3
      - [-1, 2, C3k2, [256, False]] # 16 (P3/8-small)

      - [-1, 1, Conv, [256, 3, 2]]
      - [[-1, 13], 1, Concat, [1]] # cat head P4
      - [-1, 2, C3k2, [512, False]] # 19 (P4/16-medium)

      - [-1, 1, Conv, [512, 3, 2]]
      - [[-1, 10], 1, Concat, [1]] # cat head P5
      - [-1, 2, C3k2, [1024, True]] # 22 (P5/32-large)

      - [[16, 19, 22], 1, Detect, [nc]] # Detect(P3, P4, P5)
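The yaml above passes `[1024]` to `C2PSA_ACmix`, but the channel count the module actually sees depends on the model scale, and the head count follows from `num_heads=self.c // 64` in the core code. The sketch below works through that arithmetic. It assumes the usual Ultralytics-style width scaling, `make_divisible(min(channels, max_channels) * width, 8)`, which is not shown in this post, so treat the first step as an assumption; the function names are illustrative.

```python
import math

# [depth, width, max_channels], copied from the yaml above.
SCALES = {
    "n": (0.50, 0.25, 1024),
    "s": (0.50, 0.50, 1024),
    "m": (0.50, 1.00, 512),
    "l": (1.00, 1.00, 512),
    "x": (1.00, 1.50, 512),
}


def make_divisible(x: float, divisor: int = 8) -> int:
    """Round x up to the nearest multiple of divisor."""
    return int(math.ceil(x / divisor) * divisor)


def c2psa_acmix_config(yaml_channels: int, width: float, max_channels: int,
                       e: float = 0.5) -> tuple[int, int, int, int]:
    """Return (module channels, hidden channels, heads, head_dim) for one model scale."""
    c1 = make_divisible(min(yaml_channels, max_channels) * width, 8)  # assumed width scaling
    c = int(c1 * e)          # hidden channels, as in C2PSA_ACmix.__init__
    heads = (c // 64) or 1   # ACmix falls back to one head when c // 64 == 0
    return c1, c, heads, c // heads


if __name__ == "__main__":
    for name, (_, width, max_channels) in SCALES.items():
        print(name, c2psa_acmix_config(1024, width, max_channels))
```

Under these assumptions every scale ends up with 64 channels per head, and only the number of heads changes (2 for n, up to 6 for x).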

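5.2 ACmix yaml Version 2

This is the second version. Which version suits your task better is something you will need to find out by running your own experiments.

Model summary for this version: YOLO11-ACmix summary: 349 layers, 2,887,161 parameters, 2,887,145 gradients, 7.1 GFLOPs

Note: I added three attention modules here, but in practice adding just one is usually enough, so feel free to comment out the others.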

    # Ultralytics YOLO 🚀, AGPL-3.0 license
    # YOLO11 object detection model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/detect

    # Parameters
    nc: 80 # number of classes
    scales: # model compound scaling constants, i.e. 'model=yolo11n.yaml' will call yolo11.yaml with scale 'n'
      # [depth, width, max_channels]
      n: [0.50, 0.25, 1024] # summary: 319 layers, 2624080 parameters, 2624064 gradients, 6.6 GFLOPs
      s: [0.50, 0.50, 1024] # summary: 319 layers, 9458752 parameters, 9458736 gradients, 21.7 GFLOPs
      m: [0.50, 1.00, 512] # summary: 409 layers, 20114688 parameters, 20114672 gradients, 68.5 GFLOPs
      l: [1.00, 1.00, 512] # summary: 631 layers, 25372160 parameters, 25372144 gradients, 87.6 GFLOPs
      x: [1.00, 1.50, 512] # summary: 631 layers, 56966176 parameters, 56966160 gradients, 196.0 GFLOPs

    # YOLO11n backbone
    backbone:
      # [from, repeats, module, args]
      - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2
      - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4
      - [-1, 2, C3k2, [256, False, 0.25]]
      - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8
      - [-1, 2, C3k2, [512, False, 0.25]]
      - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16
      - [-1, 2, C3k2, [512, True]]
      - [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32
      - [-1, 2, C3k2, [1024, True]]
      - [-1, 1, SPPF, [1024, 5]] # 9
      - [-1, 2, C2PSA, [1024]] # 10

    # YOLO11n head
    head:
      - [-1, 1, nn.Upsample, [None, 2, "nearest"]]
      - [[-1, 6], 1, Concat, [1]] # cat backbone P4
      - [-1, 2, C3k2, [512, False]] # 13

      - [-1, 1, nn.Upsample, [None, 2, "nearest"]]
      - [[-1, 4], 1, Concat, [1]] # cat backbone P3
      - [-1, 2, C3k2, [256, False]] # 16 (P3/8-small)
      - [-1, 1, ACmix, []] # 17 (P3/8-small) attention added at the small-object detection output

      - [-1, 1, Conv, [256, 3, 2]]
      - [[-1, 13], 1, Concat, [1]] # cat head P4
      - [-1, 2, C3k2, [512, False]] # 20 (P4/16-medium)
      - [-1, 1, ACmix, []] # 21 (P4/16-medium) attention added at the medium-object detection output

      - [-1, 1, Conv, [512, 3, 2]]
      - [[-1, 10], 1, Concat, [1]] # cat head P5
      - [-1, 2, C3k2, [1024, True]] # 24 (P5/32-large)
      - [-1, 1, ACmix, []] # 25 (P5/32-large) attention added at the large-object detection output

      # Three attention modules are added here, but in practice one is usually enough, so try commenting some out.
      # Keeping only one of the three above is recommended, but the from indices must stay aligned.
      # Which layer gets the attention module can be chosen according to your dataset and scenario.
      # If you place the attention modules yourself, make sure the from indices [17, 21, 25] match the corresponding detection layers!
      - [[17, 21, 25], 1, Detect, [nc]] # Detect(P3, P4, P5)
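Because removing or commenting out an ACmix row shifts every later layer index, it helps to recompute the indices before editing the Detect row. The sketch below hard-codes the module order of the yaml above and checks that Detect's `from` list points at the ACmix layers. It is a standalone helper written for this post, not part of Ultralytics, and its names are illustrative.

```python
BACKBONE = ["Conv", "Conv", "C3k2", "Conv", "C3k2", "Conv", "C3k2", "Conv", "C3k2", "SPPF", "C2PSA"]
HEAD = [
    "nn.Upsample", "Concat", "C3k2",
    "nn.Upsample", "Concat", "C3k2", "ACmix",
    "Conv", "Concat", "C3k2", "ACmix",
    "Conv", "Concat", "C3k2", "ACmix",
    "Detect",
]
DETECT_FROM = [17, 21, 25]


def layer_indices(layers: list[str], name: str) -> list[int]:
    """Global indices (backbone first, then head) of every layer with the given module name."""
    return [i for i, module in enumerate(layers) if module == name]


def detect_sources_ok(layers: list[str], detect_from: list[int], name: str = "ACmix") -> bool:
    """True when Detect reads from exactly the layers named `name`."""
    return layer_indices(layers, name) == detect_from


if __name__ == "__main__":
    layers = BACKBONE + HEAD
    print(layer_indices(layers, "ACmix"))          # [17, 21, 25]
    print(detect_sources_ok(layers, DETECT_FROM))  # True

    # Variant: drop the P3 ACmix (original index 17); every later index moves up by one.
    variant = layers[:17] + layers[18:]
    print(layer_indices(variant, "ACmix"))         # [20, 24]
```

In the variant, Detect would need to read from `[16, 20, 24]`: the P3 branch falls back to the C3k2 output at index 16, while the two remaining ACmix layers sit at 20 and 24. The absolute Concat sources (13 and 10) are unaffected because they sit before the removed row.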

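5.3 ACmix Training Screenshots

Below is a screenshot of training with ACmix added.

You can check the run results and the insertion positions below, so there is no question of the code I posted being incomplete or failing to run. If you run into problems, leave a comment and I will answer whatever I can.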

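6. Summary

That concludes the main content of this post. I recommend my column on effective YOLOv11 improvements: it is newly opened and currently has an average quality score of 98. I will keep reproducing papers from the latest top conferences and will also add to some of the older improvement mechanisms. If this post helped you, subscribe to the column and follow along for further updates~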