1. Introduction

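This article introduces an improvement that uses the Hierarchical Feature Fusion Block (HFFB) to redesign the neck of YOLOv11. I call the result HFPN. The module fuses three kinds of features (local, global, and intermediate) and uses the fused result to assist YOLOv11's detection. I designed three variants, each wired differently, that target large objects, small objects, and standard-size objects respectively, so you can pick the one that suits your dataset. This content is my own original work.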

2. How It Works

Official paper: click here to open it.

Official code: click here to open it.

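HiFuse uses a three-branch hierarchical multi-scale feature fusion network that combines the strengths of CNNs and Transformers:

- Local branch (Local Feature Block): extracts local features with a 3×3 depthwise separable convolution.
- Global branch (Global Feature Block): built on Swin Transformer, it uses window multi-head self-attention (W-MSA) to capture global information.
- Adaptive Hierarchical Feature Fusion block (HFF Block): fuses local and global features from different levels. It consists of:
  - Spatial attention (SA): enhances local detail.
  - Channel attention (CA): strengthens specific semantic features.
  - Inverted residual MLP (IRMLP): mitigates vanishing gradients and improves information flow.
  - Shortcut connection: improves the fusion result.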
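The SA and CA items above boil down to two pooling steps, which you can see in `HFFB.forward` in Section 3: SA pools across channels at every pixel (max and mean, then a 7×7 conv and a sigmoid), while CA pools each channel over all positions (max and average, then a shared MLP `self.se` and a sigmoid). The sketch below is a minimal illustration in plain Python on nested lists shaped [C][H][W]; it reproduces only the pooling and the sigmoid gating, leaves out the 7×7 conv and the MLP, and all helper names are hypothetical.

```python
import math

def spatial_descriptors(x):
    """SA input: per-pixel max and mean over the channels of x ([C][H][W] nested lists).
    In HFFB these two maps are stacked and fed to a 7x7 conv (omitted here)."""
    C, H, W = len(x), len(x[0]), len(x[0][0])
    max_map = [[max(x[c][i][j] for c in range(C)) for j in range(W)] for i in range(H)]
    mean_map = [[sum(x[c][i][j] for c in range(C)) / C for j in range(W)] for i in range(H)]
    return max_map, mean_map

def channel_descriptors(x):
    """CA input: per-channel global max and average, like AdaptiveMaxPool2d(1) / AdaptiveAvgPool2d(1).
    In HFFB both go through the shared MLP self.se (omitted here) and are summed."""
    maxes = [max(v for row in ch for v in row) for ch in x]
    avgs = [sum(v for row in ch for v in row) / (len(ch) * len(ch[0])) for ch in x]
    return maxes, avgs

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def gate_channels(x, scores):
    """Scale every value of channel c by sigmoid(scores[c]), as in `sigmoid(max_out + avg_out) * g`."""
    return [[[sigmoid(s) * v for v in row] for row in ch] for ch, s in zip(x, scores)]

# A toy feature map with 2 channels and a 2x2 spatial size.
x = [[[1.0, 2.0], [3.0, 4.0]],
     [[4.0, 0.0], [1.0, 2.0]]]
max_map, mean_map = spatial_descriptors(x)  # [[4.0, 2.0], [3.0, 4.0]] and [[2.5, 1.0], [2.0, 3.0]]
maxes, avgs = channel_descriptors(x)         # [4.0, 4.0] and [2.5, 1.75]
gated = gate_channels(x, [0.0, 0.0])         # sigmoid(0) = 0.5, so every value is halved
```

In HFFB the spatial gate is applied to the local input `l` and the channel gate to the global input `g`; spatial gating works the same way as `gate_channels`, just with one score per pixel instead of one per channel.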

3. Core Code

See Section 4 for how to use the core code!

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class Conv(nn.Module):
    def __init__(self, inp_dim, out_dim, kernel_size=3, stride=1, bn=False, relu=True, bias=True, group=1):
        super(Conv, self).__init__()
        self.inp_dim = inp_dim
        # Note: `group` is accepted but never passed to nn.Conv2d, so every Conv here is a dense conv
        # (including IRMLP.conv1, which is called with group=inp_dim). Adding groups=group would make it
        # depthwise, but would also change the parameter count reported in Section 5.
        self.conv = nn.Conv2d(inp_dim, out_dim, kernel_size, stride, padding=(kernel_size - 1) // 2, bias=bias)
        self.relu = None
        self.bn = None
        if relu:
            self.relu = nn.ReLU(inplace=True)
        if bn:
            self.bn = nn.BatchNorm2d(out_dim)

    def forward(self, x):
        assert x.size()[1] == self.inp_dim, "{} {}".format(x.size()[1], self.inp_dim)
        x = self.conv(x)
        if self.bn is not None:
            x = self.bn(x)
        if self.relu is not None:
            x = self.relu(x)
        return x


def drop_path_f(x, drop_prob: float = 0., training: bool = False):
    """Drop paths (Stochastic Depth) per sample (when applied in main path of residual blocks).
    This is the same as the DropConnect impl I created for EfficientNet, etc networks, however,
    the original name is misleading as 'Drop Connect' is a different form of dropout in a separate paper...
    See discussion: https://github.com/tensorflow/tpu/issues/494#issuecomment-532968956 ... I've opted for
    changing the layer and argument names to 'drop path' rather than mix DropConnect as a layer name and use
    'survival rate' as the argument.
    """
    if drop_prob == 0. or not training:
        return x
    keep_prob = 1 - drop_prob
    shape = (x.shape[0],) + (1,) * (x.ndim - 1)  # work with diff dim tensors, not just 2D ConvNets
    random_tensor = keep_prob + torch.rand(shape, dtype=x.dtype, device=x.device)
    random_tensor.floor_()  # binarize
    output = x.div(keep_prob) * random_tensor
    return output


class DropPath(nn.Module):
    """Drop paths (Stochastic Depth) per sample (when applied in main path of residual blocks).
    """
    def __init__(self, drop_prob=None):
        super(DropPath, self).__init__()
        self.drop_prob = drop_prob

    def forward(self, x):
        return drop_path_f(x, self.drop_prob, self.training)


##### Local Feature Block Component #####
class LayerNorm(nn.Module):
    r""" LayerNorm that supports two data formats: channels_last (default) or channels_first.
    The ordering of the dimensions in the inputs. channels_last corresponds to inputs with
    shape (batch_size, height, width, channels) while channels_first corresponds to inputs
    with shape (batch_size, channels, height, width).
    """
    def __init__(self, normalized_shape, eps=1e-6, data_format="channels_last"):
        super().__init__()
        self.weight = nn.Parameter(torch.ones(normalized_shape), requires_grad=True)
        self.bias = nn.Parameter(torch.zeros(normalized_shape), requires_grad=True)
        self.eps = eps
        self.data_format = data_format
        if self.data_format not in ["channels_last", "channels_first"]:
            raise ValueError(f"not support data format '{self.data_format}'")
        self.normalized_shape = (normalized_shape,)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        if self.data_format == "channels_last":
            return F.layer_norm(x, self.normalized_shape, self.weight, self.bias, self.eps)
        elif self.data_format == "channels_first":
            # [batch_size, channels, height, width]
            mean = x.mean(1, keepdim=True)
            var = (x - mean).pow(2).mean(1, keepdim=True)
            x = (x - mean) / torch.sqrt(var + self.eps)
            x = self.weight[:, None, None] * x + self.bias[:, None, None]
            return x


class Local_block(nn.Module):
    r""" Local Feature Block. There are two equivalent implementations:
    (1) DwConv -> LayerNorm (channels_first) -> 1x1 Conv -> GELU -> 1x1 Conv; all in (N, C, H, W)
    (2) DwConv -> Permute to (N, H, W, C); LayerNorm (channels_last) -> Linear -> GELU -> Linear; Permute back
    We use (2) as we find it slightly faster in PyTorch
    Args:
        dim (int): Number of input channels.
        drop_rate (float): Stochastic depth rate. Default: 0.0
    """
    # Not used by HFFB below; kept from the original code.
    def __init__(self, dim, drop_rate=0.):
        super().__init__()
        self.dwconv = nn.Conv2d(dim, dim, kernel_size=3, padding=1, groups=dim)  # depthwise conv
        self.norm = LayerNorm(dim, eps=1e-6, data_format="channels_last")
        self.pwconv = nn.Linear(dim, dim)  # pointwise/1x1 convs, implemented with linear layers
        self.act = nn.GELU()
        self.drop_path = DropPath(drop_rate) if drop_rate > 0. else nn.Identity()

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        shortcut = x
        x = self.dwconv(x)
        x = x.permute(0, 2, 3, 1)  # [N, C, H, W] -> [N, H, W, C]
        x = self.norm(x)
        x = self.pwconv(x)
        x = self.act(x)
        x = x.permute(0, 3, 1, 2)  # [N, H, W, C] -> [N, C, H, W]
        x = shortcut + self.drop_path(x)
        return x


class IRMLP(nn.Module):
    def __init__(self, inp_dim, out_dim):
        super(IRMLP, self).__init__()
        self.conv1 = Conv(inp_dim, inp_dim, 3, relu=False, bias=False, group=inp_dim)
        self.conv2 = Conv(inp_dim, inp_dim * 4, 1, relu=False, bias=False)  # expand 4x
        self.conv3 = Conv(inp_dim * 4, out_dim, 1, relu=False, bias=False, bn=True)  # project to out_dim
        self.gelu = nn.GELU()
        self.bn1 = nn.BatchNorm2d(inp_dim)

    def forward(self, x):
        residual = x
        out = self.conv1(x)
        out = self.gelu(out)
        out += residual
        out = self.bn1(out)
        out = self.conv2(out)
        out = self.gelu(out)
        out = self.conv3(out)
        return out


# Hierarchical Feature Fusion Block
class HFFB(nn.Module):
    def __init__(self, ch_1, r_2=16, drop_rate=0.):
        super(HFFB, self).__init__()
        # All branch widths are tied to ch_1, the channel count of the first input.
        ch_2 = ch_1
        ch_int = ch_1
        ch_out = ch_2
        self.maxpool = nn.AdaptiveMaxPool2d(1)
        self.avgpool = nn.AdaptiveAvgPool2d(1)
        self.se = nn.Sequential(
            nn.Conv2d(ch_2, ch_2 // r_2, 1, bias=False),
            nn.ReLU(),
            nn.Conv2d(ch_2 // r_2, ch_2, 1, bias=False)
        )
        self.sigmoid = nn.Sigmoid()
        self.spatial = Conv(2, 1, 7, bn=True, relu=False, bias=False)
        self.W_l = Conv(ch_1, ch_int, 1, bn=True, relu=False)
        self.W_g = Conv(ch_2, ch_int, 1, bn=True, relu=False)
        self.Avg = nn.AvgPool2d(2, stride=2)
        self.Updim = Conv(ch_int // 2, ch_int, 1, bn=True, relu=True)
        self.norm1 = LayerNorm(ch_int * 3, eps=1e-6, data_format="channels_first")
        self.norm2 = LayerNorm(ch_int * 2, eps=1e-6, data_format="channels_first")
        self.norm3 = LayerNorm(ch_1 + ch_2 + ch_int, eps=1e-6, data_format="channels_first")
        self.W3 = Conv(ch_int * 3, ch_int, 1, bn=True, relu=False)
        self.W = Conv(ch_int * 2, ch_int, 1, bn=True, relu=False)
        self.gelu = nn.GELU()
        self.residual = IRMLP(ch_1 + ch_2 + ch_int, ch_out)
        self.drop_path = DropPath(drop_rate) if drop_rate > 0. else nn.Identity()

    def forward(self, x):
        # x = [l, g, f]: l and g have ch_1 channels at the same resolution;
        # f (or None) has ch_1 // 2 channels at twice that resolution.
        l, g, f = x
        W_local = self.W_l(l)  # local feature from Local Feature Block
        W_global = self.W_g(g)  # global feature from Global Feature Block
        if f is not None:
            W_f = self.Updim(f)  # ch_1 // 2 -> ch_1 channels
            W_f = self.Avg(W_f)  # halve H and W to match l and g
            shortcut = W_f
            X_f = torch.cat([W_f, W_local, W_global], 1)
            X_f = self.norm1(X_f)
            X_f = self.W3(X_f)
            X_f = self.gelu(X_f)
        else:
            shortcut = 0
            X_f = torch.cat([W_local, W_global], 1)
            X_f = self.norm2(X_f)
            X_f = self.W(X_f)
            X_f = self.gelu(X_f)

        # spatial attention for ConvNeXt branch
        l_jump = l
        max_result, _ = torch.max(l, dim=1, keepdim=True)
        avg_result = torch.mean(l, dim=1, keepdim=True)
        result = torch.cat([max_result, avg_result], 1)
        l = self.spatial(result)
        l = self.sigmoid(l) * l_jump

        # channel attention for transformer branch
        g_jump = g
        max_result = self.maxpool(g)
        avg_result = self.avgpool(g)
        max_out = self.se(max_result)
        avg_out = self.se(avg_result)
        g = self.sigmoid(max_out + avg_out) * g_jump

        fuse = torch.cat([g, l, X_f], 1)
        fuse = self.norm3(fuse)
        fuse = self.residual(fuse)
        fuse = shortcut + self.drop_path(fuse)
        return fuse
```
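Before wiring HFFB into a yaml, it helps to spell out the input contract that the code above implies. `g` must match `l` in both channels (`W_g` asserts `ch_2 == ch_1`) and spatial size (for `torch.cat`). The optional `f` must have `ch_1 // 2` channels (the input width of `Updim`), and after the 2×2, stride-2 average pool it must have the same H and W as `l`. The output keeps `l`'s shape. The sketch below checks this in plain Python; `check_hffb_inputs` is a hypothetical helper, not part of the module.

```python
def check_hffb_inputs(l_shape, g_shape, f_shape=None):
    """Hypothetical helper: validate HFFB inputs given as (C, H, W) tuples and
    return the output shape, or raise ValueError if the module would fail."""
    c1, h, w = l_shape
    if tuple(g_shape) != tuple(l_shape):
        raise ValueError(f"g {g_shape} must match l {l_shape}")
    if f_shape is not None:
        cf, hf, wf = f_shape
        if cf != c1 // 2:
            raise ValueError(f"f needs {c1 // 2} channels, got {cf}")
        if (hf // 2, wf // 2) != (h, w):  # nn.AvgPool2d(2, stride=2) floors odd sizes
            raise ValueError(f"f spatial size {(hf, wf)} does not pool down to {(h, w)}")
    # IRMLP projects back to ch_out == ch_1, and no layer changes H or W
    return (c1, h, w)

# Small-object variant at scale n with a 640x640 input:
# l = layer 16 (P3/8), g = layer 3 (P3/8), f = layer 1 (P2/4)
out = check_hffb_inputs((64, 80, 80), (64, 80, 80), (32, 160, 160))
```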

4. How to Add the Module

4.1 Modification 1

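First, create the file. Under the ultralytics/nn folder, create a directory named 'Addmodules' (if you are using the files from the group, it already exists and you do not need to create it). Then create a new .py file inside it and paste the core code into it.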

4.2 Modification 2

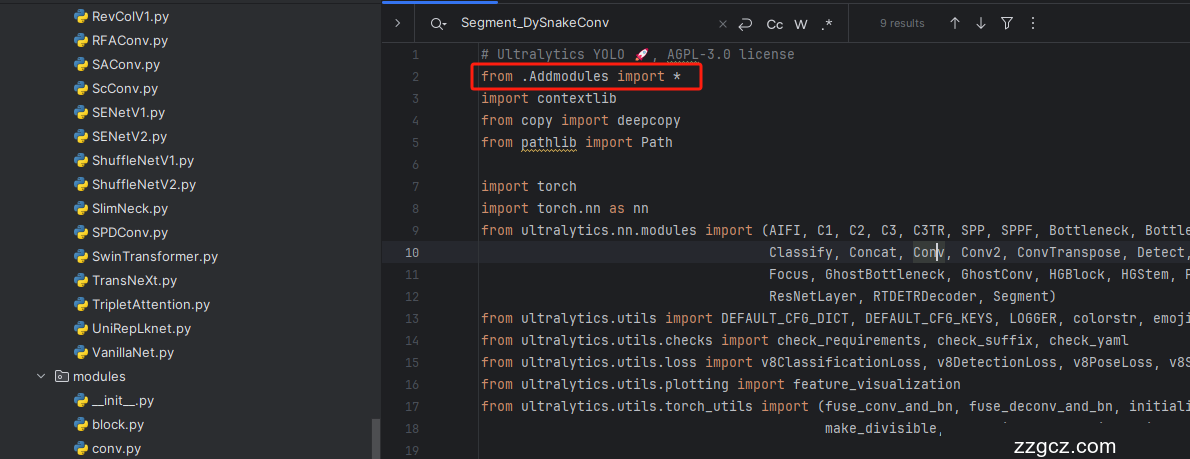

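Second, create a new file named '__init__.py' in that directory (if you are using the files from the group, it already exists and you do not need to create it), and import our module in it, as shown in the figure.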
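If you prefer to script Steps 1 and 2, here is a minimal sketch. The module file name `HFPN.py` and the import line `from .HFPN import HFFB` are assumptions for illustration (the actual import is in the figure); adjust both to whatever you named your file. `scaffold_addmodules` is a hypothetical helper.

```python
import tempfile
from pathlib import Path

def scaffold_addmodules(repo_root, module_file="HFPN.py", code=None):
    """Hypothetical helper for Steps 1-2: create ultralytics/nn/Addmodules/,
    optionally write the module file, and make __init__.py import HFFB exactly once."""
    pkg = Path(repo_root) / "ultralytics" / "nn" / "Addmodules"
    pkg.mkdir(parents=True, exist_ok=True)
    if code is not None:
        (pkg / module_file).write_text(code, encoding="utf-8")
    init = pkg / "__init__.py"
    line = f"from .{Path(module_file).stem} import HFFB\n"  # assumed import line
    existing = init.read_text(encoding="utf-8") if init.exists() else ""
    if line not in existing:
        if existing and not existing.endswith("\n"):
            existing += "\n"
        init.write_text(existing + line, encoding="utf-8")
    return pkg

# Demo against a throwaway directory instead of a real Ultralytics checkout.
demo_root = tempfile.mkdtemp()
pkg = scaffold_addmodules(demo_root, code="# paste the core code here\n")
scaffold_addmodules(demo_root)  # a second run leaves the module file and the import line untouched
```

Point `repo_root` at your own Ultralytics source tree to use it for real.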

4.3 Modification 3

Third, open 'ultralytics/nn/tasks.py' and import and register our module there (if you are using the files from the group, this is already done; go straight to Step 4)!

4.4 Modification 4

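In the same file, 'ultralytics/nn/tasks.py', add the module to the parse_model method (use the surrounding code to find the right spot; if you are unsure, a video walkthrough is available in the group).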
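The exact parse_model lines are not reproduced in this post, so the following is an assumption about what the HFFB branch needs to do: read the channel count of the first input (`l`), pass it to HFFB as `ch_1`, and report the same count as the layer's output, since HFFB returns `ch_1` channels. The sketch below expresses that as a standalone, hypothetical helper; the `ch_n` list holds per-layer output channels I computed for scale n of the yaml in Section 5 and is only illustrative.

```python
def resolve_hffb_args(f, ch, args):
    """Hypothetical stand-in for an HFFB branch in parse_model.

    f:    the yaml 'from' field, e.g. [16, 3, 1] for the (l, g, f) inputs
    ch:   output channels of every layer built so far (ch[i] = channels of layer i)
    args: the yaml 'args' field ([] for HFFB)
    Inside parse_model this would correspond to something like:
        elif m is HFFB:
            c2 = ch[f[0]]
            args = [c2, *args]
    """
    c1 = ch[f[0]]
    return c1, [c1, *args]

# Output channels of layers 0-22 for scale n (width 0.25), illustrative only.
ch_n = [16, 32, 64, 64, 128, 128, 128, 256, 256, 256, 256, 256,
        384, 128, 128, 256, 64, 64, 192, 128, 128, 384, 256]

c2, hffb_args = resolve_hffb_args([16, 3, 1], ch_n, [])  # small-object variant
```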

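That completes the modifications. You can copy the yaml file below and run it.

If you cannot get the module added, contact the author to join the group and watch the video tutorial.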

5. Training

5.1 yaml file 1

Training info: YOLO11-HFPN summary: 362 layers, 11,554,950 parameters, 11,554,934 gradients, 13.6 GFLOPs

```yaml
# Ultralytics YOLO 🚀, AGPL-3.0 license
# YOLO11 object detection model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/detect

# Parameters
nc: 80 # number of classes
scales: # model compound scaling constants, i.e. 'model=yolo11n.yaml' will call yolo11.yaml with scale 'n'
  # [depth, width, max_channels]
  n: [0.50, 0.25, 1024] # summary: 319 layers, 2624080 parameters, 2624064 gradients, 6.6 GFLOPs
  s: [0.50, 0.50, 1024] # summary: 319 layers, 9458752 parameters, 9458736 gradients, 21.7 GFLOPs
  m: [0.50, 1.00, 512] # summary: 409 layers, 20114688 parameters, 20114672 gradients, 68.5 GFLOPs
  l: [1.00, 1.00, 512] # summary: 631 layers, 25372160 parameters, 25372144 gradients, 87.6 GFLOPs
  x: [1.00, 1.50, 512] # summary: 631 layers, 56966176 parameters, 56966160 gradients, 196.0 GFLOPs

# YOLO11n backbone
backbone:
  # [from, repeats, module, args]
  - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2
  - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4
  - [-1, 2, C3k2, [256, False, 0.25]]
  - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8
  - [-1, 2, C3k2, [512, False, 0.25]]
  - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16
  - [-1, 2, C3k2, [512, True]]
  - [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32
  - [-1, 2, C3k2, [1024, True]]
  - [-1, 1, SPPF, [1024, 5]] # 9
  - [-1, 2, C2PSA, [1024]] # 10

# YOLO11n head
head:
  - [-1, 1, nn.Upsample, [None, 2, "nearest"]]
  - [[-1, 6], 1, Concat, [1]] # cat backbone P4
  - [-1, 2, C3k2, [512, False]] # 13

  - [-1, 1, nn.Upsample, [None, 2, "nearest"]]
  - [[-1, 4], 1, Concat, [1]] # cat backbone P3
  - [-1, 2, C3k2, [256, False]] # 16 (P3/8-small)

  - [-1, 1, Conv, [256, 3, 2]]
  - [[-1, 13], 1, Concat, [1]] # cat head P4
  - [-1, 2, C3k2, [512, False]] # 19 (P4/16-medium)

  - [-1, 1, Conv, [512, 3, 2]]
  - [[-1, 10], 1, Concat, [1]] # cat head P5
  - [-1, 2, C3k2, [1024, True]] # 22 (P5/32-large)

  # Three variants follow, targeting large, medium and small objects (in that order).
  # Uncomment exactly one HFFB/Detect pair; the small-object variant is the default.
  # - [[22, 10, 19], 1, HFFB, []] # 23 (P5/32-large)
  # - [[16, 19, 23], 1, Detect, [nc]] # Detect(P3, P4, P5)

  # - [[19, 6, 16], 1, HFFB, []] # 23 (P4/16-medium)
  # - [[16, 23, 22], 1, Detect, [nc]] # Detect(P3, P4, P5)

  - [[16, 3, 1], 1, HFFB, []] # 23 (P3/8-small)
  - [[23, 19, 22], 1, Detect, [nc]] # Detect(P3, P4, P5)
```
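Because `HFFB` requires its `f` input to have exactly half the channels of `l` and `g`, not every variant works at every scale. The sketch below checks this, assuming parse_model scales channels the way stock Ultralytics does (`make_divisible(min(c2, max_channels) * width, 8)`) and passes the channel count of the first input as `ch_1`. The helper names are hypothetical. Under those assumptions, the small and medium variants work at every scale, but the large-object variant only works at n and s: at m, l and x, `max_channels=512` caps layers 19 and 22 at the same width, so `f` has as many channels as `l` and the channel assertion in `Conv` fails.

```python
import math

def make_divisible(x, divisor=8):
    """Round x up to the nearest multiple of divisor."""
    return math.ceil(x / divisor) * divisor

def scaled_channels(c2, width, max_channels):
    """Assumed Ultralytics rule: cap at max_channels, apply the width multiplier, round up to 8."""
    return make_divisible(min(c2, max_channels) * width, 8)

# (width, max_channels) from the `scales` block of the yaml above
SCALES = {"n": (0.25, 1024), "s": (0.50, 1024), "m": (1.00, 512), "l": (1.00, 512), "x": (1.50, 512)}
# nominal (unscaled) output channels of the layers the HFFB variants read from
NOMINAL = {1: 128, 3: 256, 6: 512, 10: 1024, 16: 256, 19: 512, 22: 1024}
# (l, g, f) layer indices of each variant in the yaml
VARIANTS = {"large": (22, 10, 19), "medium": (19, 6, 16), "small": (16, 3, 1)}

def variant_channels(scale, variant):
    width, max_ch = SCALES[scale]
    return tuple(scaled_channels(NOMINAL[i], width, max_ch) for i in VARIANTS[variant])

def variant_ok(scale, variant):
    """HFFB needs channels(l) == channels(g) and channels(f) == channels(l) // 2."""
    l, g, f = variant_channels(scale, variant)
    return l == g and f == l // 2

for s in SCALES:
    print(s, {v: variant_ok(s, v) for v in VARIANTS})
```

If your Ultralytics version scales channels differently, plug its rule into `scaled_channels` before drawing conclusions.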

5.2 Training code

Create a .py file, paste the code below into it, set your own file paths, and run it.

```python
import warnings
warnings.filterwarnings('ignore')
from ultralytics import YOLO

if __name__ == '__main__':
    model = YOLO('replace with the path to your model yaml')
    # To switch model scale, change the yaml above to e.g. yolo11s.yaml to use YOLO11s.
    # Likewise, if an improved config is named yolov11-XXX.yaml and you want another scale, change the name
    # above to yolov11l-XXX.yaml (change the name passed to YOLO() above, not the config file itself)!
    # model.load('yolo11n.pt')  # whether to load pretrained weights; not recommended for research, as it makes accuracy gains hard to achieve
    model.train(data=r"replace with the path to your dataset yaml",
                # for other tasks, change `task` in 'ultralytics/cfg/default.yaml' to detect, segment, classify or pose
                cache=False,
                imgsz=640,
                epochs=150,
                single_cls=False,  # whether this is single-class detection
                batch=16,
                close_mosaic=0,
                workers=0,
                device='0',
                optimizer='SGD',  # using SGD
                # resume='runs/train/exp21/weights/last.pt',  # to resume training, set this to the path of last.pt
                amp=False,  # AMP is off here; turning it off helps if the training loss becomes NaN
                project='runs/train',
                name='exp',
                )
```
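The comments in the script rely on the scale letter being embedded in the name passed to `YOLO()`: `yolov11l-XXX.yaml` selects scale `l` and loads the base file `yolov11-XXX.yaml`, which must exist. Ultralytics does this parsing internally with its own regex, which may differ between versions; the hypothetical helper below only illustrates the convention. To my knowledge, when no scale letter is found, Ultralytics falls back to the first key under `scales` (here `n`) and logs a warning.

```python
import re
from pathlib import Path

# "yolo" or "yolov", a version number, then one scale letter
SCALE_RE = re.compile(r"(yolov?\d+)([nslmx])")

def split_scale(cfg_name):
    """Hypothetical helper: 'yolov11l-HFPN.yaml' -> ('l', 'yolov11-HFPN.yaml')."""
    p = Path(cfg_name)
    m = SCALE_RE.search(p.stem)
    if m is None:
        return "", cfg_name  # no scale letter in the name
    base = p.with_name(SCALE_RE.sub(r"\1", p.name, count=1))
    return m.group(2), str(base)

scale, base = split_scale("yolov11l-HFPN.yaml")
```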

5.3 Training process screenshot

6. Summary

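That concludes this article. I'd like to recommend my column on effective YOLOv11 improvements. The column is newly launched and has an average quality score of 98. Going forward I will reproduce papers from the latest top conferences and also add to some of the older improvement mechanisms. If this article helped you, please subscribe to the column and follow along for future updates.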