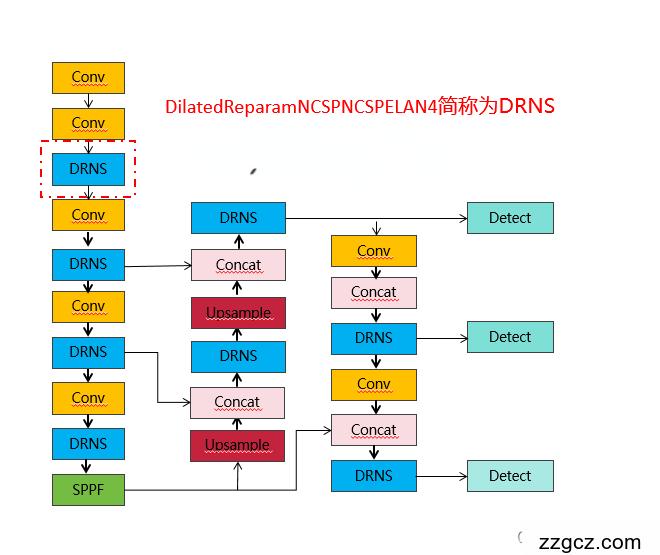

💡💡💡本文独家改进:DilatedReparamBlock结合YOLOv9RepNCSPELAN4二次创新

改进结构图如下:

收录

YOLOv8原创自研

💡💡💡全网独家首发创新(原创),适合paper !!!

💡💡💡 2024年计算机视觉顶会创新点适用于Yolov5、Yolov7、Yolov8等各个Yolo系列,专栏文章提供每一步步骤和源码,轻松带你上手魔改网络 !!!

💡💡💡重点:通过本专栏的阅读,后续你也可以设计魔改网络,在网络不同位置(Backbone、head、detect、loss等)进行魔改,实现创新!!!

1.UniRepLKNet改进原理介绍

论文:https://arxiv.org/pdf/2311.15599.pdf

论文:https://arxiv.org/pdf/2311.15599.pdf

摘要:大内核卷积神经网络(ConvNet)最近受到了广泛的研究关注,但有两个未解决的关键问题需要进一步研究。1)现有大内核ConvNet的架构很大程度上遵循传统ConvNet或Transformer的设计原则,而大内核ConvNet的架构设计仍然没有得到解决。2)由于 Transformer 已经主导了多种模态,ConvNet 是否在视觉以外的领域也具有强大的通用感知能力还有待研究。在本文中,我们从两个方面做出贡献。1)我们提出了设计大内核ConvNet的四个架构指南,其核心是利用大内核区别于小内核的本质特征——看得宽而不深入。遵循这些准则,我们提出的大内核 ConvNet 在图像识别方面表现出了领先的性能。例如,我们的模型实现了 88.0% 的 ImageNet 准确率、55.6% 的 ADE20K mIoU 和 56.4% 的 COCO box AP,表现出比最近提出的一些强大竞争对手更好的性能和更高的速度。2)我们发现,大内核是在 ConvNet 原本不擅长的领域发挥卓越性能的关键。通过某些与模态相关的预处理方法,即使没有对架构进行模态特定的定制,所提出的模型也能在时间序列预测和音频识别任务上实现最先进的性能。

大卷积核CNN设计:

- 局部结构设计:使用SE、bottleneck等高效结构来增加深度。

- 重参数化:用膨胀卷积来捕捉稀疏特征。本文提出了一个子模块叫Dilated Reparam Block,这个模块中除了大核卷积以外,还用了并行的膨胀卷积,而且利用结构重参数化的思想,整个block可以等价转换为一个大核卷积。这是因为小kernel+膨胀卷积等价于大kernel+非膨胀卷积。如下图所示。

- 关于kernel size:根据下游任务及所采用的具体框架来选定kernel size;对本文涉及的任务而言,13x13是足够的。

- 关于scaling law:对一个已经用了很多大kernel的小模型而言,当增加模型的深度时(例如从Tiny级别模型的18层增加到Base级别的36层),增加的那些block应该用depthwise 3x3,不用再增加大kernel了,感受野已经足够大了,但用3x3这么高效的操作来提高特征抽象层次总是有好处的。

2.如何将入YOLOv8

2.1 新建ultralytics/nn/backbone/UniRepLKNetBlock.py

import torch

import torch.nn as nn

import torch.nn.functional as F

from timm.layers import trunc_normal_, DropPath, to_2tuple

from functools import partial

import torch.utils.checkpoint as checkpoint

import numpy as np

from ultralytics.nn.modules.conv import Conv,autopad

from ultralytics.nn.modules.block import C3,C2f

class GRNwithNHWC(nn.Module):

""" GRN (Global Response Normalization) layer

Originally proposed in ConvNeXt V2 (https://arxiv.org/abs/2301.00808)

This implementation is more efficient than the original (https://github.com/facebookresearch/ConvNeXt-V2)

We assume the inputs to this layer are (N, H, W, C)

"""

def __init__(self, dim, use_bias=True):

super().__init__()

self.use_bias = use_bias

self.gamma = nn.Parameter(torch.zeros(1, 1, 1, dim))

if self.use_bias:

self.beta = nn.Parameter(torch.zeros(1, 1, 1, dim))

def forward(self, x):

Gx = torch.norm(x, p=2, dim=(1, 2), keepdim=True)

Nx = Gx / (Gx.mean(dim=-1, keepdim=True) + 1e-6)

if self.use_bias:

return (self.gamma * Nx + 1) * x + self.beta

else:

return (self.gamma * Nx + 1) * x

class NCHWtoNHWC(nn.Module):

def __init__(self):

super().__init__()

def forward(self, x):

return x.permute(0, 2, 3, 1)

class NHWCtoNCHW(nn.Module):

def __init__(self):

super().__init__()

def forward(self, x):

return x.permute(0, 3, 1, 2)

#================== This function decides which conv implementation (the native or iGEMM) to use

# Note that iGEMM large-kernel conv impl will be used if

# - you attempt to do so (attempt_to_use_large_impl=True), and

# - it has been installed (follow https://github.com/AILab-CVC/UniRepLKNet), and

# - the conv layer is depth-wise, stride = 1, non-dilated, kernel_size > 5, and padding == kernel_size // 2

def get_conv2d(in_channels, out_channels, kernel_size, stride, padding, dilation, groups, bias,

attempt_use_lk_impl=True):

kernel_size = to_2tuple(kernel_size)

if padding is None:

padding = (kernel_size[0] // 2, kernel_size[1] // 2)

else:

padding = to_2tuple(padding)

need_large_impl = kernel_size[0] == kernel_size[1] and kernel_size[0] > 5 and padding == (kernel_size[0] // 2, kernel_size[1] // 2)

# if attempt_use_lk_impl and need_large_impl:

# print('---------------- trying to import iGEMM implementation for large-kernel conv')

# try:

# from depthwise_conv2d_implicit_gemm import DepthWiseConv2dImplicitGEMM

# print('---------------- found iGEMM implementation ')

# except:

# DepthWiseConv2dImplicitGEMM = None

# print('---------------- found no iGEMM. use original conv. follow https://github.com/AILab-CVC/UniRepLKNet to install it.')

# if DepthWiseConv2dImplicitGEMM is not None and need_large_impl and in_channels == out_channels \

# and out_channels == groups and stride == 1 and dilation == 1:

# print(f'===== iGEMM Efficient Conv Impl, channels {in_channels}, kernel size {kernel_size} =====')

# return DepthWiseConv2dImplicitGEMM(in_channels, kernel_size, bias=bias)

return nn.Conv2d(in_channels=in_channels, out_channels=out_channels, kernel_size=kernel_size, stride=stride,

padding=padding, dilation=dilation, groups=groups, bias=bias)

def get_bn(dim, use_sync_bn=False):

if use_sync_bn:

return nn.SyncBatchNorm(dim)

else:

return nn.BatchNorm2d(dim)

class SEBlock(nn.Module):

"""

Squeeze-and-Excitation Block proposed in SENet (https://arxiv.org/abs/1709.01507)

We assume the inputs to this layer are (N, C, H, W)

"""

def __init__(self, input_channels, internal_neurons):

super(SEBlock, self).__init__()

self.down = nn.Conv2d(in_channels=input_channels, out_channels=internal_neurons,

kernel_size=1, stride=1, bias=True)

self.up = nn.Conv2d(in_channels=internal_neurons, out_channels=input_channels,

kernel_size=1, stride=1, bias=True)

self.input_channels = input_channels

self.nonlinear = nn.ReLU(inplace=True)

def forward(self, inputs):

x = F.adaptive_avg_pool2d(inputs, output_size=(1, 1))

x = self.down(x)

x = self.nonlinear(x)

x = self.up(x)

x = F.sigmoid(x)

return inputs * x.view(-1, self.input_channels, 1, 1)

def fuse_bn(conv, bn):

conv_bias = 0 if conv.bias is None else conv.bias

std = (bn.running_var + bn.eps).sqrt()

return conv.weight * (bn.weight / std).reshape(-1, 1, 1, 1), bn.bias + (conv_bias - bn.running_mean) * bn.weight / std

def convert_dilated_to_nondilated(kernel, dilate_rate):

identity_kernel = torch.ones((1, 1, 1, 1)).to(kernel.device)

if kernel.size(1) == 1:

# This is a DW kernel

dilated = F.conv_transpose2d(kernel, identity_kernel, stride=dilate_rate)

return dilated

else:

# This is a dense or group-wise (but not DW) kernel

slices = []

for i in range(kernel.size(1)):

dilated = F.conv_transpose2d(kernel[:,i:i+1,:,:], identity_kernel, stride=dilate_rate)

slices.append(dilated)

return torch.cat(slices, dim=1)

def merge_dilated_into_large_kernel(large_kernel, dilated_kernel, dilated_r):

large_k = large_kernel.size(2)

dilated_k = dilated_kernel.size(2)

equivalent_kernel_size = dilated_r * (dilated_k - 1) + 1

equivalent_kernel = convert_dilated_to_nondilated(dilated_kernel, dilated_r)

rows_to_pad = large_k // 2 - equivalent_kernel_size // 2

merged_kernel = large_kernel + F.pad(equivalent_kernel, [rows_to_pad] * 4)

return merged_kernel

class DilatedReparamBlock(nn.Module):

"""

Dilated Reparam Block proposed in UniRepLKNet (https://github.com/AILab-CVC/UniRepLKNet)

We assume the inputs to this block are (N, C, H, W)

"""

def __init__(self, channels, kernel_size, deploy=False, use_sync_bn=False, attempt_use_lk_impl=True):

super().__init__()

self.lk_origin = get_conv2d(channels, channels, kernel_size, stride=1,

padding=kernel_size//2, dilation=1, groups=channels, bias=deploy,

attempt_use_lk_impl=attempt_use_lk_impl)

self.attempt_use_lk_impl = attempt_use_lk_impl

# Default settings. We did not tune them carefully. Different settings may work better.

if kernel_size == 17:

self.kernel_sizes = [5, 9, 3, 3, 3]

self.dilates = [1, 2, 4, 5, 7]

elif kernel_size == 15:

self.kernel_sizes = [5, 7, 3, 3, 3]

self.dilates = [1, 2, 3, 5, 7]

elif kernel_size == 13:

self.kernel_sizes = [5, 7, 3, 3, 3]

self.dilates = [1, 2, 3, 4, 5]

elif kernel_size == 11:

self.kernel_sizes = [5, 5, 3, 3, 3]

self.dilates = [1, 2, 3, 4, 5]

elif kernel_size == 9:

self.kernel_sizes = [5, 5, 3, 3]

self.dilates = [1, 2, 3, 4]

elif kernel_size == 7:

self.kernel_sizes = [5, 3, 3]

self.dilates = [1, 2, 3]

elif kernel_size == 5:

self.kernel_sizes = [3, 3]

self.dilates = [1, 2]

else:

raise ValueError('Dilated Reparam Block requires kernel_size >= 5')

if not deploy:

self.origin_bn = get_bn(channels, use_sync_bn)

for k, r in zip(self.kernel_sizes, self.dilates):

self.__setattr__('dil_conv_k{}_{}'.format(k, r),

nn.Conv2d(in_channels=channels, out_channels=channels, kernel_size=k, stride=1,

padding=(r * (k - 1) + 1) // 2, dilation=r, groups=channels,

bias=False))

self.__setattr__('dil_bn_k{}_{}'.format(k, r), get_bn(channels, use_sync_bn=use_sync_bn))

def forward(self, x):

if not hasattr(self, 'origin_bn'): # deploy mode

return self.lk_origin(x)

out = self.origin_bn(self.lk_origin(x))

for k, r in zip(self.kernel_sizes, self.dilates):

conv = self.__getattr__('dil_conv_k{}_{}'.format(k, r))

bn = self.__getattr__('dil_bn_k{}_{}'.format(k, r))

out = out + bn(conv(x))

return out

def switch_to_deploy(self):

if hasattr(self, 'origin_bn'):

origin_k, origin_b = fuse_bn(self.lk_origin, self.origin_bn)

for k, r in zip(self.kernel_sizes, self.dilates):

conv = self.__getattr__('dil_conv_k{}_{}'.format(k, r))

bn = self.__getattr__('dil_bn_k{}_{}'.format(k, r))

branch_k, branch_b = fuse_bn(conv, bn)

origin_k = merge_dilated_into_large_kernel(origin_k, branch_k, r)

origin_b += branch_b

merged_conv = get_conv2d(origin_k.size(0), origin_k.size(0), origin_k.size(2), stride=1,

padding=origin_k.size(2)//2, dilation=1, groups=origin_k.size(0), bias=True,

attempt_use_lk_impl=self.attempt_use_lk_impl)

merged_conv.weight.data = origin_k

merged_conv.bias.data = origin_b

self.lk_origin = merged_conv

self.__delattr__('origin_bn')

for k, r in zip(self.kernel_sizes, self.dilates):

self.__delattr__('dil_conv_k{}_{}'.format(k, r))

self.__delattr__('dil_bn_k{}_{}'.format(k, r))

class UniRepLKNetBlock(nn.Module):

def __init__(self,

dim,

kernel_size,

drop_path=0.,

layer_scale_init_value=1e-6,

deploy=False,

attempt_use_lk_impl=True,

with_cp=False,

use_sync_bn=False,

ffn_factor=4):

super().__init__()

self.with_cp = with_cp

# if deploy:

# print('------------------------------- Note: deploy mode')

# if self.with_cp:

# print('****** note with_cp = True, reduce memory consumption but may slow down training ******')

self.need_contiguous = (not deploy) or kernel_size >= 7

if kernel_size == 0:

self.dwconv = nn.Identity()

self.norm = nn.Identity()

elif deploy:

self.dwconv = get_conv2d(dim, dim, kernel_size=kernel_size, stride=1, padding=kernel_size // 2,

dilation=1, groups=dim, bias=True,

attempt_use_lk_impl=attempt_use_lk_impl)

self.norm = nn.Identity()

elif kernel_size >= 7:

self.dwconv = DilatedReparamBlock(dim, kernel_size, deploy=deploy,

use_sync_bn=use_sync_bn,

attempt_use_lk_impl=attempt_use_lk_impl)

self.norm = get_bn(dim, use_sync_bn=use_sync_bn)

elif kernel_size == 1:

self.dwconv = nn.Conv2d(dim, dim, kernel_size=kernel_size, stride=1, padding=kernel_size // 2,

dilation=1, groups=1, bias=deploy)

self.norm = get_bn(dim, use_sync_bn=use_sync_bn)

else:

assert kernel_size in [3, 5]

self.dwconv = nn.Conv2d(dim, dim, kernel_size=kernel_size, stride=1, padding=kernel_size // 2,

dilation=1, groups=dim, bias=deploy)

self.norm = get_bn(dim, use_sync_bn=use_sync_bn)

self.se = SEBlock(dim, dim // 4)

ffn_dim = int(ffn_factor * dim)

self.pwconv1 = nn.Sequential(

NCHWtoNHWC(),

nn.Linear(dim, ffn_dim))

self.act = nn.Sequential(

nn.GELU(),

GRNwithNHWC(ffn_dim, use_bias=not deploy))

if deploy:

self.pwconv2 = nn.Sequential(

nn.Linear(ffn_dim, dim),

NHWCtoNCHW())

else:

self.pwconv2 = nn.Sequential(

nn.Linear(ffn_dim, dim, bias=False),

NHWCtoNCHW(),

get_bn(dim, use_sync_bn=use_sync_bn))

self.gamma = nn.Parameter(layer_scale_init_value * torch.ones(dim),

requires_grad=True) if (not deploy) and layer_scale_init_value is not None \

and layer_scale_init_value > 0 else None

self.drop_path = DropPath(drop_path) if drop_path > 0. else nn.Identity()

def forward(self, inputs):

def _f(x):

if self.need_contiguous:

x = x.contiguous()

y = self.se(self.norm(self.dwconv(x)))

y = self.pwconv2(self.act(self.pwconv1(y)))

if self.gamma is not None:

y = self.gamma.view(1, -1, 1, 1) * y

return self.drop_path(y) + x

if self.with_cp and inputs.requires_grad:

return checkpoint.checkpoint(_f, inputs)

else:

return _f(inputs)

def switch_to_deploy(self):

if hasattr(self.dwconv, 'switch_to_deploy'):

self.dwconv.switch_to_deploy()

if hasattr(self.norm, 'running_var') and hasattr(self.dwconv, 'lk_origin'):

std = (self.norm.running_var + self.norm.eps).sqrt()

self.dwconv.lk_origin.weight.data *= (self.norm.weight / std).view(-1, 1, 1, 1)

self.dwconv.lk_origin.bias.data = self.norm.bias + (self.dwconv.lk_origin.bias - self.norm.running_mean) * self.norm.weight / std

self.norm = nn.Identity()

if self.gamma is not None:

final_scale = self.gamma.data

self.gamma = None

else:

final_scale = 1

if self.act[1].use_bias and len(self.pwconv2) == 3:

grn_bias = self.act[1].beta.data

self.act[1].__delattr__('beta')

self.act[1].use_bias = False

linear = self.pwconv2[0]

grn_bias_projected_bias = (linear.weight.data @ grn_bias.view(-1, 1)).squeeze()

bn = self.pwconv2[2]

std = (bn.running_var + bn.eps).sqrt()

new_linear = nn.Linear(linear.in_features, linear.out_features, bias=True)

new_linear.weight.data = linear.weight * (bn.weight / std * final_scale).view(-1, 1)

linear_bias = 0 if linear.bias is None else linear.bias.data

linear_bias += grn_bias_projected_bias

new_linear.bias.data = (bn.bias + (linear_bias - bn.running_mean) * bn.weight / std) * final_scale

self.pwconv2 = nn.Sequential(new_linear, self.pwconv2[1])

class C3_UniRepLKNetBlock(C3):

def __init__(self, c1, c2, n=1, k=7, shortcut=False, g=1, e=0.5):

super().__init__(c1, c2, n, shortcut, g, e)

c_ = int(c2 * e) # hidden channels

self.m = nn.Sequential(*(UniRepLKNetBlock(c_, k) for _ in range(n)))

class C2f_UniRepLKNetBlock(C2f):

def __init__(self, c1, c2, n=1, k=7, shortcut=False, g=1, e=0.5):

super().__init__(c1, c2, n, shortcut, g, e)

self.m = nn.ModuleList(UniRepLKNetBlock(self.c, k) for _ in range(n))

class RepConvN(nn.Module):

"""RepConv is a basic rep-style block, including training and deploy status

This code is based on https://github.com/DingXiaoH/RepVGG/blob/main/repvgg.py

"""

default_act = nn.SiLU() # default activation

def __init__(self, c1, c2, k=3, s=1, p=1, g=1, d=1, act=True, bn=False, deploy=False):

super().__init__()

assert k == 3 and p == 1

self.g = g

self.c1 = c1

self.c2 = c2

self.act = self.default_act if act is True else act if isinstance(act, nn.Module) else nn.Identity()

self.bn = None

self.conv1 = Conv(c1, c2, k, s, p=p, g=g, act=False)

self.conv2 = Conv(c1, c2, 1, s, p=(p - k // 2), g=g, act=False)

def forward_fuse(self, x):

"""Forward process"""

return self.act(self.conv(x))

def forward(self, x):

"""Forward process"""

if hasattr(self, 'conv'):

return self.forward_fuse(x)

id_out = 0 if self.bn is None else self.bn(x)

return self.act(self.conv1(x) + self.conv2(x) + id_out)

def get_equivalent_kernel_bias(self):

kernel3x3, bias3x3 = self._fuse_bn_tensor(self.conv1)

kernel1x1, bias1x1 = self._fuse_bn_tensor(self.conv2)

kernelid, biasid = self._fuse_bn_tensor(self.bn)

return kernel3x3 + self._pad_1x1_to_3x3_tensor(kernel1x1) + kernelid, bias3x3 + bias1x1 + biasid

def _avg_to_3x3_tensor(self, avgp):

channels = self.c1

groups = self.g

kernel_size = avgp.kernel_size

input_dim = channels // groups

k = torch.zeros((channels, input_dim, kernel_size, kernel_size))

k[np.arange(channels), np.tile(np.arange(input_dim), groups), :, :] = 1.0 / kernel_size ** 2

return k

def _pad_1x1_to_3x3_tensor(self, kernel1x1):

if kernel1x1 is None:

return 0

else:

return torch.nn.functional.pad(kernel1x1, [1, 1, 1, 1])

def _fuse_bn_tensor(self, branch):

if branch is None:

return 0, 0

if isinstance(branch, Conv):

kernel = branch.conv.weight

running_mean = branch.bn.running_mean

running_var = branch.bn.running_var

gamma = branch.bn.weight

beta = branch.bn.bias

eps = branch.bn.eps

elif isinstance(branch, nn.BatchNorm2d):

if not hasattr(self, 'id_tensor'):

input_dim = self.c1 // self.g

kernel_value = np.zeros((self.c1, input_dim, 3, 3), dtype=np.float32)

for i in range(self.c1):

kernel_value[i, i % input_dim, 1, 1] = 1

self.id_tensor = torch.from_numpy(kernel_value).to(branch.weight.device)

kernel = self.id_tensor

running_mean = branch.running_mean

running_var = branch.running_var

gamma = branch.weight

beta = branch.bias

eps = branch.eps

std = (running_var + eps).sqrt()

t = (gamma / std).reshape(-1, 1, 1, 1)

return kernel * t, beta - running_mean * gamma / std

def switch_to_deploy(self):

if hasattr(self, 'conv'):

return

kernel, bias = self.get_equivalent_kernel_bias()

self.conv = nn.Conv2d(in_channels=self.conv1.conv.in_channels,

out_channels=self.conv1.conv.out_channels,

kernel_size=self.conv1.conv.kernel_size,

stride=self.conv1.conv.stride,

padding=self.conv1.conv.padding,

dilation=self.conv1.conv.dilation,

groups=self.conv1.conv.groups,

bias=True).requires_grad_(False)

self.conv.weight.data = kernel

self.conv.bias.data = bias

for para in self.parameters():

para.detach_()

self.__delattr__('conv1')

self.__delattr__('conv2')

if hasattr(self, 'nm'):

self.__delattr__('nm')

if hasattr(self, 'bn'):

self.__delattr__('bn')

if hasattr(self, 'id_tensor'):

self.__delattr__('id_tensor')

class RepNBottleneck(nn.Module):

# Standard bottleneck

def __init__(self, c1, c2, shortcut=True, g=1, k=(3, 3), e=0.5): # ch_in, ch_out, shortcut, kernels, groups, expand

super().__init__()

c_ = int(c2 * e) # hidden channels

self.cv1 = RepConvN(c1, c_, k[0], 1)

self.cv2 = Conv(c_, c2, k[1], 1, g=g)

self.add = shortcut and c1 == c2

def forward(self, x):

return x + self.cv2(self.cv1(x)) if self.add else self.cv2(self.cv1(x))

class RepNCSP(nn.Module):

# CSP Bottleneck with 3 convolutions

def __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5): # ch_in, ch_out, number, shortcut, groups, expansion

super().__init__()

c_ = int(c2 * e) # hidden channels

self.cv1 = Conv(c1, c_, 1, 1)

self.cv2 = Conv(c1, c_, 1, 1)

self.cv3 = Conv(2 * c_, c2, 1) # optional act=FReLU(c2)

self.m = nn.Sequential(*(RepNBottleneck(c_, c_, shortcut, g, e=1.0) for _ in range(n)))

def forward(self, x):

return self.cv3(torch.cat((self.m(self.cv1(x)), self.cv2(x)), 1))

class RepNCSPELAN4(nn.Module):

# csp-elan

def __init__(self, c1, c2, c3, c4, c5=1): # ch_in, ch_out, number, shortcut, groups, expansion

super().__init__()

self.c = c3//2

self.cv1 = Conv(c1, c3, 1, 1)

self.cv2 = nn.Sequential(RepNCSP(c3//2, c4, c5), Conv(c4, c4, 3, 1))

self.cv3 = nn.Sequential(RepNCSP(c4, c4, c5), Conv(c4, c4, 3, 1))

self.cv4 = Conv(c3+(2*c4), c2, 1, 1)

def forward(self, x):

y = list(self.cv1(x).chunk(2, 1))

y.extend((m(y[-1])) for m in [self.cv2, self.cv3])

return self.cv4(torch.cat(y, 1))

def forward_split(self, x):

y = list(self.cv1(x).split((self.c, self.c), 1))

y.extend(m(y[-1]) for m in [self.cv2, self.cv3])

return self.cv4(torch.cat(y, 1))

class DilatedReparamBottleneck(RepNBottleneck):

def __init__(self, c1, c2, kernel_size, shortcut=True, g=1, k=(3, 3), e=0.5):

super().__init__(c1, c2, shortcut, g, k, e)

c_ = int(c2 * e) # hidden channels

self.cv1 = DilatedReparamBlock(c1, kernel_size)

class DilatedReparamBottleneckNCSP(RepNCSP):

def __init__(self, c1, c2, n=1, kernel_size=7, shortcut=True, g=1, e=0.5):

super().__init__(c1, c2, n, shortcut, g, e)

c_ = int(c2 * e) # hidden channels

self.m = nn.Sequential(*(DilatedReparamBottleneck(c_, c_, kernel_size, shortcut, g, e=1.0) for _ in range(n)))

class DilatedReparamNCSPNCSPELAN4(RepNCSPELAN4):

def __init__(self, c1, c2, c3, c4, c5=1, c6=7):

super().__init__(c1, c2, c3, c4, c5)

self.cv2 = nn.Sequential(DilatedReparamBottleneckNCSP(c3//2, c4, c5, c6), Conv(c4, c4, 3, 1))

self.cv3 = nn.Sequential(DilatedReparamBottleneckNCSP(c4, c4, c5, c6), Conv(c4, c4, 3, 1))

2.2 注册ultralytics/nn/tasks.py

1)

from ultralytics.nn.backbone.UniRepLKNet import *2)修改def parse_model(d, ch, verbose=True): # model_dict, input_channels(3)

n = n_ = max(round(n * depth), 1) if n > 1 else n # depth gain

if m in (

Classify,

Conv,

ConvTranspose,

GhostConv,

Bottleneck,

GhostBottleneck,

SPP,

SPPF,

DWConv,

Focus,

BottleneckCSP,

C1,

C2,

C2f,

C2fAttn,

C3,

C3TR,

C3Ghost,

nn.ConvTranspose2d,

DWConvTranspose2d,

C3x,

RepC3,

DilatedReparamNCSPNCSPELAN4

):

c1, c2 = ch[f], args[0]2.3 yolov8-DilatedReparamNCSPNCSPELAN4.yaml

# Ultralytics YOLO 🚀, AGPL-3.0 license

# YOLOv8 object detection model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/detect

# Parameters

nc: 80 # number of classes

scales: # model compound scaling constants, i.e. 'model=yolov8n.yaml' will call yolov8.yaml with scale 'n'

# [depth, width, max_channels]

n: [0.33, 0.25, 1024] # YOLOv8n summary: 225 layers, 3157200 parameters, 3157184 gradients, 8.9 GFLOPs

s: [0.33, 0.50, 1024] # YOLOv8s summary: 225 layers, 11166560 parameters, 11166544 gradients, 28.8 GFLOPs

m: [0.67, 0.75, 768] # YOLOv8m summary: 295 layers, 25902640 parameters, 25902624 gradients, 79.3 GFLOPs

l: [1.00, 1.00, 512] # YOLOv8l summary: 365 layers, 43691520 parameters, 43691504 gradients, 165.7 GFLOPs

x: [1.00, 1.25, 512] # YOLOv8x summary: 365 layers, 68229648 parameters, 68229632 gradients, 258.5 GFLOPs

# YOLOv8.0n backbone

backbone:

# [from, repeats, module, args]

- [-1, 1, Conv, [64, 3, 2]] # 0-P1/2

- [-1, 1, Conv, [128, 3, 2]] # 1-P2/4

- [-1, 1, DilatedReparamNCSPNCSPELAN4, [128, 64, 32, 1, 13]]

- [-1, 1, Conv, [256, 3, 2]] # 3-P3/8

- [-1, 1, DilatedReparamNCSPNCSPELAN4, [256, 128, 64, 1, 11]]

- [-1, 1, Conv, [512, 3, 2]] # 5-P4/16

- [-1, 1, DilatedReparamNCSPNCSPELAN4, [512, 256, 128, 1, 9]]

- [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32

- [-1, 1, DilatedReparamNCSPNCSPELAN4, [1024, 512, 256, 1, 7]]

- [-1, 1, SPPF, [1024, 5]] # 9

# YOLOv8.0n head

head:

- [-1, 1, nn.Upsample, [None, 2, 'nearest']]

- [[-1, 6], 1, Concat, [1]] # cat backbone P4

- [-1, 1, DilatedReparamNCSPNCSPELAN4, [512, 256, 128, 1, 5]] # 12

- [-1, 1, nn.Upsample, [None, 2, 'nearest']]

- [[-1, 4], 1, Concat, [1]] # cat backbone P3

- [-1, 1, DilatedReparamNCSPNCSPELAN4, [256, 128, 64, 1, 5]] # 15 (P3/8-small)

- [-1, 1, Conv, [256, 3, 2]]

- [[-1, 12], 1, Concat, [1]] # cat head P4

- [-1, 1, DilatedReparamNCSPNCSPELAN4, [512, 256, 128, 1, 5]] # 18 (P4/16-medium)

- [-1, 1, Conv, [512, 3, 2]]

- [[-1, 9], 1, Concat, [1]] # cat head P5

- [-1, 1, DilatedReparamNCSPNCSPELAN4, [1024, 512, 256, 1, 5]] # 21 (P5/32-large)

- [[15, 18, 21], 1, Detect, [nc]] # Detect(P3, P4, P5)